HTTP Resource

Performance characteristics of SOAP and REST are closely tied to the performance of the HTTP implementation in TIBCO ActiveMatrix BusinessWorks™.

In some situations, you can alter the configuration of the HTTP server that receives incoming HTTP requests for ActiveMatrix BusinessWorks™. Two thread pools can be tuned to handle concurrent incoming requests efficiently.

- Acceptor Threads - These are the Jetty server threads. Acceptor threads are HTTP socket threads for an HTTP Connector resource that accept the incoming HTTP requests. While tuning these threads, the rule of thumb, as per the Jetty documentation, is 1 <= acceptors <= #CPUs.

- Queued Thread Pool - The Queued Thread Pool (QTP) uses the default job queue configuration. The QTP threads accept the requests from the Acceptor Threads.

  - Minimum QTP Threads: The minimum number of QTP threads available for incoming HTTP requests. The HTTP server creates the number of threads specified by this parameter when it starts up. The default value is 10.

  - Maximum QTP Threads: The maximum number of QTP threads available for incoming HTTP requests. The HTTP server does not create more threads than the number specified by this parameter. The default value is 75. This limit determines the number of incoming requests that can be processed at a time. Setting it too high allows that many threads to be created, which can drastically reduce performance.
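The constraints described in the list above can be expressed as a small sanity check. This is a minimal sketch, not a BusinessWorks API: the function names `clamp_acceptors` and `check_qtp` are hypothetical, and the only values taken from the text are the acceptor rule of thumb and the QTP defaults of 10 and 75.

```python
import os

# Defaults quoted in the text above.
DEFAULT_MIN_QTP = 10
DEFAULT_MAX_QTP = 75


def clamp_acceptors(requested, cpus=None):
    """Apply the rule of thumb 1 <= acceptors <= #CPUs (hypothetical helper)."""
    if cpus is None:
        # os.cpu_count() may return None, so fall back to 1.
        cpus = os.cpu_count() or 1
    return max(1, min(requested, cpus))


def check_qtp(min_threads=DEFAULT_MIN_QTP, max_threads=DEFAULT_MAX_QTP):
    """Reject obviously inconsistent QTP settings (hypothetical helper)."""
    if min_threads < 1:
        raise ValueError("minimum QTP threads must be at least 1")
    if max_threads < min_threads:
        raise ValueError("maximum QTP threads must be >= minimum QTP threads")
    return min_threads, max_threads


if __name__ == "__main__":
    print(clamp_acceptors(8, cpus=4))   # request exceeds the CPU count
    print(check_qtp())                  # defaults from the text
```

A check like this only catches inconsistent values; choosing good values still depends on the peak concurrency requirement and the available hardware.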

If you expect a large number of incoming requests, you can increase these values to handle more requests concurrently. Increase them only if you have a higher peak concurrent request requirement and enough hardware resources to meet it.

In addition to the QTP threads, REST services also include the bw-flowlimit-executor-provider thread pool, which is a part of the Jersey framework. The QTP threads offload the work to the bw-flowlimit-executor-provider threads, which handle the processing of the RESTful services. The core pool size of these threads is equal to the minimum QTP thread pool size; that is, the settings are picked from the values provided for the QTP pool on the HTTP Connector resource. The pool size values cannot be modified directly, so to change the thread settings you must change the QTP thread pool values.

All incoming requests are processed by one of the available Jersey threads. If no Jersey thread is available, the request is queued (based on the blocking queue size) until the queue fills up. If the load persists and the queue is already full, the Jersey threads are scaled up to maxQTP (in other words, the Jersey maxPoolSize); that is, the setting is picked from the values provided for the QTP pool on the HTTP Connector resource.
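The ordering described above (core threads first, then the queue, then extra threads up to the maximum) can be illustrated with a toy model. This is a sketch under stated assumptions, not the Jersey implementation: `PoolModel` is a hypothetical class, requests are assumed never to complete so that the ordering is visible, the queue capacity is an arbitrary illustrative value, and the `saturated` outcome covers a case the text does not describe.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class PoolModel:
    """Toy model of the core -> queue -> max ordering (hypothetical class)."""
    core_size: int        # corresponds to the minimum QTP threads
    max_size: int         # corresponds to the maximum QTP threads
    queue_capacity: int   # blocking queue size (illustrative value)
    busy: int = 0         # threads currently processing a request
    queued: int = 0       # requests waiting in the queue

    def submit(self):
        """Place one long-running request and report where it went."""
        if self.busy < self.core_size:
            self.busy += 1
            return "core-thread"
        if self.queued < self.queue_capacity:
            self.queued += 1
            return "queued"
        if self.busy < self.max_size:
            # The queue is full, so the pool scales beyond the core size.
            self.busy += 1
            return "extra-thread"
        # Not covered by the text: both the queue and the pool are full.
        return "saturated"


if __name__ == "__main__":
    pool = PoolModel(core_size=2, max_size=4, queue_capacity=3)
    outcomes = Counter(pool.submit() for _ in range(10))
    print(dict(outcomes))
```

In this model, extra threads appear only after the queue is full, which is why a short burst of requests may never push the pool beyond its core size.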

The bw-flowlimit-executor-provider threads are created for every service deployed in the application EAR. For example, if 10 services are deployed in a single EAR on a single appnode container, the number of bw-flowlimit-executor-provider threads created is the number of services multiplied by CorePoolSize, that is, 10 * CorePoolSize.
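The arithmetic above is easy to work through for a concrete deployment. The sketch below uses hypothetical function names. The first calculation follows the text directly. The second is an inference rather than a documented figure: it assumes every service pool scales to the maximum at the same time.

```python
def flowlimit_core_threads(services, core_pool_size):
    """Threads created up front: number of services * CorePoolSize."""
    return services * core_pool_size


def flowlimit_peak_threads(services, max_pool_size):
    """Assumed worst case: every service pool scaled up to its maximum."""
    return services * max_pool_size


if __name__ == "__main__":
    # 10 services with the default QTP values of 10 (min) and 75 (max).
    print(flowlimit_core_threads(10, 10))   # 100
    print(flowlimit_peak_threads(10, 75))   # 750
```

With the default values, 10 services account for 100 core threads on the appnode, so raising the minimum QTP setting multiplies across every deployed service.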

For more information about the configuration of these parameters, see Tuning Parameters.

Sample Test

Scenarios Under Test: Scenario 1 is a simple HTTP Receiver with HTTP Response and echo implementation.

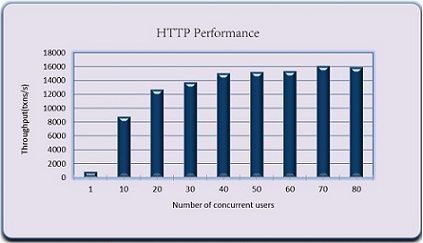

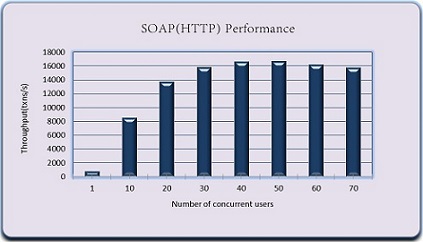

The graphs below illustrate the HTTP and SOAP (HTTP) service performance in terms of throughput scalability with increasing concurrent users for the given hardware. For more information, see Hardware Configuration.

For scenario details, see Appendix A.