Vertical Scaling

Vertically scaling an application changes the resource limits, such as memory, that a container platform such as Docker, Kubernetes, or Pivotal Platform applies to all instances of the application. On Pivotal Platform, multiple applications can be deployed with one cf push command by describing them in a single manifest; this requires attention to the directory structure and the path lines in the manifest. The manifest can also define the number of instances and the memory allocation that are used when each application is deployed.
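As a rough illustration of such a manifest, the sketch below writes a manifest.yml that describes two applications with their own path, memory, and instance settings. The application names, paths, and sizes are placeholders chosen for this example, not values from a real deployment.

```shell
# Write a hypothetical manifest.yml describing two applications.
# App names, paths, and sizes are placeholders for illustration only.
cat > manifest.yml <<'EOF'
---
applications:
- name: web-app
  path: ./web-app
  memory: 1G
  instances: 2
- name: worker-app
  path: ./worker-app
  memory: 512M
  instances: 1
EOF

# With the cf CLI installed and a space targeted, one push deploys both apps:
# cf push -f manifest.yml
```

Raising the memory value of an entry and pushing again is one way to scale that application vertically.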

Docker:

For Docker, vertical scaling is achieved by increasing the memory allocated to the container and by increasing the number of CPUs available to it.

Use docker run -m MEMORY to set the memory limit applied to your application's container. MEMORY must be a positive integer followed by a unit suffix, such as M for megabytes or G for gigabytes.

Use docker run --cpus 2.00 to set the number of CPUs allocated to the container. The value may be fractional (for example, 1.5). The default, 0.000, means no limit: if you do not specify this parameter, the container can use all the CPUs available on the host or instance where it is running.

docker run -m 1024M --cpus 2.0 -p <Host_ApplicationPortToExpose>:<Container_ApplicationPort> <ApplicationImageName>
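The memory format rule above can be checked before a container is started. The following is a minimal sketch, assuming a hypothetical helper named docker_run_cmd that is not part of Docker; it only prints the command it would run, and its check is deliberately stricter than what Docker itself accepts.

```shell
# Print (rather than execute) a docker run command after checking the memory value.
# docker_run_cmd is a hypothetical helper, not part of Docker.
docker_run_cmd() {
  mem=$1 cpus=$2 image=$3
  # Accept a positive integer followed by one unit letter (b, k, m, g; either case).
  if ! printf '%s\n' "$mem" | grep -Eq '^[1-9][0-9]*[bkmgBKMG]$'; then
    echo "invalid memory value: $mem" >&2
    return 1
  fi
  echo "docker run -m $mem --cpus $cpus $image"
}

# my-app-image is a placeholder image name.
docker_run_cmd 1024M 2.0 my-app-image
```

Scaling up then means stopping the container and starting it again with larger values, for example 2G and 4.0.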

Kubernetes:

    containers:
    - resources:
        limits:
          cpu: 0.5
          memory: 512Mi
        requests:
          cpu: 0.5
          memory: 512Mi

Kubernetes schedules a Pod to run on a Node only if the Node has enough CPU and RAM available to satisfy the total CPU and RAM requested by all of the containers in the Pod. While a container runs on a Node, Kubernetes does not allow the CPU and RAM it consumes to exceed the limits you specify for it. If a container exceeds its RAM limit, it is terminated with an out-of-memory condition. If a container exceeds its CPU limit, it becomes a candidate for having its CPU use throttled.
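To show where requests and limits sit in a complete manifest, the sketch below writes a minimal Pod definition to pod.yaml. The Pod name, container name, and image are assumptions made for this example; the CPU value 500m is the same quantity as 0.5 CPU.

```shell
# Write a hypothetical Pod manifest with CPU and memory requests and limits.
# The names scaling-demo and app, and the nginx image, are placeholders.
cat > pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: scaling-demo
spec:
  containers:
  - name: app
    image: nginx
    resources:
      requests:
        cpu: "500m"
        memory: "512Mi"
      limits:
        cpu: "500m"
        memory: "512Mi"
EOF

# With kubectl configured for a cluster, the Pod could be created with:
# kubectl apply -f pod.yaml
```

Vertical scaling in this setup amounts to raising the values under requests and limits and deploying the Pod again.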

You can improve reliability by specifying a request that is a little higher than what you expect the container to use. If you specify a request, a Pod is guaranteed to be able to use that much of the resource.

If you don't specify a RAM limit, Kubernetes places no upper bound on the amount of RAM a Container can use. A Container could use the entire RAM available on the Node where the Container is running. Similarly, if you don't specify a CPU limit, Kubernetes places no upper bound on CPU resources, and a Container could use all of the CPU resources available on the Node. In that case, you do not need to perform vertical autoscaling.

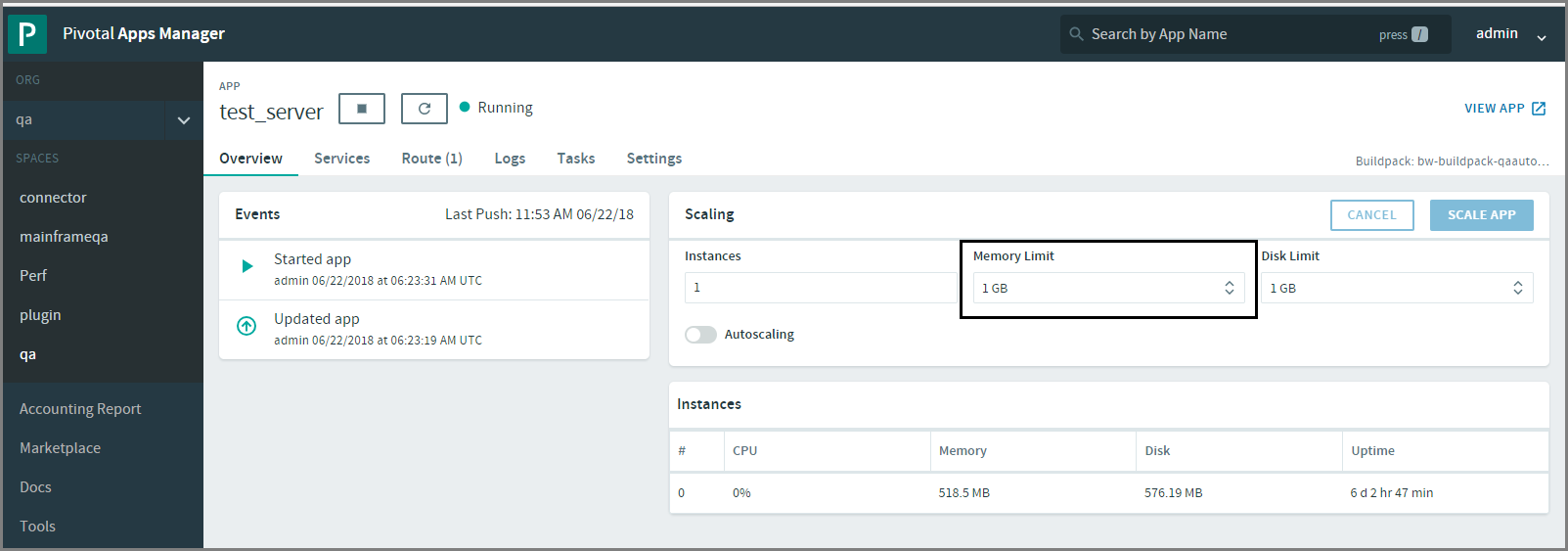

Pivotal Platform:

For Cloud Foundry, vertical scaling is done by increasing the container resources, i.e. memory and disk. You can do this through the Cloud Foundry command line (cf CLI) as well as through the Cloud Foundry UI.

Use cf scale APP -m MEMORY to change the memory limit applied to all instances of your application. MEMORY must be an integer followed by either an M, for megabytes, or G, for gigabytes.
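The command above can be wrapped so that it is previewed before it is run. This is only a sketch: run and scale_memory are hypothetical helper names, my-app is a placeholder application name, and the DRY_RUN switch is an assumption of this example rather than a cf CLI feature.

```shell
# Hypothetical helper: print the command by default, run it only when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Change the memory limit of every instance of an app (my-app is a placeholder).
scale_memory() {
  run cf scale "$1" -m "$2"
}

scale_memory my-app 1G
```

Setting DRY_RUN=0 executes the command for real, which requires the cf CLI to be installed and logged in to a target.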

The snapshot below shows how vertical scaling can be done through the Pivotal Platform UI.