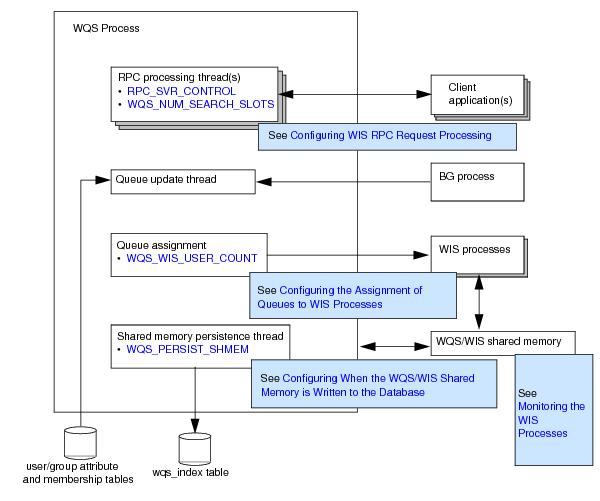

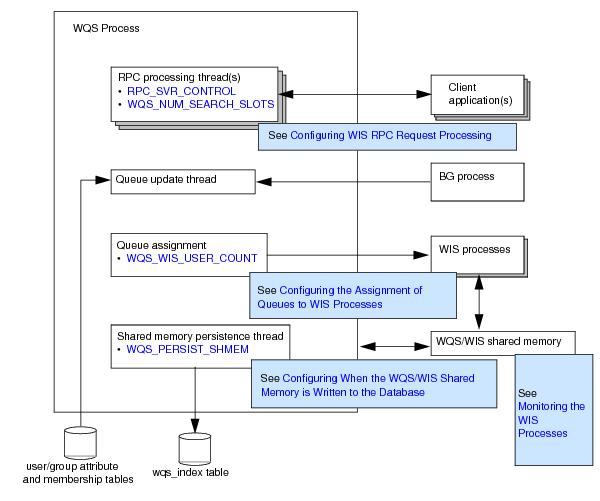

The Work Queue Server (WQS) process handles the listing of work queues. The WQS process allocates one or more queues to each WIS process and responds to client RPC requests to access these queues.

The WQS process is multi-threaded, allowing it to perform multiple tasks simultaneously. Different threads are used to:

To process RPC requests, both the WQS and WIS processes access a pool of “worker” threads that is provided by a multi-threaded RPC server shared library (SWRPCMTS). You can use the RPC_SVR_CONTROL process attribute to define the number of threads that are available in the SWRPCMTS library to process RPC requests.

You can adjust the value of this process attribute to balance the response times of the WQS and WIS processes to RPC requests against the available CPU capacity. Increasing the number of threads improves the throughput of client RPC requests, but at the cost of increased CPU usage.
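The trade-off can be illustrated with a generic fixed-size worker pool. This is a minimal Python sketch under stated assumptions, not the SWRPCMTS library: `handle_rpc` and `serve` are hypothetical names, the 50 ms sleep simulates an I/O-bound request, and `worker_threads` merely plays the role of the thread count configured through RPC_SVR_CONTROL (the attribute's actual format is not modelled).

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_rpc(request_id: int) -> str:
    """Hypothetical stand-in for the work one client RPC request needs."""
    time.sleep(0.05)  # simulate waiting on I/O, e.g. a database read
    return f"reply-{request_id}"


def serve(requests, worker_threads: int) -> list:
    """Process requests with a fixed-size pool of worker threads.

    worker_threads stands in for the thread count set via RPC_SVR_CONTROL.
    Replies are returned in request order.
    """
    with ThreadPoolExecutor(max_workers=worker_threads) as pool:
        return list(pool.map(handle_rpc, requests))


if __name__ == "__main__":
    for threads in (1, 4):
        start = time.perf_counter()
        replies = serve(range(8), worker_threads=threads)
        elapsed = time.perf_counter() - start
        print(f"{threads} thread(s): {len(replies)} replies in {elapsed:.2f} s")
```

With one thread the eight simulated requests run one after another (roughly 0.4 s); with four they overlap (roughly 0.1 s). In a real server each additional busy thread also consumes CPU, which is the cost described above.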

When iProcess Engine starts up, the WQS process is responsible for assigning all the work queues to WIS processes.

By default, queues are assigned to WIS processes dynamically, using either the round-robin or on-demand method (as determined by the WQS_ROUND_ROBIN parameter in the staffcfg file, which is located in the SWDIR\etc directory; see WQS_ROUND_ROBIN on page 49):

- Round-robin. This method assigns a work queue to each WIS process alphabetically, cycling round until all work queues are assigned. For example, if a system has 5 WIS processes and 20 work queues A to O then:

  The round-robin method takes no account of queue size. It is best used when the messages are fairly evenly distributed between the majority of queues and user access is also evenly spread.

- On-demand. This method assigns work queues to WIS processes based on cost. All work queues have a weighting (determined by the WQS_QUEUE_WEIGHTING parameter) that determines the cost of the work queue to the WIS process. Queues are assigned to the WIS process with the lowest overall cost. The more work queues that are allocated to a WIS process, the higher its cost, so the fewer new work queues are allocated to it. The cost calculation is as follows:

  - wicount is the number of work items the WIS process is currently processing.
  - qcount is the number of work queues the WIS process is currently processing.
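The round-robin rule can be modelled in a few lines. This Python sketch is illustrative only: `round_robin` is a hypothetical helper, WIS processes are identified by instance numbers 1 to N, and queue names are assumed to sort alphabetically by plain string comparison. It uses the queue names A to O with five WIS processes.

```python
import string


def round_robin(queues, wis_count: int) -> dict:
    """Assign queues alphabetically to WIS instances 1..wis_count, cycling round."""
    assignment = {wis: [] for wis in range(1, wis_count + 1)}
    for index, queue in enumerate(sorted(queues)):
        assignment[index % wis_count + 1].append(queue)
    return assignment


if __name__ == "__main__":
    queues = list(string.ascii_uppercase[:15])  # queue names A to O
    for wis, assigned in round_robin(queues, 5).items():
        print(f"WIS {wis}: {', '.join(assigned)}")
```

Each WIS process receives every fifth queue (WIS 1 gets A, F and K; WIS 5 gets E, J and O), regardless of how many work items each queue holds.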

The number of items in a work queue is taken from data that has been persisted to the wqs_index database table. If, for example, a new queue is added to iProcess Engine after it has started, the allocation of work queues may not reflect the actual number of work items in each queue. To correct this, restart iProcess Engine, which re-allocates the work queues according to the latest work item counts.

To control how work queues are allocated to WIS processes, you can adjust the WQS_QUEUE_WEIGHTING parameter. This parameter changes the cost of a work queue to a WIS process: the larger the value, the more the number of work queues (rather than the number of work items in those queues) determines whether a work queue is allocated to a WIS process. Therefore, if you have many work queues with a similar number of work items in each, you may want to increase the value of the WQS_QUEUE_WEIGHTING parameter. If you have only a few work queues that contain large numbers of work items, you may want to lower the value.
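The effect of WQS_QUEUE_WEIGHTING can be explored with a small model of on-demand allocation. This Python sketch rests on assumptions the text does not confirm: it takes the cost of a WIS process to be wicount + qcount × WQS_QUEUE_WEIGHTING, processes queues in the order given, and breaks ties in favour of the lowest instance number. The function name `on_demand` and the queue names are hypothetical.

```python
def on_demand(queue_sizes: dict, wis_count: int, weighting: int) -> dict:
    """Give each queue to the WIS process whose current cost is lowest.

    Assumed cost model: cost = wicount + qcount * weighting, where wicount is
    the work items already assigned and qcount the queues already assigned.
    """
    wicount = [0] * wis_count
    qcount = [0] * wis_count
    assignment = {}
    for queue, items in queue_sizes.items():
        costs = [wicount[w] + qcount[w] * weighting for w in range(wis_count)]
        target = costs.index(min(costs))  # ties go to the lowest-numbered process
        assignment[queue] = target + 1    # report 1-based WIS instance numbers
        wicount[target] += items
        qcount[target] += 1
    return assignment


if __name__ == "__main__":
    sizes = {"big": 1000, "q1": 10, "q2": 10, "q3": 10}
    for weighting in (0, 1000):
        print(weighting, on_demand(sizes, wis_count=2, weighting=weighting))
```

With a weighting of 0 only work item counts matter, so the WIS process holding the large queue receives nothing else. With a weighting of 1000 the queue count dominates: when q3 is placed, WIS 2 already holds two queues and its cost (2020) exceeds that of WIS 1 (2000), so q3 goes to WIS 1.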

You can also dedicate WIS processes to handling either user queues or group queues by specifying the WQS_WIS_USER_COUNT process attribute for the WQS process. This attribute defines the number of WIS processes that should be dedicated to handling user queues and group queues respectively (either as a fixed number or as a percentage of the available processes). See WQS_WIS_USER_COUNT on page 238 for more information.

If you have certain queues that are very large or very busy, you may find it useful to dedicate specific WIS processes to handling only those queues (leaving the remaining queues to be dynamically assigned to the remaining WIS processes).

To dedicate a specific WIS process to handling a specific queue:

3. To assign a queue to a specific WIS process, assign the WIS instance number that you want the queue to use as the value of the SW_WISINST attribute for that queue. (You can use the swadm show_processes command to list the available WIS instances; see Show Server Processes.)

Once a WIS process has been dedicated to handling a specific queue or queues, it will handle only those queues. It is no longer available for dynamic queue allocation.

There is one exception to this: if all the available WIS processes are dedicated to handling specific queues, and a new queue is added, the queues are no longer treated as dedicated. This means that:

- the dedicated WIS processes continue to handle their assigned queues (but may also have to handle the newly added queue).
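The rules for dedicated queues, including the exception, can be modelled as follows. This is a hedged Python sketch, not the WQS algorithm: `allocate` and `round_robin_over` are hypothetical helpers, queue attributes are represented as a plain dictionary from queue name to SW_WISINST value, and round-robin stands in for whichever dynamic method WQS_ROUND_ROBIN selects.

```python
def round_robin_over(queues, instances) -> dict:
    """Spread queues alphabetically over the given WIS instance numbers."""
    return {q: instances[i % len(instances)] for i, q in enumerate(sorted(queues))}


def allocate(queues, wis_count: int, sw_wisinst: dict) -> dict:
    """Honour SW_WISINST values first, then allocate the other queues dynamically.

    sw_wisinst maps a queue name to the WIS instance number it is dedicated to.
    """
    assignment = {q: sw_wisinst[q] for q in queues if q in sw_wisinst}
    dedicated = set(assignment.values())
    free = [w for w in range(1, wis_count + 1) if w not in dedicated]
    remaining = [q for q in queues if q not in sw_wisinst]
    if remaining and not free:
        # The exception: every WIS process is dedicated, so dedication is
        # ignored when placing the new queues. Dedicated processes keep their
        # own queues but may also receive new ones.
        free = list(range(1, wis_count + 1))
    assignment.update(round_robin_over(remaining, free))
    return assignment


if __name__ == "__main__":
    print(allocate(["claims", "orders", "hr", "it"], 3, {"claims": 1}))
    print(allocate(["a", "b", "new"], 2, {"a": 1, "b": 2}))
```

In the first call WIS 1 handles only the dedicated claims queue and the other queues are spread over WIS 2 and WIS 3. In the second call both processes are dedicated, so the new queue is placed on WIS 1 alongside its dedicated queue.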

The WQS/WIS shared memory cache holds summary information about work queues, such as which WIS process is handling a queue, how many work items it contains, how many new items, items with deadlines and so on. This information is constantly updated by the WQS and WIS processes.

The shared memory persistence thread wakes up every WQS_PERSIST_SHMEM seconds and writes the contents of the WQS/WIS shared memory to the wqs_index database table.
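The persistence thread follows a common periodic-snapshot pattern, sketched below in Python with an in-memory SQLite database. The sketch is a model under stated assumptions: a dictionary guarded by a lock stands in for the WQS/WIS shared memory, `persistence_loop` is a hypothetical name, `interval` plays the role of WQS_PERSIST_SHMEM, and the `queue_name` column is invented for illustration (only `total_items` is named in the text).

```python
import sqlite3
import threading
import time


def persistence_loop(shmem: dict, lock: threading.Lock, conn, interval: float,
                     stop: threading.Event) -> None:
    """Every `interval` seconds, copy the shared summary into wqs_index."""
    while not stop.wait(interval):  # wait() returns True once stop is set
        with lock:
            snapshot = list(shmem.items())  # consistent copy taken under the lock
        with conn:  # commit the writes as one transaction
            conn.executemany(
                "INSERT OR REPLACE INTO wqs_index (queue_name, total_items) "
                "VALUES (?, ?)",
                snapshot,
            )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", check_same_thread=False)
    conn.execute(
        "CREATE TABLE wqs_index (queue_name TEXT PRIMARY KEY, total_items INTEGER)"
    )
    shmem, lock, stop = {"claims": 42}, threading.Lock(), threading.Event()
    worker = threading.Thread(
        target=persistence_loop, args=(shmem, lock, conn, 0.1, stop)
    )
    worker.start()
    time.sleep(0.35)  # let the thread run a few cycles
    stop.set()
    worker.join()
    print(conn.execute("SELECT queue_name, total_items FROM wqs_index").fetchall())
```

Using `Event.wait` rather than `time.sleep` lets the loop stop promptly when asked, instead of first finishing a full interval.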

When a WIS process starts up, it needs to know how many work items are in each queue that it is handling, so that it can determine whether or not to cache the queue immediately (see Configuring When WIS Processes Cache Their Queues). The WIS process can therefore read this information from the total_items column in the wqs_index database table.
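A WIS process's startup check might look like the following Python sketch. It is a model under stated assumptions: the `queue_name` column, the `queues_to_cache_now` function and the `cache_threshold` rule (cache immediately when total_items exceeds the threshold) are hypothetical; the actual criteria are covered in Configuring When WIS Processes Cache Their Queues.

```python
import sqlite3


def queues_to_cache_now(conn, my_queues, cache_threshold: int) -> list:
    """Return the queues this WIS process would cache at startup.

    Assumption for illustration: a queue is cached immediately when its
    persisted total_items exceeds cache_threshold.
    """
    if not my_queues:
        return []
    placeholders = ",".join("?" * len(my_queues))
    rows = conn.execute(
        "SELECT queue_name, total_items FROM wqs_index "
        f"WHERE queue_name IN ({placeholders})",
        list(my_queues),
    ).fetchall()
    return sorted(name for name, total in rows if total > cache_threshold)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE wqs_index (queue_name TEXT PRIMARY KEY, total_items INTEGER)"
    )
    conn.executemany(
        "INSERT INTO wqs_index VALUES (?, ?)",
        [("claims", 5000), ("hr", 12), ("orders", 900)],
    )
    print(queues_to_cache_now(conn, ["claims", "orders"], cache_threshold=1000))
```

Because the counts come from the last persisted snapshot rather than from live shared memory, they can lag slightly behind the true queue sizes.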