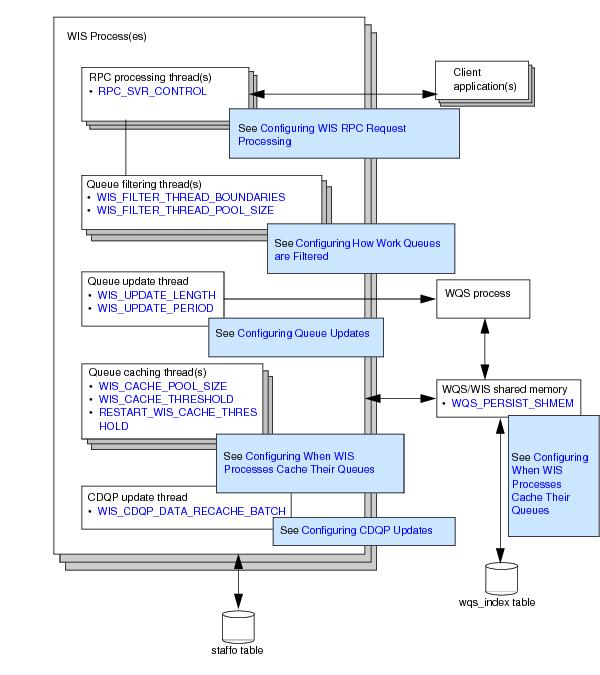

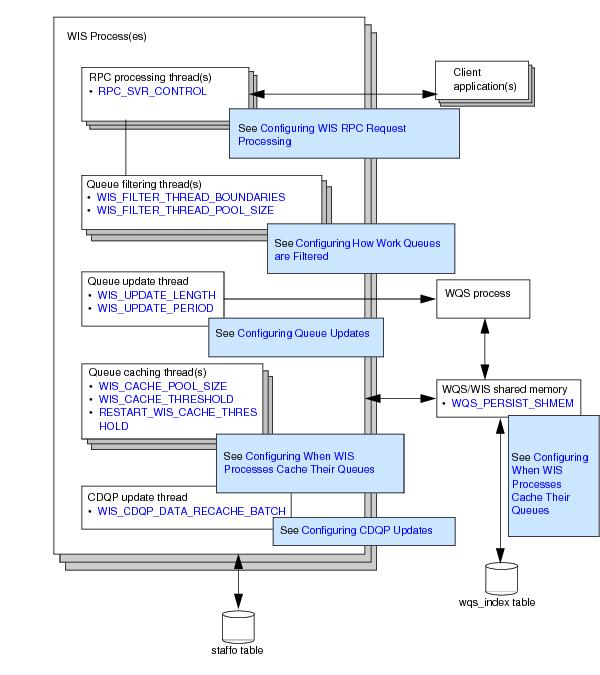

The Work Item Server (WIS) process handles the listing of work items in user and group queues. Each WIS process is allocated one or more queues to handle by the WQS process and responds to client RPC requests to process work items held in these queues.

You can use the swadm add_process and delete_process commands to change the number of WIS processes on your system according to your requirements. See Using SWDIR\util\swadm to Administer Server Processes for more information about how to use these commands.

The WIS process is multi-threaded, allowing it to perform multiple tasks simultaneously. Different threads are used to:

• cache the information that the WIS process maintains about each work queue that it is handling, allowing the WIS processes to respond quickly to RPC requests from client applications.

You can use the SWDIR\util\plist -w command to monitor the operation of the WIS processes. TIBCO recommends you do this regularly, particularly in the following circumstances:

The format of the SWDIR\util\plist -w command is:

plist -w[V][v] [WIS]

where:

• V can be used to display additional information (the LastCacheTime and CDQPVer columns)

• v can be used to display additional information (the Version, NewVers, DelVers, ExpVers, UrgVers, and QParamV columns)

• WIS is the number of a specific WIS process, and can be used to display details only for that WIS process. If this parameter is omitted, the command displays details for all the WIS processes.

Use the plist -w command to view detailed information about the WIS processes such as the number of items in the queue, whether the queue is disabled, and the number of new items in each WIS process.

Use the plist -wVv command to view all the additional information that is returned by the plist -wV and the plist -wv commands.
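The option combinations described above can be sketched as a small helper that assembles the argument list for `plist -w[V][v] [WIS]`. This is only an illustration: the function name and its parameters are hypothetical, and the bare `plist` executable name assumes that the SWDIR\util directory is on the path or is prefixed by the caller.

```python
def build_plist_args(show_cache_columns=False, show_version_columns=False, wis=None):
    """Assemble arguments for `plist -w[V][v] [WIS]` (hypothetical helper)."""
    flag = "-w"
    if show_cache_columns:
        flag += "V"  # adds the LastCacheTime and CDQPVer columns
    if show_version_columns:
        flag += "v"  # adds the Version, NewVers, DelVers, ExpVers, UrgVers, QParamV columns
    args = ["plist", flag]
    if wis is not None:
        args.append(str(wis))  # restrict the output to one WIS process
    return args


# All additional columns, for WIS process number 2 only.
print(" ".join(build_plist_args(True, True, wis=2)))
```

Omitting the `wis` argument corresponds to displaying details for all the WIS processes.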

The plist -w[V][v] [WIS] command displays the following information.

To process RPC requests, both the WIS and WQS processes access a pool of “worker” threads that is provided by a multi-threaded RPC server shared library (SWRPCMTS). You can use the RPC_SVR_NUM_THREADS process attribute to define the number of threads that are available in the SWRPCMTS library to process RPC requests.

You can adjust the value of this process attribute to balance the response times of the WQS and WIS processes when processing RPC requests against available CPU capacity. Increasing the number of threads improves the throughput of client RPC requests, but at the cost of increased CPU usage.

By default, the WIS process uses the thread that is processing an RPC request to perform any work queue filtering required by that RPC request. This is perfectly adequate if the queues are small and the filter criteria are simple. However, the time taken to filter a queue can increase significantly as the number of work items in the queue grows and/or the complexity of the filter criteria increases. This can result in a perceptible delay for the user viewing the work queue.

To cope with this situation, the WIS process contains a pool of queue filtering threads that can be used to filter work queues more quickly. The following process attributes allow you to configure how and when these threads are used:

• WIS_FILTER_THREAD_BOUNDARIES allows you to define when a work queue should be split into multiple "blocks" of work for filtering purposes. You can define up to 4 threshold values for the number of work items in a queue. As each threshold is passed, an additional block of filtering work is created, which will be handled by the first available queue filtering thread.

• WIS_FILTER_THREAD_POOL_SIZE allows you to define the number of queue filtering threads in the pool. These threads are used to process all additional filtering blocks generated by the WIS_FILTER_THREAD_BOUNDARIES thresholds. Increasing the number of threads in this pool allows more blocks of filtering work to be processed in parallel, but at the cost of increasing the CPU usage of the WIS process.
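To make the threshold rule concrete, the following sketch counts how many blocks of filtering work a queue of a given size would produce. It rests on two assumptions that are not confirmed by this guide: that a threshold counts as "passed" when the queue holds more items than the threshold value, and that one block always exists before any threshold is passed. The function name and the threshold values are purely illustrative.

```python
def count_filter_blocks(item_count, boundaries):
    """Return the number of filtering blocks for a queue (illustrative sketch).

    boundaries: up to 4 threshold values, as for WIS_FILTER_THREAD_BOUNDARIES.
    """
    if len(boundaries) > 4:
        raise ValueError("at most 4 threshold values can be defined")
    # Assumption: a threshold is "passed" when item_count exceeds it.
    passed = sum(1 for boundary in boundaries if item_count > boundary)
    # One block always exists; each passed threshold adds another.
    return 1 + passed


# Hypothetical thresholds, chosen only for the example.
thresholds = [1000, 2000, 5000, 10000]
for size in (500, 2500, 20000):
    print(size, count_filter_blocks(size, thresholds))
```

With more blocks than threads in the pool, some blocks would have to wait for a free filtering thread, which is the trade-off that WIS_FILTER_THREAD_POOL_SIZE controls.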

• The WIS process receives 5 RPC requests to filter the queue.

• It calls the WQS process for a new queue to handle when required (i.e. when the WQS process has processed a MOVESYSINFO event and sent out an SE_WQSQUEUE_ADDED event to the WIS process).

The queue update thread performs updates for WIS_UPDATE_LENGTH seconds or until all queues have been processed, at which point it goes to sleep for WIS_UPDATE_PERIOD seconds. If the thread has not gone through all the queues within the WIS_UPDATE_LENGTH time, its next update starts from the point at which the previous update finished.
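The resume behavior of the queue update thread can be sketched as a single simulated update cycle. This is a simplified model, not the WIS implementation: elapsed time is simulated with a fixed, hypothetical cost per queue, the sleep for WIS_UPDATE_PERIOD seconds between cycles is left out, and the wrap-around to the first queue within one cycle is an assumption.

```python
def run_update_cycle(queues, start_index, update_length, cost_per_queue):
    """Simulate one wake-up of the queue update thread (illustrative sketch).

    Returns the queues processed in this cycle and the index from which
    the next cycle should resume.
    """
    processed = []
    elapsed = 0.0
    index = start_index
    # Stop when every queue has been processed or the time budget is used up.
    while len(processed) < len(queues) and elapsed < update_length:
        processed.append(queues[index])
        elapsed += cost_per_queue            # simulated time spent on this queue
        index = (index + 1) % len(queues)    # assumption: wrap to the first queue
    return processed, index


queues = ["q1", "q2", "q3", "q4", "q5"]
position = 0
for cycle in range(3):
    done, position = run_update_cycle(queues, position, update_length=2, cost_per_queue=1.0)
    print(cycle, done)
```

In this model, a short WIS_UPDATE_LENGTH relative to the cost of updating each queue means that several cycles are needed before every queue has been visited.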

The WQS/WIS processes maintain an in-memory cache of the information that each WIS process contains about each work queue that it is handling. Caching this information allows the WIS processes to respond quickly to RPC requests from client applications.

However, the amount of time that a WIS process takes to start up is heavily influenced by the number of queues that it has to cache, the number of work items in the queue, the number of CDQPs defined in the queue, and the general load on the machine.

You can monitor how long a WIS process is taking to start up using the plist -wV command, which is under the SWDIR\util directory (see Monitoring the WIS Processes). The LastCacheTime column shows the number of milliseconds that the WIS process took to cache each queue when it was last cached.

• when they are first handled by a WIS process. This will be either when iProcess Engine starts up or, for queues that are added while the system is running, after a MoveSysInfo event request.

You control which queues are cached when they are first handled by a WIS process by using a combination of the WISCACHE queue attribute and the WIS_CACHE_THRESHOLD or RESTART_WIS_CACHE_THRESHOLD process attributes. When the WIS process first handles a queue, it checks the value of the queue’s WISCACHE attribute:

• If WISCACHE is set to YES, the WIS process caches the queue (irrespective of how many work items the queue contains).

• If WISCACHE has not been created, or has not been set, the WIS process only caches the queue if the number of work items in the queue equals or exceeds the value of the WIS_CACHE_THRESHOLD or RESTART_WIS_CACHE_THRESHOLD process attribute.
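The two rules above can be expressed as a short decision function. This is a minimal sketch: the function name is hypothetical, `None` stands for a WISCACHE attribute that has not been created or set, and the caller is assumed to pass in whichever of WIS_CACHE_THRESHOLD or RESTART_WIS_CACHE_THRESHOLD applies. Any other WISCACHE value is not described in this section, so the sketch rejects it rather than guess.

```python
def caches_on_first_handle(wiscache, total_items, threshold):
    """Decide whether a queue is cached when a WIS process first handles it.

    wiscache:    "YES", or None if the attribute is not created or not set.
    total_items: number of work items in the queue.
    threshold:   applicable WIS_CACHE_THRESHOLD or RESTART_WIS_CACHE_THRESHOLD value.
    """
    if wiscache == "YES":
        return True  # cached irrespective of the number of work items
    if wiscache is None:
        return total_items >= threshold  # cached only at or above the threshold
    raise ValueError("WISCACHE value not covered by this sketch: %r" % (wiscache,))


print(caches_on_first_handle("YES", 0, 1000))
print(caches_on_first_handle(None, 999, 1000))
```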

When the WIS process starts up, it reads the number of work items in each work queue from the total_items column in the wqs_index database table. This table is populated from the contents of the WQS/WIS shared memory, which is written to the database every WQS_PERSIST_SHMEM seconds.

• The WISMBD process also makes RPC calls to WIS processes to pass instructions from the BG processes. If the WISMBD process receives an ER_CACHING error from the WIS process it retries the connection a number of times. If the attempt still fails, it requeues the message and writes a message (with ID 1984) to the sw_warn file, which is located in the SWDIR\logs directory.

See TIBCO iProcess Engine System Messages Guide for more information about this message.
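The retry-then-requeue behavior described for the WISMBD process follows a common pattern, sketched below. Everything in the sketch is hypothetical: the exception class stands in for the ER_CACHING error, the number of retries is a parameter because this guide does not state it, and the `requeue` and `warn` callbacks stand in for requeuing the message and writing message 1984 to the sw_warn file.

```python
class CachingError(Exception):
    """Stand-in for an ER_CACHING error returned by a WIS process."""


def deliver(message, call_wis, max_retries, requeue, warn):
    """Try to pass a message to a WIS process, retrying on caching errors.

    Returns True if the call succeeded, False if the message was requeued.
    """
    for _attempt in range(1 + max_retries):  # first attempt plus the retries
        try:
            call_wis(message)
            return True
        except CachingError:
            continue  # the queue is still being cached; try again
    requeue(message)  # give up for now and put the message back
    warn(1984, "WIS still caching; message requeued")
    return False


def always_caching(_message):
    raise CachingError()


requeued, warnings = [], []
ok = deliver("instruction", always_caching, 2, requeued.append,
             lambda msg_id, text: warnings.append(msg_id))
print(ok, requeued, warnings)
```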

The WISCACHE queue attribute does not exist by default. If you wish to use it, you must first create it and then assign it a value on each queue that should use it. To do this:

3. Assign a value of YES to WISCACHE for each queue that you want to be cached when the WIS process first handles it (irrespective of how many work items the queue contains).

All other queues (for which WISCACHE is not set) will be cached either when the WIS process first handles them or when they are first accessed by a client application, depending on the value of the WIS_CACHE_THRESHOLD process attribute.

When the WIS process starts up it caches all the CDQP definitions that are used by the queues it is handling, and uses the cached values when displaying CDQPs in its work queues.

The WIS process obtains the field values of fields that are defined as CDQPs from the pack_data database table.

You can change existing CDQP definitions or create new ones by using the swutil QINFO command. By default, you then have to restart iProcess Engine to allow the WIS process to pick up the changed definitions and update its work queues with them.

However, you can dynamically pick up changes to CDQP definitions without having to restart iProcess Engine, by using the PUBLISH parameter with the QINFO command. This publishes an event that signals that updated CDQP definitions are available.

When the WIS process detects this event, its CDQP update thread wakes up and updates the CDQP definitions for all work items in its queues. Work items are updated in batches, the size of which is determined by the value of the WIS_CDQP_DATA_RECACHE_BATCH process attribute.
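Batched updating of this kind can be illustrated with a simple generator that splits a list of work items into fixed-size groups. This is a generic sketch of batching, not the WIS process's own code; the function name is hypothetical and `batch_size` plays the role of the WIS_CDQP_DATA_RECACHE_BATCH value.

```python
def batches(work_items, batch_size):
    """Yield successive batches of at most batch_size work items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(work_items), batch_size):
        yield work_items[start:start + batch_size]


# Seven hypothetical work items, updated three at a time.
for batch in batches(list(range(7)), 3):
    print(batch)
```

The final batch may be smaller than the configured size when the number of work items is not an exact multiple of it.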

See "Case Data Queue Parameters" in TIBCO iProcess swutil and swbatch Reference Guide for more information about CDQPs and the QINFO command.