Creating a Docker Swarm Cluster in AWS

This procedure describes how to create a Docker Swarm cluster using the CloudFormation template provided by Docker.

- Log into the AWS console.

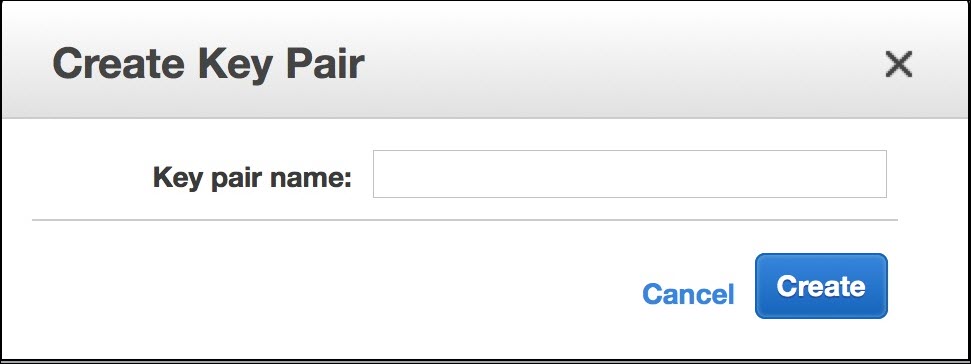

- Create an SSH key pair in the AWS portal and save the *.pem file locally:

The SSH key pair is used to log into the Docker manager node. You can also use an existing AWS key pair.

For more information, see https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-key-pairs.html#having-ec2-create-your-key-pair.
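If you prefer the command line to the console, the key pair can also be created with the AWS CLI. The following is a minimal sketch, assuming the AWS CLI is installed and configured with credentials and a default region. The helper name `create_key_pair` and the key name `swarm-key` are hypothetical and do not come from this procedure.

```shell
# Sketch: create an EC2 key pair with the AWS CLI and save the private key.
# Assumes the AWS CLI is installed and configured (credentials and region).
# create_key_pair is a hypothetical helper name, not part of any tool.
create_key_pair() {
    # $1 = key pair name; the private key is written to ./<name>.pem
    if [ -e "$1.pem" ]; then
        echo "refusing to overwrite existing file: $1.pem" >&2
        return 1
    fi
    # The subshell sets a restrictive umask so the file is created
    # readable by the owner only; a failed call leaves no empty file behind.
    ( umask 077 && aws ec2 create-key-pair \
        --key-name "$1" \
        --query 'KeyMaterial' \
        --output text > "$1.pem" ) || { rm -f "$1.pem"; return 1; }
    # ssh refuses private keys that other users can read.
    chmod 400 "$1.pem"
}
```

For example, `create_key_pair swarm-key` would write `swarm-key.pem` to the current directory. The key pair name is the value you later select for the SSH key in the stack parameters.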

- Go to the Docker for AWS setup & prerequisites website and click the Deploy Docker Community Edition (CE) for AWS (stable) button.

- In the Docker Community Edition (CE) for AWS section, click:

After you log into AWS, the dialog shown in the next step is displayed.

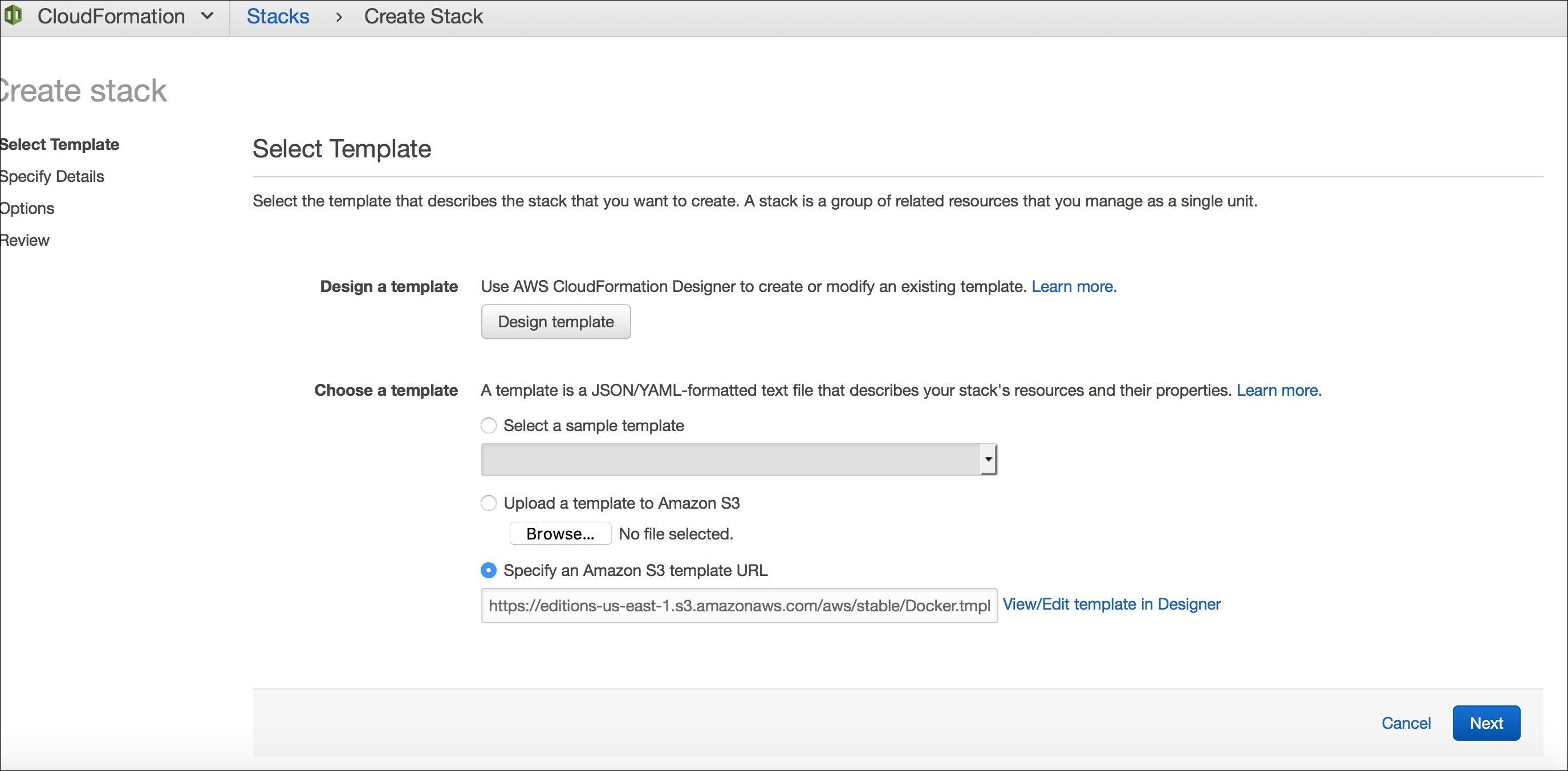

- Create a stack by selecting the third option (Specify an Amazon S3 template URL), then click Next.

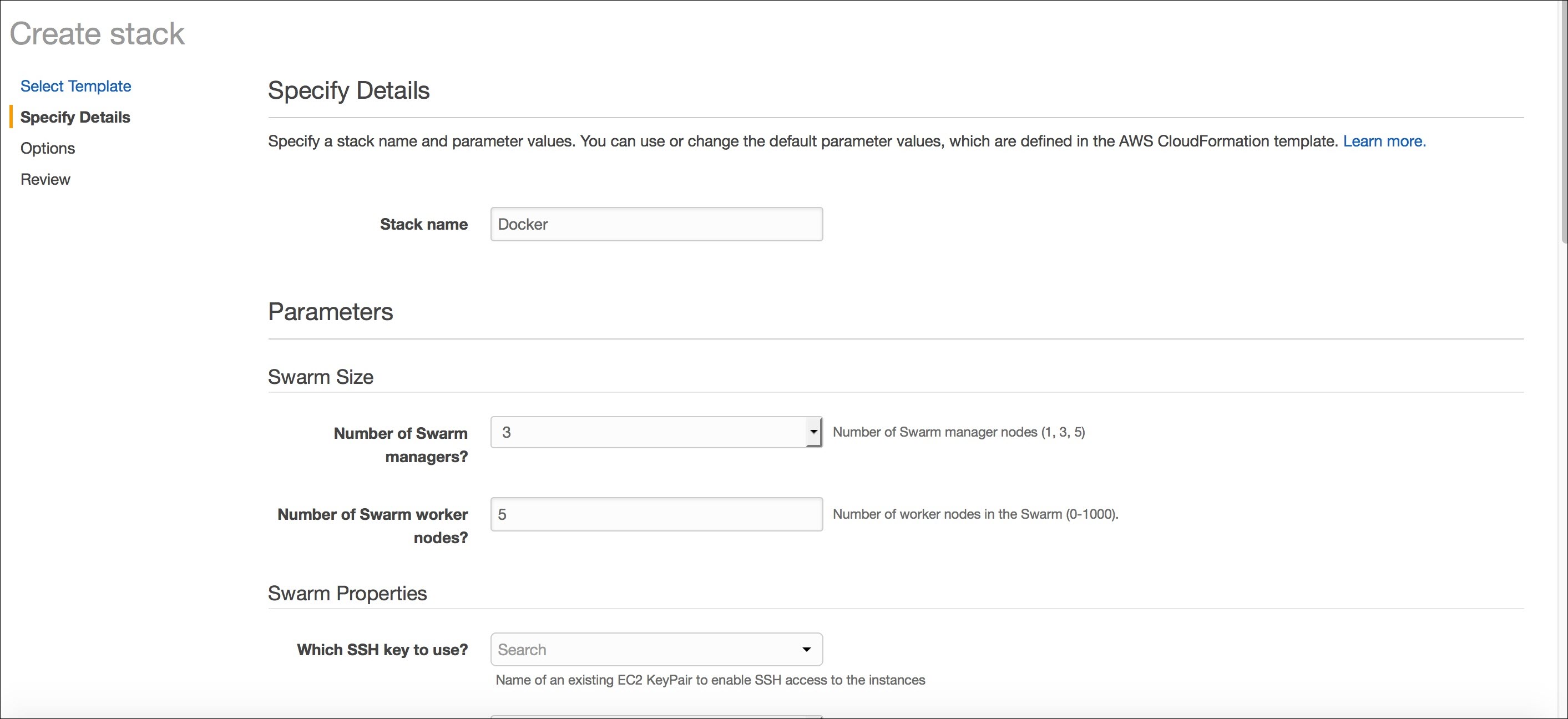

- Specify the stack name, number of swarm managers, worker nodes, instance type, and so on.

Use the name of the key pair that you created earlier in this procedure for "Which SSH key to use".

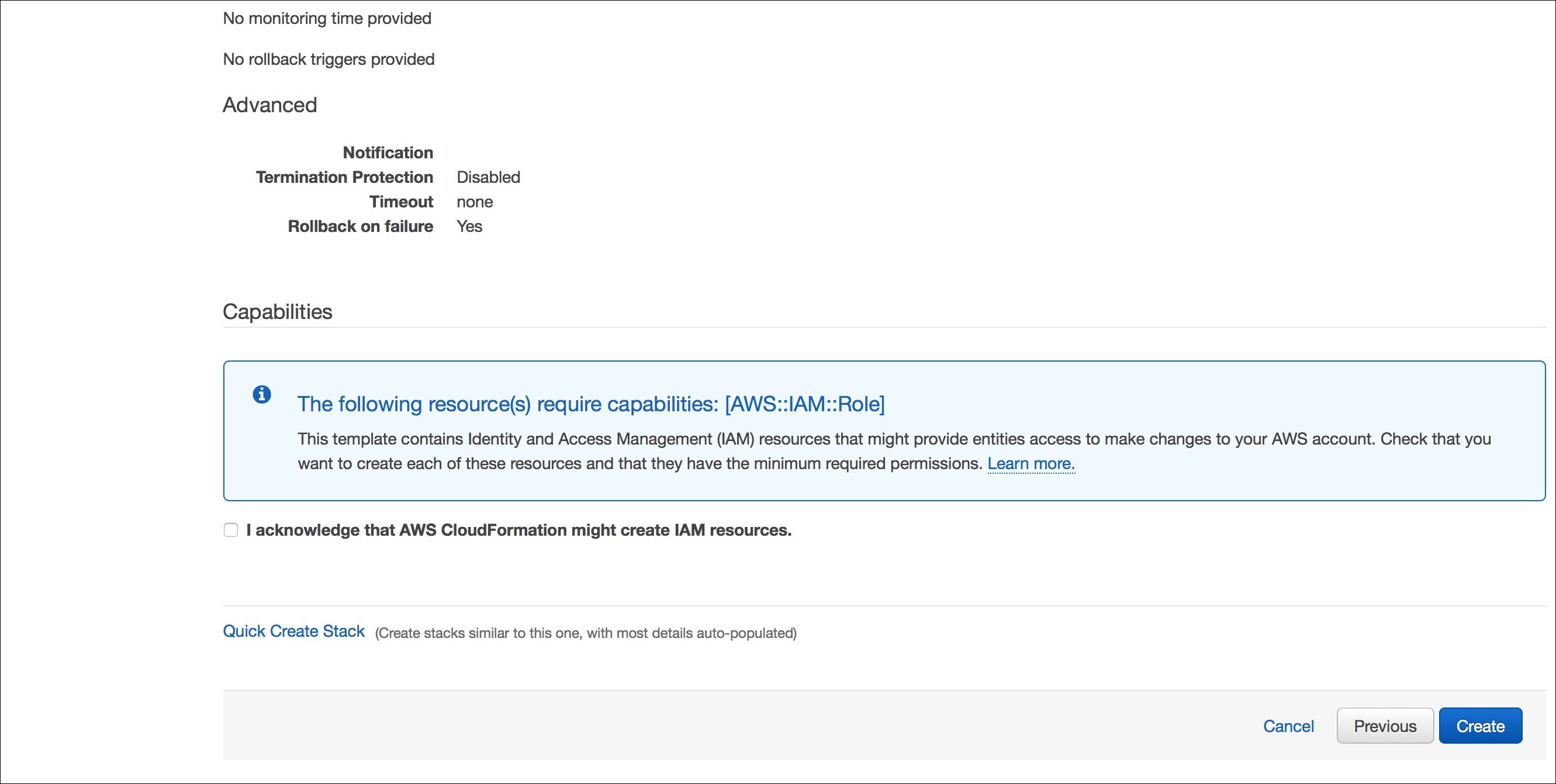

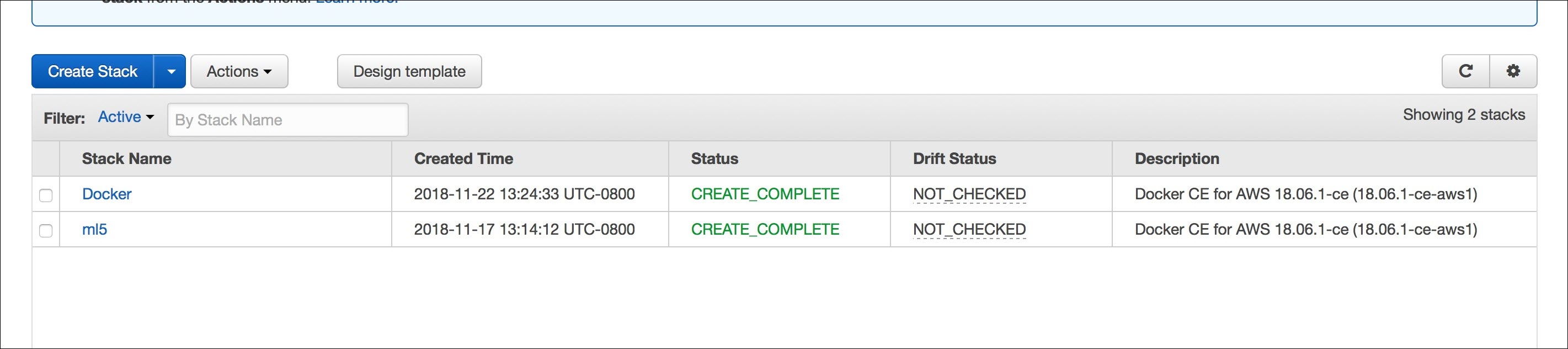

- Accept the acknowledgment, then click Next to create the cluster. When the cluster is created, you will see a screen similar to this:

- Find the IP address of the Docker manager node in your AWS cluster dashboard and connect to it over SSH:

ssh -A docker@<manager_node_IP>

- Copy docker-deploy.tgz (which was downloaded in step 2 of Customizing the Mashery Local Deployment Manifest) to the manager node:

scp docker-deploy.tgz docker@<manager_node_IP>:/home/docker

- Untar the docker-deploy archive.

    ~ $ cd /home/docker/
    ~ $ ls -l
    total 164
    -rw-r--r-- 1 docker docker 164211 Sep 12 17:07 docker-deploy.tgz
    ~ $ tar -xzvf docker-deploy.tgz
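A copy over the network can be interrupted, so it may be worth checking that the archive is readable before extracting it. The following is a minimal sketch of that check. The helper name `extract_archive` is hypothetical.

```shell
# Sketch: verify that an archive is readable, then extract it into a directory.
# extract_archive is a hypothetical helper name.
extract_archive() {
    # $1 = path to the .tgz archive, $2 = directory to extract into
    # Listing the contents first fails early on a truncated or corrupt file.
    tar -tzf "$1" > /dev/null || {
        echo "cannot read archive: $1" >&2
        return 1
    }
    mkdir -p "$2" && tar -xzf "$1" -C "$2"
}
```

On the manager node this could be called as `extract_archive /home/docker/docker-deploy.tgz /home/docker`.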

- Create a dockerlogin.sh file.

- Add the Docker login command generated in step 3 of Customizing the Mashery Local Deployment Manifest.

- Change the access permissions as follows:

chmod 755 dockerlogin.sh

- Execute the file on the Docker manager node.

    ~/docker-deploy/aws/swarm $ ./dockerlogin.sh
    WARNING! Using --password via the CLI is insecure. Use --password-stdin.
    WARNING! Your password will be stored unencrypted in /home/docker/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store
    Login Succeeded

Upon successful login, the ~/.docker/config.json Docker configuration file is also created.
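The first warning in the login output recommends `--password-stdin`. The following is a minimal sketch of a login that reads the password from a file instead of the command line. It assumes your registry accepts a user name and password; the helper name `registry_login`, the registry host, the user name, and the password file are all placeholders that do not come from the generated login command.

```shell
# Sketch: log in with --password-stdin so the password never appears in the
# process list or shell history. registry_login is a hypothetical helper name;
# the registry host, user name, and password file are placeholders.
registry_login() {
    # $1 = registry host, $2 = user name, $3 = file containing only the password
    [ -r "$3" ] || { echo "cannot read password file: $3" >&2; return 1; }
    docker login --username "$2" --password-stdin "$1" < "$3"
}
```

A call might look like `registry_login <docker-registry-host> <user> ~/registry-password.txt`. Docker still stores the credential in ~/.docker/config.json unless a credential helper is configured, so the second warning continues to apply.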

- List the Docker nodes.

    $ docker node ls
    ID                            HOSTNAME                                      STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    f9wmpa5657ayarpyj6tcykxzo     ip-172-31-2-219.us-east-2.compute.internal    Ready    Active                          18.09.2
    axydf7bg383f4nfz1syjiavvk     ip-172-31-10-185.us-east-2.compute.internal   Ready    Active         Reachable        18.09.2
    q54yvmfmz4v7sd5ps3azgo2sq     ip-172-31-16-174.us-east-2.compute.internal   Ready    Active                          18.09.2
    wuexbeu4otat81jm3v2o75x86     ip-172-31-18-54.us-east-2.compute.internal    Ready    Active                          18.09.2
    14mw5sbkfbi94zdv3pjflemfx     ip-172-31-21-236.us-east-2.compute.internal   Ready    Active         Reachable        18.09.2
    p9r9mya9f29yg08ifpg5ab9ut *   ip-172-31-32-254.us-east-2.compute.internal   Ready    Active         Leader           18.09.2
    fdkjxs3eeau2czyarbp7yj8mf     ip-172-31-33-4.us-east-2.compute.internal     Ready    Active                          18.09.2
    letp6ywkcnxf68yc92j7dbbze     ip-172-31-40-182.us-east-2.compute.internal   Ready    Active                          18.09.2
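When you only need the hostnames, for example to paste them into a placement constraint, `docker node ls` can filter by role and print a single column. The following is a minimal sketch that must run on a manager node. The helper name `list_node_hostnames` is hypothetical.

```shell
# Sketch: print only the hostnames of swarm nodes with a given role.
# Must be run on a manager node. list_node_hostnames is a hypothetical name.
list_node_hostnames() {
    # $1 = node role to filter on: "manager" or "worker"
    docker node ls --filter "role=$1" --format '{{.Hostname}}'
}
```

For example, `list_node_hostnames worker` prints one worker hostname per line.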

- From the available Docker nodes, choose the hostnames where you want the stateful set deployed, then update the hostname locations in the stateful set yml files.

    ~/docker-deploy/aws/swarm/manifest-aws-swarm $ vi tmgc-nosql.yml
    ~/docker-deploy/aws/swarm/manifest-aws-swarm $ vi tmgc-sql.yml
    ~/docker-deploy/aws/swarm/manifest-aws-swarm $ vi tmgc-cache.yml
    ~/docker-deploy/aws/swarm/manifest-aws-swarm $ vi tmgc-log.yml
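As an alternative to editing each file by hand, the hostname in the placement constraint can be rewritten with `sed`. The following is a minimal sketch, assuming each file contains a constraint line of the form `- node.hostname == <hostname>`. The helper name `set_node_hostname` is hypothetical.

```shell
# Sketch: rewrite the node.hostname placement constraint in a stack file.
# set_node_hostname is a hypothetical helper name. It assumes the file has a
# constraint line of the form "- node.hostname == <hostname>".
set_node_hostname() {
    # $1 = path to the yml file, $2 = hostname to pin the service to
    grep -q 'node\.hostname == ' "$1" || {
        echo "no node.hostname constraint found in $1" >&2
        return 1
    }
    # Keep a .bak copy of the original, then replace everything after "== ".
    sed -i.bak -E "s|(node\.hostname == ).*|\1$2|" "$1"
}
```

A call such as `set_node_hostname tmgc-log.yml ip-172-31-2-219.us-east-2.compute.internal` leaves the original file in `tmgc-log.yml.bak`, which makes the change easy to compare or revert.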

For example, to deploy the log service on ip-172-31-2-219.us-east-2.compute.internal, set the constraint node.hostname == ip-172-31-2-219.us-east-2.compute.internal in tmgc-log.yml:

tmgc-log.yml

    version: "3.3"
    services:
      log:
        image: <docker-registry-host>/tml/v5.2.0.1:tml-log-v5.2.0.1
        deploy:
          replicas: 1
          restart_policy:
            condition: on-failure
          placement:
            constraints:
              - node.hostname == ip-172-31-2-219.us-east-2.compute.internal
        env_file:
          - tmgc-log.env
        secrets:
          - source: log_config
            target: /opt/mashery/containeragent/resources/properties/tml_log_properties.json
          - source: cluster-property-vol
            target: /opt/mashery/containeragent/resources/properties/tml_cluster_properties.json
          - source: zones-property-vol
            target: /opt/mashery/containeragent/resources/properties/tml_zones_properties.json
        volumes:
          - logvol:/mnt
        networks:
          - ml5
    volumes:
      logvol:
        external:
          name: '{{.Service.Name}}-{{.Task.Slot}}-vol'
    secrets:
      log_config:
        file: ./tml_log_properties.json
      cluster-property-vol:
        file: ./tml_cluster_properties.json
      zones-property-vol:
        file: ./tml_zones_properties.json
    networks:
      ml5:
        external: true

- If the Swarm cluster is created successfully, create an overlay network named "ml5" for container networking by executing the following command on the manager node:

docker network create -d overlay --attachable ml5
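Running the create command a second time fails because the network already exists. If you script this step, a guard makes it safe to repeat. The following is a minimal sketch that must run on a manager node. The helper name `ensure_overlay_network` is hypothetical.

```shell
# Sketch: create the overlay network only if it does not already exist.
# Must be run on a manager node. ensure_overlay_network is a hypothetical name.
ensure_overlay_network() {
    # $1 = network name, for example ml5
    if docker network inspect "$1" > /dev/null 2>&1; then
        echo "network $1 already exists"
    else
        docker network create -d overlay --attachable "$1"
    fi
}
```

Calling `ensure_overlay_network ml5` creates the network on the first run and only reports that it exists on later runs.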

Copyright © Cloud Software Group, Inc. All rights reserved.