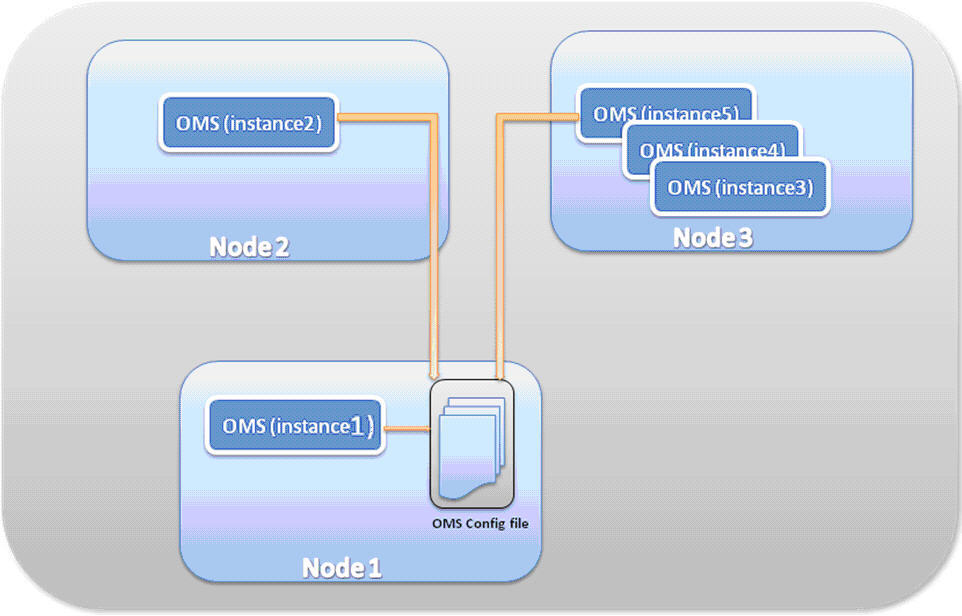

Multi-Node Multi-Instance Topology

The multi-node multi-instance (horizontal scaling) deployment topology runs multiple instances of the microservices on multiple nodes (machines). Its main purpose is to increase the overall processing capacity of the TIBCO Order Management - Long Running server-side components, namely omsServer and jeoms. The default microservice instance is duplicated on the main node and on the other nodes to run multiple instances.

Once one node is configured, the other nodes download the configuration from the database. This feature is available only when the database configuration option is selected, which is the default. If it is disabled, the configuration must be copied to the other nodes manually.

The following steps describe how to set up this deployment topology.

Prerequisites

- The setup for the Single-node Multi-instance deployment topology is already in place and working on the main node on which TIBCO Order Management - Long Running is installed.

- All the required underlying software, such as Java, and the libraries referenced by the microservices, such as the Oracle JDBC driver and the Enterprise Message Service libraries, are already available on the additional nodes. All the corresponding environment variables are set and exported.
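A quick preflight check on each additional node can help confirm these prerequisites before any members are created. The following is a minimal Python sketch under stated assumptions: the list of required variables (`OM_HOME`, which this guide uses, plus `JAVA_HOME` as an assumed example) is illustrative and should be replaced with the variables your microservices actually reference, and the sketch only checks that a `java` executable is on the PATH. It does not verify the Oracle JDBC driver or the Enterprise Message Service libraries.

```python
import os
import shutil
from typing import List, Mapping, Optional

# Hypothetical list: OM_HOME appears in this guide; JAVA_HOME is an assumed example.
REQUIRED_VARS = ["OM_HOME", "JAVA_HOME"]


def preflight(required: List[str], env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of problems; an empty list means the basic checks passed."""
    env = os.environ if env is None else env
    # Variables that are missing or set to an empty string.
    problems = [f"{name} is not set" for name in required if not env.get(name)]
    # Look up java on the PATH of the given environment (falls back to the process PATH).
    if shutil.which("java", path=env.get("PATH")) is None:
        problems.append("java not found on PATH")
    return problems


if __name__ == "__main__":
    for problem in preflight(REQUIRED_VARS):
        print("MISSING:", problem)
```

Passing an explicit `env` mapping keeps the check testable; on a real node, call it without arguments so it inspects the current process environment.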

Creating New Cluster Members

- Access the TIBCO Order Management - Long Running Configurator GUI application in a supported browser through the HTTP interface of the default Configurator microservice instance on the main node, using the URL http://<HOST>:<PORT>.

- Create additional members to be run on additional nodes by cloning the existing members. The easiest way is to clone each existing member once and give each clone a new member name. Because the new members run on a different node, their ports can be kept as-is.

For example, assume that there are 10 existing members, namely Member1, Member2, ..., Member10, in the Single-node Single-instance topology. Clone Member1 and rename the cloned member to Member11.

- The port numbers need not be changed for Member11 in the Configurator. Because Member11 runs on a different node, its ports, although the same, do not conflict with those of Member1. Follow the same procedure for the remaining members.

- After the required number of additional members for the other nodes has been created in the configuration files, the other nodes download the configuration from the database, provided the database configuration is selected.

- Make as many copies of the service directories ($OM_HOME/roles/omsServer, $OM_HOME/roles/ope, $OM_HOME/roles/aopd, and so on) as are needed for the required number of instances; the copies can be placed at any location. In each copy, specify the admin database details in the configDBrepo.properties file and change the port number accordingly.

- Set the $OM_CONFIG_HOME environment variable to the path of the copied config directory, $OM_HOME/roles/configurator/standalone/config. This step is required only for the Configurator service; the other services do not need this variable.

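The cloning rule in the steps above (one clone per existing member, a new name, unchanged ports) can be sketched as a small planning helper. This is a minimal sketch, assuming a hypothetical in-memory record for each member (name, node, ports) and consecutive member numbering; the Configurator performs the actual cloning, and the node names and port values are placeholders. The second function checks the reason the ports can stay unchanged: a port clash matters only between members on the same node.

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class Member:
    # Hypothetical record; the Configurator stores members in its own format.
    name: str
    node: str
    ports: Tuple[int, ...]


def clone_members(existing: List[Member], new_node: str) -> List[Member]:
    """Clone each member once: MemberN becomes Member(N + count) on new_node, same ports."""
    offset = len(existing)
    clones = []
    for member in existing:
        number = int(member.name[len("Member"):])
        clones.append(replace(member, name=f"Member{number + offset}", node=new_node))
    return clones


def port_conflicts(members: List[Member]) -> List[Tuple[str, int]]:
    """Return (node, port) pairs claimed by more than one member on the same node."""
    seen = set()
    conflicts = []
    for member in members:
        for port in member.ports:
            key = (member.node, port)
            if key in seen:
                conflicts.append(key)
            else:
                seen.add(key)
    return conflicts


if __name__ == "__main__":
    # Placeholder ports: 8081..8090 for Member1..Member10 on the main node.
    existing = [Member(f"Member{i}", "node-a", (8080 + i,)) for i in range(1, 11)]
    clones = clone_members(existing, "node-b")
    print(clones[0].name)                     # Member11
    print(port_conflicts(existing + clones))  # []
```

If a clone were placed on the same node as its source, `port_conflicts` would report the shared ports, which is why copies on a single node need new port numbers while copies on another node do not.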

Adding Cluster Members to the Database

The entries for the additional members to be run on other nodes must be added to the DOMAINMEMBERS table in the same way as explained earlier for the Single-node Multi-instance topology.

Creating Additional Microservice Instances

- Create additional microservice instances on other nodes by copying the existing microservice directories from the main node.

The easiest way is to copy each directory and change only the member's suffix number in the directory name.

- The port numbers in $OM_HOME/roles/omsServer/standalone/config/application.properties do not have to be changed for Member11. Because it runs on a different node, its ports, although the same, do not conflict with those of Member1. Follow the same procedure for the remaining instances.

- For static allocation of member IDs to nodes, set the system properties NODE_ID and DOMAIN_ID. For dynamic allocation, these properties do not need to be set.
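The copy-and-rename step and the optional static IDs can be sketched in Python. This is a minimal sketch under stated assumptions: the instance directory names (for example `omsServer1`) are hypothetical and are assumed to end in the member's suffix number, and passing `NODE_ID` and `DOMAIN_ID` as Java `-D` system property flags is an assumption about how your start scripts accept them. The sketch copies a directory locally under its new name; transferring it to the other node with your usual file-copy tooling is not shown.

```python
import re
import shutil
from pathlib import Path
from typing import List, Optional


def renamed(dir_name: str, new_suffix: int) -> str:
    """Replace the trailing member number, e.g. omsServer1 -> omsServer11 (hypothetical names)."""
    if not re.search(r"\d+$", dir_name):
        raise ValueError(f"{dir_name!r} has no numeric member suffix")
    return re.sub(r"\d+$", str(new_suffix), dir_name)


def copy_instance(src: Path, dest_parent: Path, new_suffix: int) -> Path:
    """Copy an instance directory under dest_parent, renamed with the new member suffix."""
    dest = dest_parent / renamed(src.name, new_suffix)
    shutil.copytree(src, dest)  # raises if dest already exists, so nothing is overwritten
    return dest


def node_properties(node_id: Optional[int] = None, domain_id: Optional[int] = None) -> List[str]:
    """Build -D flags for static allocation; return no flags for dynamic allocation."""
    if node_id is None and domain_id is None:
        return []  # dynamic allocation: leave NODE_ID and DOMAIN_ID unset
    if node_id is None or domain_id is None:
        raise ValueError("static allocation needs both NODE_ID and DOMAIN_ID")
    return [f"-DNODE_ID={node_id}", f"-DDOMAIN_ID={domain_id}"]


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "omsServer1"
        (src / "config").mkdir(parents=True)
        print(copy_instance(src, Path(tmp), 11).name)  # omsServer11
    print(node_properties())      # []
    print(node_properties(2, 1))  # ['-DNODE_ID=2', '-DDOMAIN_ID=1']
```

Requiring both IDs together mirrors the step above, which sets NODE_ID and DOMAIN_ID as a pair for static allocation and leaves both unset for dynamic allocation.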

Sanity Test

The sanity test for the Multi-node Multi-instance topology is the same as the one explained for the Single-node Multi-instance topology. Here, the additional Orchestrator instances running on the other nodes join the ORCH-DOMAIN cluster. Any one of the instances acts as the Cluster Manager, and all the others act as Workers.
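The expected end state of the sanity test can be expressed as a simple check: across all Orchestrator instances in ORCH-DOMAIN, exactly one has the Cluster Manager role and every other instance is a Worker. This is a hypothetical sketch; how you obtain each instance's role (for example from logs or a monitoring view) is not covered here, so the input is assumed to be a plain mapping from an instance name to a role string, and the instance names are placeholders.

```python
from collections import Counter
from typing import Dict


def check_orch_cluster(roles: Dict[str, str]) -> str:
    """Return the Cluster Manager's name if exactly one exists and all others are Workers."""
    counts = Counter(roles.values())
    unexpected = set(counts) - {"Cluster Manager", "Worker"}
    if unexpected:
        raise ValueError(f"unexpected roles: {sorted(unexpected)}")
    if counts["Cluster Manager"] != 1:
        raise ValueError(f"expected 1 Cluster Manager, found {counts['Cluster Manager']}")
    return next(name for name, role in roles.items() if role == "Cluster Manager")


if __name__ == "__main__":
    observed = {
        "node-a/orch1": "Worker",
        "node-b/orch1": "Cluster Manager",  # any one instance, on any node, may take this role
        "node-b/orch2": "Worker",
    }
    print(check_orch_cluster(observed))  # node-b/orch1
```

The check deliberately does not expect the Cluster Manager on the main node, since any instance in the cluster can take that role.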