Handling Bad Data in Hadoop

Before processing each line of data in a MapReduce job, Team Studio first checks to see if the data is "clean".

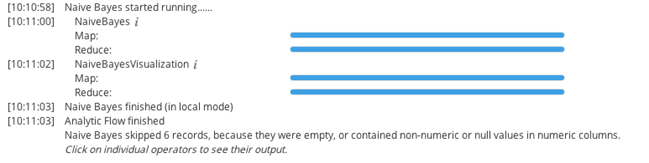

A row is considered clean if, for each column being used for this operator, the corresponding value in the row is of the correct data type. If a row is dirty, it is filtered out. At the end of a run, the console indicates how many rows were filtered out due to invalid data.

In general, all models filter out bad data as described above. However, predictors do not filter out the bad data. Instead, predictors include the row but do not make a prediction in this case.

Related concepts

Copyright © Cloud Software Group, Inc. All rights reserved.