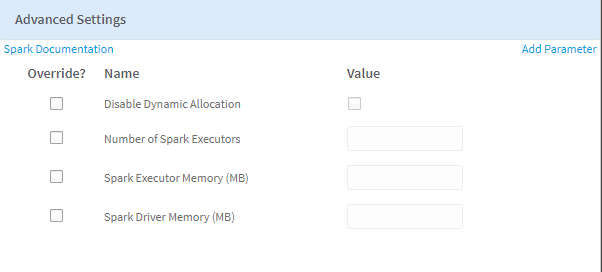

Advanced Settings Dialog Box

When Spark is enabled for an operator, you can apply the Automatic configuration, which sets the Spark parameters to default values for running the operator. Alternatively, you can edit these parameters directly.

To edit these parameters, open the operator parameter dialog box, select No for Advanced Settings Automatic Optimization, and then click Edit Settings. Set your desired configuration in the resulting Advanced Settings dialog box.

Note: The available options depend on the type of operator. The following table shows the settings that apply to all operators for which you can enable Spark. For information about additional settings, see the help for the specific operator.

- If you select a check box in the Override? column, you can specify a value for the corresponding setting. This value supersedes any default value set by your cluster or workflow variables. If you do not provide a value, the default value is used.

- If you click Add Parameter, you can provide custom Spark parameters. This option gives you more control over tuning your Spark jobs. See Spark Autotuning for more information.
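The override behavior described above can be pictured with a short Python sketch. This is only an illustration of the rule, not the product's implementation: the function name `resolve_spark_settings`, the data layout, and the sample values are hypothetical. The property names (`spark.executor.memory`, `spark.executor.cores`, `spark.sql.shuffle.partitions`) are standard Apache Spark configuration properties and are used here only as examples of what a setting or custom parameter might look like.

```python
def resolve_spark_settings(defaults, overrides, custom=None):
    """Return the effective Spark settings for one operator run.

    defaults  -- values supplied by the cluster or workflow variables
    overrides -- {name: (override_checked, value)} as entered in the dialog box
    custom    -- extra parameters added with Add Parameter
    """
    effective = dict(defaults)
    for name, (checked, value) in overrides.items():
        # An override takes effect only when its box is selected AND a value
        # was entered; otherwise the default value is kept.
        if checked and value not in (None, ""):
            effective[name] = value
    # Custom parameters are added last. How the product resolves a name
    # collision is not stated on this page, so this ordering is an assumption.
    effective.update(custom or {})
    return effective


# Hypothetical sample values.
defaults = {"spark.executor.memory": "4g", "spark.executor.cores": "2"}
overrides = {
    "spark.executor.memory": (True, "8g"),  # box selected, value provided
    "spark.executor.cores": (True, ""),     # box selected, no value provided
}
custom = {"spark.sql.shuffle.partitions": "200"}

settings = resolve_spark_settings(defaults, overrides, custom)
```

In this sketch, `spark.executor.memory` takes the overridden value, `spark.executor.cores` keeps its default because no alternative value was given, and the custom parameter is added alongside them.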


Copyright © Cloud Software Group, Inc. All rights reserved.