Connecting TIBCO Data Science – Team Studio to Spark Cluster

Apache Spark is a MapReduce-like cluster computing framework designed for low-latency iterative jobs and interactive use from an interpreter. It provides clean, language-integrated APIs in Scala, Java, Python, and R, along with an optimized engine that supports general execution graphs. It also supports a wide range of higher-level tools, such as Spark SQL for processing SQL and structured data, pandas API on Spark for pandas workloads, MLlib for machine learning, GraphX for processing graphs, and Structured Streaming for incremental computation and stream processing.

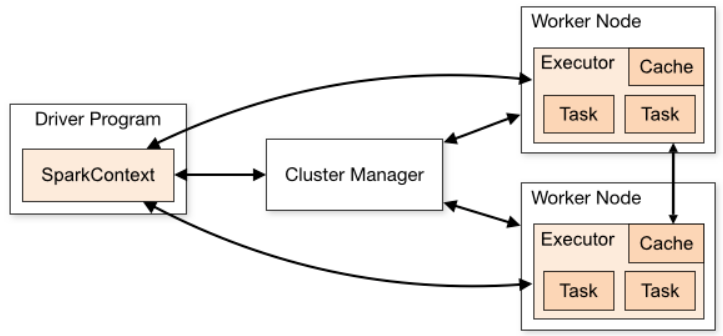

A Spark cluster combines a driver program, a cluster manager, and worker nodes that work together to complete tasks. The SparkContext object in the driver program coordinates the processes across the cluster. It can connect to various cluster managers, which allocate resources among applications. Once connected, Spark acquires executors on nodes in the cluster; executors are processes that run computations and store data for your application. Spark then sends your application code (specified by JAR or Python files supplied to SparkContext) to the executors, and finally SparkContext sends tasks to the executors for execution.
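The connection sequence described above can be illustrated with a short PySpark sketch. TIBCO Data Science – Team Studio performs these steps on your behalf, so this is an illustration only and not part of the product configuration. The helper names `build_spark_settings` and `connect`, and the executor sizes, are assumptions chosen for the example; `connect` additionally assumes that the `pyspark` package is installed and that the cluster is reachable.

```python
from typing import Dict


def build_spark_settings(master_url: str, app_name: str) -> Dict[str, str]:
    """Collect the Spark properties the driver program needs to reach the cluster."""
    return {
        "spark.master": master_url,        # which cluster manager to contact
        "spark.app.name": app_name,        # name shown in the cluster manager UI
        "spark.executor.memory": "2g",     # assumed size, adjust for your cluster
        "spark.executor.cores": "2",       # assumed size, adjust for your cluster
    }


def connect(settings: Dict[str, str]):
    """Start a session and return its SparkContext (requires pyspark)."""
    # Imported lazily so the settings helper can be used without pyspark.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder
    for key, value in settings.items():
        builder = builder.config(key, value)
    # getOrCreate() contacts the cluster manager, which allocates executors.
    spark = builder.getOrCreate()
    return spark.sparkContext
```

For example, `connect(build_spark_settings("spark://spark-master.example.com:7077", "demo"))` would return a SparkContext attached to a standalone master at that hypothetical host, after which any job submitted through it is split into tasks and sent to the executors.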

The preceding figure shows these components of the Spark cluster.

TIBCO Data Science – Team Studio 7.0.0 supports Spark cluster version 3.2, which can only be used with Apache Spark 3.2 workflows. These workflows consist of modern operators that use Spark SQL for data processing.

The file storage system lets you store large data sets across many servers rather than on a single server. The file storage can be either a file system associated with the Spark cluster (local) or one outside the Spark cluster (remote). An Apache Spark 3.2 cluster does not have a dedicated file storage system. In either case, the cluster can only use TIBCO® Data Virtualization (TIBCO® DV) as its file storage system.

When a file or data is stored outside of the Spark cluster, the data is copied to the Spark cluster so that Spark can perform the analytic processing. By contrast, when a file or data is stored in a database connected to TIBCO® DV, the data is already available to the Spark cluster for analytic processing. Because no data is copied, transferred, or moved in this case, performance is better.

Spark Cluster Manager

TIBCO Data Science – Team Studio can be connected to several different Spark systems using different Spark cluster managers. The following Spark cluster manager is supported in the 7.0.0 version of TIBCO Data Science – Team Studio:

There are three possible configurations when using the Spark standalone cluster manager:

- When TIBCO Data Science – Team Studio, Spark standalone cluster, and TIBCO® DV are running on the same server. This is the simplest configuration, but since they are sharing the same resources, it is not recommended for big data environments.

- When TIBCO Data Science – Team Studio and Spark standalone cluster are on the same server, but TIBCO® DV is on a different server. In this case, a network file system is shared between the two servers and mounted at the same mount point.

- When TIBCO Data Science – Team Studio, Spark standalone cluster, and TIBCO® DV are on different servers. In this case, the same network file system must be mounted on the TIBCO® DV and Spark cluster servers.
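For the two multi-server configurations above, it can help to confirm on each server that the shared network file system is mounted where you expect it. The following is a minimal sketch using only the Python standard library; the function name `check_shared_mount` and the path `/mnt/shared` in the usage note are hypothetical, and the script is not a TIBCO tool.

```python
import os
from typing import List


def check_shared_mount(mount_point: str) -> List[str]:
    """Return a list of problems found with the shared mount on this host."""
    problems = []
    if not os.path.isdir(mount_point):
        problems.append(f"{mount_point} does not exist or is not a directory")
    elif not os.path.ismount(mount_point):
        # The directory exists locally, but no file system is mounted on it.
        problems.append(f"{mount_point} exists but is not a mount point")
    return problems
```

Running `check_shared_mount("/mnt/shared")` with the same path on the TIBCO® DV server and on the Spark cluster server should return an empty list on both if the file system is mounted at the same mount point.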

A YARN cluster manager is used by Cloudera CDH / CDF and Amazon EMR. These are existing clusters that have an associated file system (HDFS in the case of Cloudera and S3 in the case of EMR). This associated file system serves as the shared file system, and TIBCO® DV is connected to it.
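At the Spark level, the choice of cluster manager is expressed through the master URL: a standalone cluster is addressed as `spark://HOST:PORT` (7077 is the default master port), while YARN is addressed simply as `yarn`, with the cluster location taken from the Hadoop configuration on the client. The sketch below maps the two cluster managers discussed here to their master URLs; the function name `spark_master_url` and the host name in the usage note are assumptions for illustration, and how Team Studio stores this value internally is not covered here.

```python
def spark_master_url(manager: str, host: str = "localhost", port: int = 7077) -> str:
    """Return the Spark master URL for a standalone or YARN cluster manager."""
    kind = manager.strip().lower()
    if kind == "standalone":
        # Standalone masters are addressed directly by host and port.
        return f"spark://{host}:{port}"
    if kind == "yarn":
        # YARN resolves the ResourceManager from the Hadoop configuration.
        return "yarn"
    raise ValueError(f"unsupported cluster manager: {manager!r}")
```

For instance, `spark_master_url("standalone", "spark-master.example.com")` yields `spark://spark-master.example.com:7077`, and `spark_master_url("yarn")` yields `yarn`.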