This topic describes how to build a StreamSQL application in StreamBase Studio by recreating the Market Feed Monitor application available in the StreamBase Studio SB Demos perspective. If you load this example from the SB Demos perspective, it creates a project named demo_Market Feed Monitor in the Package Explorer within the SB Authoring perspective. From the SB Test/Debug perspective, either the EventFlow or StreamSQL version of the application can be run.

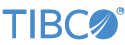

Before developing the StreamSQL application, let's review the data processing steps that must be performed. The EventFlow version of the application, shown in the following figure, provides a straightforward approach to viewing the entire application in a single glance. It's a good idea to run the provided Market Feed Monitor demo before working through the remainder of this tutorial.

Input to the application is provided through an input stream named TicksIn and the schema associated with the incoming tuples includes

five fields:

-

Symbol, a string field of maximum length 25 characters that contains the symbol for a stock being traded;

-

SourceTimestamp, a timestamp field containing the time at which the tuple was generated by the source application;

-

BidPrice, a double field containing the price currently being offered by buyers of this stock;

-

AskPrice, a double field containing the price currently being sought by sellers of this stock; and

-

FeedName, a string field of maximum length 4 that contains the name of the stock feed service that submitted this tuple.

To duplicate this step in a StreamSQL application, use the CREATE INPUT STREAM statement to define an input stream and its schema.

Immediately after receiving each tuple, the EventFlow application uses a Map operator

named LocalTime to add a timestamp field to the stream. This field contains the

system time on the computer running the application. To duplicate this step in a

StreamSQL application, use the CREATE STREAM statement to define a named stream with

an additional field. For consistency with the EventFlow application, name this

additional field LocalTime. The StreamBase function

now() can be used to obtain the system time. A named

stream is only accessible by other statements within this StreamSQL application and

cannot, therefore, be accessed by network client applications such as applications

that submit and retrieve tuples from Input and output streams.

Next, a field-based Aggregate operator, TicksPerSecond,

executes two aggregate functions over a one second interval. The first function

obtains the system time at the beginning of the one second interval, while the second

function determines the number of tuples that passed through the operator during the

one second interval. Separate calculations are performed for each stock feed service.

The output stream from this operator includes three fields, but only one of these,

FeedName, is derived from the input stream. The other

two fields are derived from the aggregate functions executed by this operator. Since

the other fields in the original input stream are not used by the application, it

would have been slightly more efficient to use the preceding Map operator to drop

these fields from the stream and not pass them to this Aggregate operator. To

duplicate this step in a StreamSQL application, employ a CREATE WINDOW statement, a

SELECT statement, and a CREATE STREAM statement. However, it is easy to combine these

statements, which offers a simplification.

After calculating the number of tuples submitted during each one second interval, use

another field-based Aggregate operator, Mean20s, to calculate some stream statistics

over a twenty second interval. The output stream from this operator includes the

FeedName field and four fields derived from aggregate

functions executed by this operator. To duplicate this step in a StreamSQL

application, you again need to employ a CREATE WINDOW statement, a SELECT statement,

and a CREATE STREAM statement.

Finally, output tuples from the Mean20s Aggregate operator are sent to both the

TickStats output stream, where they are available to

external client applications, and to the Map and Filter operators, SetThreshold and TickFallOffFilter,

which generate an alert that is available at the TickFallOffAlert output stream. Within a StreamSQL application, a

CREATE OUTPUT STREAM statement duplicates the EventFlow application's TickStats output stream. There are several ways to replicate the

functionality of the Map and Filter operators and the alternative output stream.

Perhaps the most elegant approach is to use a stream valued expression (also called a

subquery) and the arrow operator to pass results between subqueries.

Start StreamBase Studio. See the Studio Reference Guide for instructions on using Studio and switching between perspectives.

In StreamBase Studio, go to the SB Demos perspective

-

Switch to the SB Demos perspective.

-

In the Select a demo drop-down list, select Financial - Market Feed Monitor.

-

Click .

-

Instead of completing the demo, switch to the SB Authoring perspective for the next steps.

Add a StreamSQL file to the demo project folder.

-

Right-click the project folder demo_Financial - Market Feed Monitor

-

Select → .

-

In the New StreamBase StreamSQL dialog, enter a unique file name, such as

tutorial_StreamSQL -

Click .

StreamBase Studio creates an empty file named tutorial_StreamSQL.ssql in the demo project folder and opens it the

StreamSQL editor.

When you open a StreamSQL file in StreamBase Studio, the canvas becomes an intelligent text editor into which you enter your StreamSQL statements. The editor provides syntax checking, pop-up help, and content assistance, which is a pop-up listing of entries that are valid at the current point in the file. As you enter content, syntax errors are described in the Typecheck Errors view. With each statement, the syntax checker flags the statement until it is complete, so use these warnings as a guide to completing the statement. You can review the full syntax for each statement in the StreamSQL Guide.

Now enter the content into the StreamSQL file you just created, as shown in the following sections. A completed version of the StreamSQL file is included below.

In a single StreamSQL statement, declare the input stream and its associated tuple schema. You must define a schema that corresponds to the content of the tuples that will be submitted to this stream. The tuple's structure is defined by the developer of the client application that interacts with a StreamBase application.

CREATE INPUT STREAM TicksIn (

Symbol string,

SourceTimestamp timestamp,

BidPrice double,

AskPrice double,

FeedName string

);

Create an intermediate stream that adds a field to contain the local time. This parallels the Map operator in the EventFlow version of this application.

CREATE STREAM TicksWithTime AS

SELECT *, now() AS LocalTime FROM TicksIn;

Create an aggregate stream that parallels the Aggregate operator in the EvenfFlow

version as a one-second window over the LocalTime

field.

CREATE STREAM TicksPerSecond AS

SELECT openval() AS StartOfTimeSlice, count() AS

NumberTicks,FeedName

FROM TicksWithTime [SIZE 1 ON LocalTime PARTITION BY FeedName]

GROUP BY FeedName;

This block combines creating an output stream and using an aggregate into one

statement. Like the Mean20s Aggregate operator in the EventFlow version, this block

makes a 20-second overlapping window on the StartOfTimeSlice grouped by FeedName.

CREATE OUTPUT STREAM TickStats AS

SELECT openval() AS StartOfTimeSlice,

avg(NumberTicks) AS AvgTicksPerSecond,

stdev(NumberTicks) AS StdevTicksPerSecond,

lastval(NumberTicks) AS LastTicksPerSecond,

FeedName

FROM TicksPerSecond [SIZE 20 ADVANCE 1 ON StartOfTimeSlice

PARTITION BY FeedName]

GROUP BY FeedName;

This statement adds the AlertThreshold field to the

stream. This is parallel to adding a field using a Map operator in EventFlow.

CREATE STREAM SetThreshold AS

SELECT *,.75 AS AlertThreshold FROM TickStats;

Finally, combine a filter with creating an output stream, using a WHERE clause to find ticks that are less than a certain threshold.

CREATE OUTPUT STREAM TickFallOffAlert AS

SELECT * FROM SetThreshold

WHERE LastTicksPerSecond < AvgTicksPerSecond * AlertThreshold;

The following combines the steps above into a single StreamSQL application.

CREATE INPUT STREAM TicksIn (

Symbol string,

SourceTimestamp timestamp,

BidPrice double,

AskPrice double,

FeedName string

);

CREATE STREAM TicksWithTime AS

SELECT *, now() AS LocalTime FROM TicksIn;

CREATE STREAM TicksPerSecond AS

SELECT openval() AS StartOfTimeSlice, count() AS

NumberTicks,FeedName

FROM TicksWithTime [SIZE 1 ON LocalTime PARTITION BY FeedName]

GROUP BY FeedName;

CREATE OUTPUT STREAM TickStats AS

SELECT openval() AS StartOfTimeSlice,

avg(NumberTicks) AS AvgTicksPerSecond,

stdev(NumberTicks) AS StdevTicksPerSecond,

lastval(NumberTicks) AS LastTicksPerSecond,

FeedName

FROM TicksPerSecond [SIZE 20 ADVANCE 1 ON StartOfTimeSlice

PARTITION BY FeedName]

GROUP BY FeedName;

CREATE STREAM SetThreshold AS

SELECT *,.75 AS AlertThreshold FROM TickStats;

CREATE OUTPUT STREAM TickFallOffAlert AS

SELECT * FROM SetThreshold

WHERE LastTicksPerSecond < AvgTicksPerSecond * AlertThreshold;

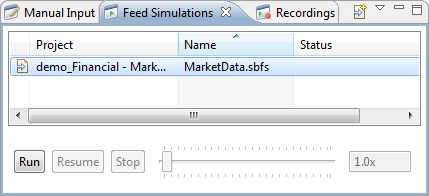

Running the application depends on the ability to rapidly submit a large number of tuples to the input stream. This makes it impractical to test this application using Studio's Manual Input view, so we use a feed simulation and a data file. We will use StreamBase Studio to generate the feed simulation file, and we will borrow an existing CSV test data file from the Market Feed Monitor demo.

The Financial - Market Feed Monitor project contains

a feed simulation, MarketData.sbsf, which reads the

data file marketfeed.csv. You can use the feed

simulation to run your .ssql file. If you want to see how the feed simulation uses

the data file, you can:

-

Double-click

MarketData.sbfsin the Package Explorer to open it in the Feed Simulation editor. -

Notice the TicksIn schema in the Simulation Streams section. Click the triangle widget next to it to see the fields that the feed simulation generates on that stream.

-

Scroll down to the Generation Method for TicksIn section. You will notice that the selected method is

-

Click underneath to open the dialog.

-

At the top, the Data File is set to

marketfeed.csvin the project folder. Notice the records, shown both in the preview and formatted in the pane below that. The first record is:IBM,2006-04-31 10:18:23.0347,2006-04-31 10:18:23.0247,81.37,2006-04-31 10:18:23.0197,100,100,1,0,NYSE

Fields 1 (stock symbol), 3 (timestamp), 4 (bid or ask price) and 10 (feed name) correspond to the fields required by the input stream. Since each data file record includes unneeded fields, the feed simulation must select only the required fields. The fields used are indicated in the Column mapping pane.

-

When you are finished inspecting the feed simulation file, click .

In the SB Authoring perspective, make sure the tutorial_StreamSQL.ssql editor session is selected and active,

then click the ![]() button. This opens the SB

Test/Debug perspective and starts the application.

button. This opens the SB

Test/Debug perspective and starts the application.

In the Feed Simulations view, highlight the feed simulation file and click .

Monitor the input and output tuples in the Application Input and Application Output views. Note that tuples appear on both output streams.

When done, press F9 or click the

![]() Stop Running Application button.

Stop Running Application button.