Distributed Deployment Architecture

The distributed deployment architecture describes the deployment of multiple instances of the Core Engines and other components of the gateway.

TIBCO API Exchange Gateway supports a distributed deployment environment, in which multiple instances of the Core Engines can be deployed. This architecture meets the high availability and scalability requirements of the gateway components and is recommended for production environments.

Scaling and High Availability

TIBCO API Exchange Gateway provides a default site topology file, which is configured for a deployment with a single instance of each Core Engine of the gateway cluster, all deployed on a single server host. With this configuration, you can quickly deploy API Exchange Gateway in a development environment, though it typically does not meet the availability and scalability requirements of a production deployment. See High Availability Deployment Of Runtime Components.

The Studio can be used to create site topology configurations for your production environment, including load-balanced and fault-tolerant setups.

Load Balancing

TIBCO API Exchange Gateway can be rapidly scaled up and down by adding Core Engine instances to, or removing them from, the gateway cluster.

When multiple Core Engine instances are deployed in a gateway cluster, the key management functions, including throttle management, cache management, cache clearing management, and the Central Logger, are coordinated across all Core Engine instances. The components that provide these management functions do not need to be scaled to support higher transaction volumes.

However, an increase in transaction volume is likely to be accompanied by a corresponding increase in management activity. To prevent this management activity from affecting the Core Engines, move the management components and the TIBCO Spotfire Servers onto separate servers.

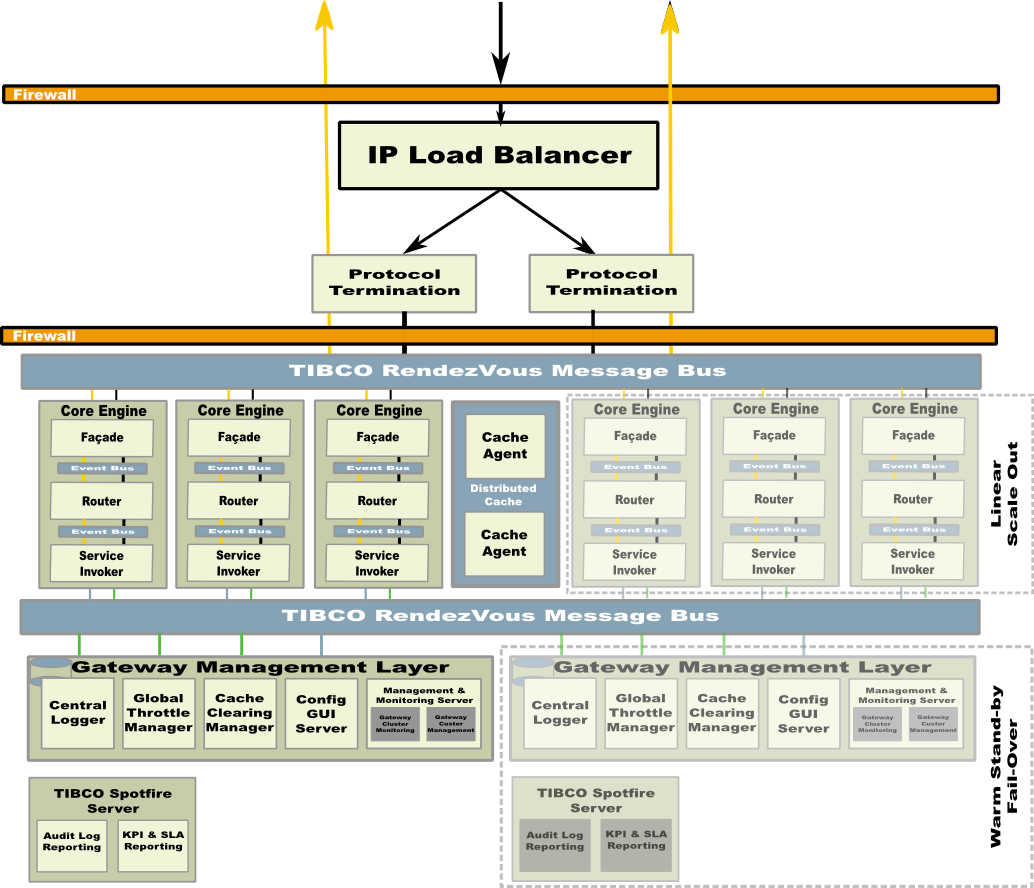

The following diagram illustrates a simplified view of the scaled solution and depicts the deployment of various components in a distributed environment:

Increasing the number of Core Engines in a TIBCO API Exchange Gateway deployment provides a near-linear increase in the maximum number of transactions that can be managed. This type of deployment also reduces the impact of the failure of an individual Core Engine. TIBCO API Exchange Gateway uses a shared-nothing model between the active Core Engines, so that no state is shared between them.
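The scaling and failure-impact claims above can be illustrated with a small arithmetic model. This is a minimal sketch, assuming ideal linear scaling and a hypothetical per-engine throughput figure (`per_engine_tps`); because real deployments scale only near-linearly, measured values will be somewhat lower.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    engines: int           # number of active Core Engine instances
    per_engine_tps: float  # hypothetical measured throughput of one engine

    def max_tps(self) -> float:
        # Shared-nothing: engines do not coordinate per request, so capacity
        # is modeled as the sum of the individual capacities (ideal case).
        return self.engines * self.per_engine_tps

    def capacity_after_failure(self) -> float:
        # Losing one engine removes only that engine's share of capacity.
        return (self.engines - 1) * self.per_engine_tps

    def fraction_lost_on_failure(self) -> float:
        return 1 / self.engines


c = Cluster(engines=4, per_engine_tps=500.0)
print(c.max_tps())                   # 2000.0
print(c.capacity_after_failure())    # 1500.0
print(c.fraction_lost_on_failure())  # 0.25
```

In this model, the more engines a cluster has, the smaller the share of capacity lost when any one of them fails.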

To support a load balanced setup, the transport protocol termination components must be configured appropriately.

- For the JMS transport endpoints, load balancing of the requests across multiple Core Engines is achieved by setting up non-exclusive queues in the JMS server. This type of setup automatically balances the load of incoming messages across the JMS receivers of the Core Engine instances.

- For the HTTP transport endpoints, load balancing of the requests across multiple Core Engines is handled by the Module for the Apache HTTP server. If a comma-separated list of TIBCO Rendezvous subjects is configured for an Apache server location, the Module for the Apache HTTP server load balances the incoming requests across the list of Rendezvous subjects. For each deployed Core Engine instance, a different Rendezvous subject from the list must be configured to ensure that requests are handled only once by a single Core Engine instance.
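A minimal sketch of how a comma-separated subject list can spread HTTP requests so that each request reaches exactly one Core Engine. It assumes a simple round-robin policy, which the Module for the Apache HTTP server may or may not use; the subject names, engine names, and the `SubjectBalancer` class are hypothetical and not part of the product.

```python
from itertools import cycle
from typing import Dict, List


def parse_subjects(config_value: str) -> List[str]:
    # Split the comma-separated location setting and drop blank entries.
    return [s.strip() for s in config_value.split(",") if s.strip()]


class SubjectBalancer:
    """Hypothetical round-robin selector over configured Rendezvous subjects."""

    def __init__(self, config_value: str) -> None:
        self.subjects = parse_subjects(config_value)
        if not self.subjects:
            raise ValueError("at least one Rendezvous subject is required")
        self._next = cycle(self.subjects)

    def route(self) -> str:
        # Each call returns exactly one subject, so each request is sent once.
        return next(self._next)


# Hypothetical one-to-one mapping: each Core Engine listens on its own subject.
engines: Dict[str, str] = {
    "GW.REQUEST.CE1": "core-engine-1",
    "GW.REQUEST.CE2": "core-engine-2",
    "GW.REQUEST.CE3": "core-engine-3",
}

balancer = SubjectBalancer("GW.REQUEST.CE1, GW.REQUEST.CE2, GW.REQUEST.CE3")
handled_by = [engines[balancer.route()] for _ in range(6)]
print(handled_by)
# ['core-engine-1', 'core-engine-2', 'core-engine-3', 'core-engine-1', 'core-engine-2', 'core-engine-3']
```

The one-to-one mapping is the point of the design: if two engines listened on the same subject, both could receive the same request, which is why each Core Engine instance is configured with a different subject from the list.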

When the protocol termination components reach their scaling limits, an IP load balancer can be added to the deployment in front of multiple Apache HTTP servers or JMS servers. Configure the load balancer to expose the Apache HTTP servers or JMS servers on a single IP address.

High Availability of TIBCO API Exchange Gateway

For a highly available deployment of TIBCO API Exchange Gateway, the components in the Gateway Operational Layer are configured differently from the components in the Gateway Management Layer.

Gateway Operational Layer

Because the Core Engine and the Apache HTTP server maintain no state, fault tolerance is provided by running multiple instances with the same configuration across sites and host servers, in a load-balanced configuration. See Load Balancing.

A fault-tolerant setup for the JMS endpoints of TIBCO API Exchange Gateway leverages the fault-tolerance capabilities of TIBCO Enterprise Message Service. For details, see TIBCO Enterprise Message Service™ User’s Guide.

Fault tolerance of Cache Agents is handled transparently by the object management layer. For fault tolerance of cache data, the only configuration tasks are to define the number of backups to keep and to provide sufficient storage capacity. Cache Agents are used only to implement the association cache, which is rebuilt automatically after a complete failure as TIBCO API Exchange Gateway handles new transactions. Therefore, Cache Agents do not require a backing store.
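The number of backups directly drives the storage capacity you need to provide. The following rough sizing sketch assumes each cached object is stored as one primary copy plus the configured number of backup copies, spread evenly across Cache Agents; the function name and the even-distribution assumption are illustrative, not product behavior.

```python
def per_agent_storage_mb(data_mb: float, backup_count: int, cache_agents: int) -> float:
    """Hypothetical estimate of cache storage needed on each Cache Agent.

    Assumes one primary copy plus `backup_count` backup copies of every
    object, distributed evenly across `cache_agents` agents.
    """
    if backup_count < 0:
        raise ValueError("backup_count must be non-negative")
    # In this model, the primary and each backup copy live on different agents.
    if cache_agents < backup_count + 1:
        raise ValueError("need at least backup_count + 1 Cache Agents")
    total_mb = data_mb * (1 + backup_count)
    return total_mb / cache_agents


print(per_agent_storage_mb(1200.0, backup_count=1, cache_agents=3))  # 800.0
```

Raising `backup_count` from 1 to 2 in this model raises the per-agent figure to 1200.0 MB, which is the storage cost of keeping one more copy of every object.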

Gateway Management Layer

The components of the Gateway Management Layer must be deployed once, as a single primary-secondary group. The Central Logger and the Global Throttle Manager must have exactly one running instance at all times so that the Core Engine operates without loss of functionality.

Therefore, the Central Logger and the Global Throttle Manager must be deployed in a fault-tolerant configuration, with one active engine instance and one or more standby agents on separate host servers. You can configure this fault-tolerant setup in the cluster deployment descriptor (CDD) file by setting the maximum number of active agents to one for each of the two agent classes, and by creating multiple processing unit configurations for both the Global Throttle Manager and the Central Logger agents. Deployed standby agents maintain a passive Rete network: they do not listen to events from channels and they do not update working memory. A standby agent takes over if the active instance fails.
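The active/standby behavior described above can be modeled as follows. This is a behavioral sketch only, not CDD syntax: `max_active = 1` stands in for the CDD setting that limits each agent class to one active agent, and the `FaultTolerantGroup` class and the agent and host names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Agent:
    name: str
    host: str
    alive: bool = True


@dataclass
class FaultTolerantGroup:
    """Hypothetical model of one agent class (e.g. Global Throttle Manager)."""
    agents: List[Agent]
    max_active: int = 1  # models the CDD limit of one active agent
    active: List[Agent] = field(default_factory=list)

    def reconcile(self) -> List[str]:
        # Drop active agents that have failed.
        self.active = [a for a in self.active if a.alive]
        # Promote live standby agents until the active limit is reached.
        for agent in self.agents:
            if len(self.active) >= self.max_active:
                break
            if agent.alive and agent not in self.active:
                self.active.append(agent)
        return [a.name for a in self.active]


gtm = FaultTolerantGroup([Agent("gtm-1", "hostA"), Agent("gtm-2", "hostB")])
print(gtm.reconcile())  # ['gtm-1']  (gtm-2 stays on standby)
gtm.agents[0].alive = False  # simulate failure of the active agent on hostA
print(gtm.reconcile())  # ['gtm-2']  (the standby takes over)
```

Placing the agents on different hosts is what makes the promotion meaningful: a host failure takes down only the active agent, not its standby.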

The other components of the Gateway Management Layer have no direct impact on the functionality of a running Core Engine instance, so they can be deployed in a cold standby configuration. This applies to the following components:

Deploy multiple instances of these components across host servers, with only one instance running. If the running instance goes down, start one of the other instances to restore full gateway functionality.
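In contrast to the Central Logger and the Global Throttle Manager, a cold standby instance is started only after the running instance fails. The following minimal sketch models that procedure; the instance and host names are hypothetical, and setting `running = True` stands in for whatever start mechanism the deployment actually uses (manual or scripted).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Instance:
    name: str
    host: str
    host_up: bool = True
    running: bool = False


def ensure_one_running(instances: List[Instance]) -> Optional[Instance]:
    """Hypothetical cold-standby check: make sure one instance is running."""
    # Nothing to do if an instance is already running on an available host.
    for inst in instances:
        if inst.running and inst.host_up:
            return inst
    # Otherwise cold-start the first stopped instance on an available host.
    for inst in instances:
        if inst.host_up and not inst.running:
            inst.running = True  # stands in for the real start procedure
            return inst
    return None  # no available host left


pool = [Instance("mgmt-a", "host1", running=True), Instance("mgmt-b", "host2")]
print(ensure_one_running(pool).name)  # mgmt-a
pool[0].host_up = False  # host1 goes down
print(ensure_one_running(pool).name)  # mgmt-b
```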