Node quorum monitoring is controlled by configuration. Node quorum monitoring is enabled using the nodeQuorum configuration option. By default, node quorum monitoring is disabled.

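When node quorum monitoring is enabled, the number of active application nodes required for a quorum is determined using one of these methods: a minimum number of active remote nodes, or a percentage of votes from the active nodes in the cluster.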

Note: Domain manager nodes are not included in node quorum calculations. Only application nodes count towards a node quorum.

When node quorum monitoring is enabled, high availability services

are Disabled if a node quorum is not met. This ensures

that a partition can never be active on multiple nodes. When a node quorum

is restored, the node state is set to Partial or

Active depending on the number of active remote nodes

and the node quorum mechanism being used. Once a quorum has been

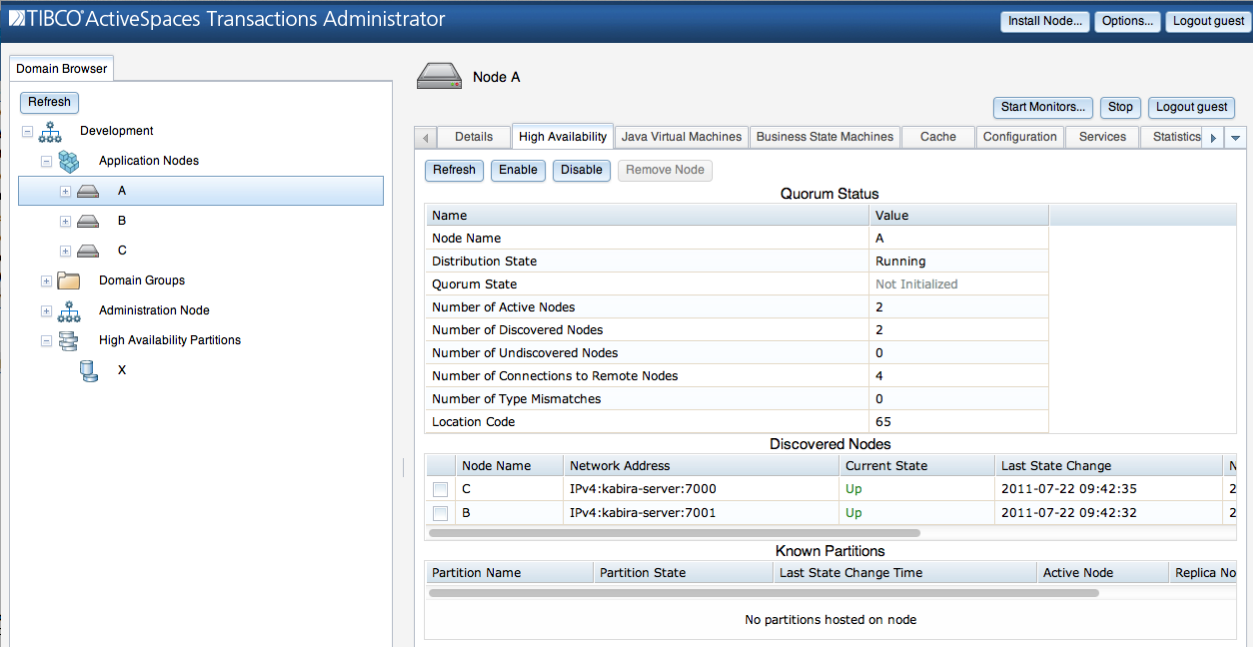

reestablished partitions must be migrated back to the node. See the section called “Migrating partitions”. The current quorum state is

displayed on the High Availability tab for a node (see

Figure 6.1, “Distribution status” for an example).

When using the minimum number of active remote nodes to determine a node quorum, the node quorum is not met when the number of active remote nodes drops below the configured minimumNumberQuorumNodes configuration value.

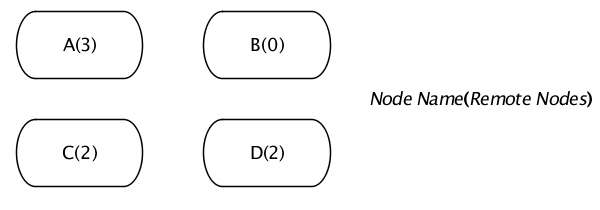

Figure 6.17, “Minimum node quorum cluster” shows a four-node example cluster that uses the minimum number of active remote nodes to determine node quorum. Each node shows its configured minimum remote node value.

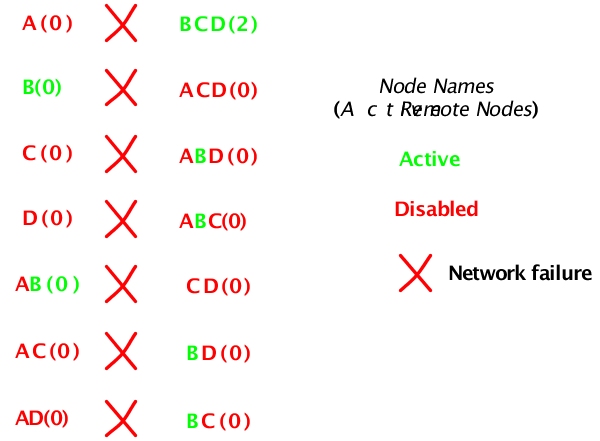

Figure 6.18, “Minimum node quorum status - network failures” shows different scenarios based on network failures. All machines remain active. For each case the disabled and active nodes are shown, along with the total number of visible remote nodes, for the sub-cluster caused by the network failure.
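The minimum remote node rule can be illustrated with a short Python sketch. This is a model of the rule as stated above, not the product's implementation. The function names, the sub-cluster layout, and the configured value of 2 are all assumptions chosen for illustration and are not taken from the figures.

```python
from typing import Dict, List


def quorum_met_by_minimum(active_remote_nodes: int,
                          minimum_number_quorum_nodes: int) -> bool:
    """Quorum is not met when the number of active remote nodes drops
    below the configured minimumNumberQuorumNodes value."""
    return active_remote_nodes >= minimum_number_quorum_nodes


def quorum_by_node(sub_clusters: List[List[str]],
                   minimum: Dict[str, int]) -> Dict[str, bool]:
    """Evaluate the rule for every node after a network failure has split
    the cluster into the given sub-clusters (hypothetical helper)."""
    result: Dict[str, bool] = {}
    for members in sub_clusters:
        for node in members:
            # Remote nodes visible to this node are the other members
            # of its sub-cluster.
            remote = len(members) - 1
            result[node] = quorum_met_by_minimum(remote, minimum[node])
    return result


# Assumed configuration: every node requires two active remote nodes.
minimum = {"A": 2, "B": 2, "C": 2, "D": 2}

# Assumed failure: node D is cut off from nodes A, B, and C.
print(quorum_by_node([["A", "B", "C"], ["D"]], minimum))
```

In this assumed scenario nodes A, B, and C each still see two remote nodes and keep their quorum, while node D sees none and would have its high availability services Disabled.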

When using voting percentages, the node quorum is not met when the percentage of votes in a cluster drops below the configured nodeQuorumPercentage value. By default each node is assigned one vote. However, this can be changed with the nodeQuorumVoteCount configuration value. This allows certain nodes to be given more weight in the node quorum calculation by assigning them a larger number of votes.

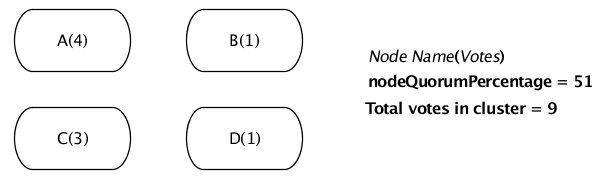

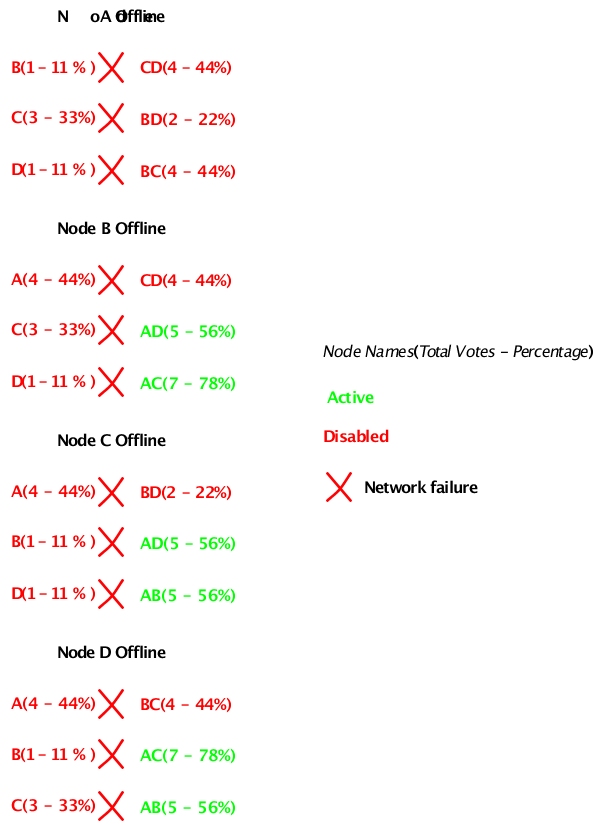

Figure 6.19, “Voting quorum cluster” shows a four-node example cluster that uses voting percentages to determine node quorum. Each node shows its configured vote count. The node quorum percentage is set at 51%.

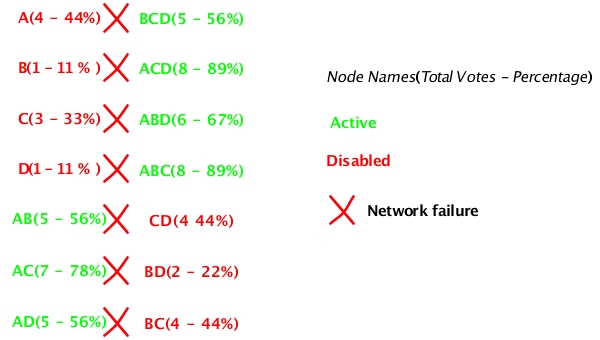

Figure 6.20, “Voting node quorum status - network failures” shows different scenarios based on network failures. All machines remain active. For each case the disabled and active nodes are shown, along with the total number of votes, and percentage, for the sub-cluster caused by the network failure.

Figure 6.21, “Voting node quorum status - network and machine failures” shows different scenarios based on network and machine failures. For each case the disabled and active nodes are shown, along with the total number of votes, and percentage, for the sub-cluster caused by the failure. This shows the advantage of using voting to determine node quorum status: the cluster can remain active following a single failure, while still allowing the cluster to be disabled if multiple nodes or networks fail.
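The voting calculation can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the product's algorithm: it assumes the percentage is the votes of the nodes visible in a sub-cluster (including the local node) divided by the votes of all nodes in the cluster. The vote counts below are hypothetical and are not taken from the figures.

```python
from typing import Dict, Iterable


def vote_percentage(visible_nodes: Iterable[str], votes: Dict[str, int]) -> float:
    """Percentage of the cluster's total votes held by the visible nodes.

    Assumption: the denominator is the sum of the votes of every node
    in the cluster, whether or not it is currently reachable.
    """
    total = sum(votes.values())
    visible = sum(votes[node] for node in visible_nodes)
    return 100.0 * visible / total


def quorum_met_by_votes(visible_nodes: Iterable[str],
                        votes: Dict[str, int],
                        node_quorum_percentage: float) -> bool:
    """Quorum is not met when the vote percentage drops below the
    configured nodeQuorumPercentage value."""
    return vote_percentage(visible_nodes, votes) >= node_quorum_percentage


# Assumed nodeQuorumVoteCount values: node A carries extra weight.
votes = {"A": 2, "B": 1, "C": 1, "D": 1}

# Assumed network failure splitting the cluster into {A, B} and {C, D}.
for sub_cluster in (["A", "B"], ["C", "D"]):
    pct = vote_percentage(sub_cluster, votes)
    ok = quorum_met_by_votes(sub_cluster, votes, 51)
    print(sub_cluster, pct, "quorum met" if ok else "Disabled")
```

With these assumed values the sub-cluster containing node A holds 3 of 5 votes and keeps its quorum, while the other sub-cluster holds 2 of 5 and does not. Because at most one sub-cluster can hold 51% or more of the votes, only one side of a split can stay active.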

When a new high availability configuration that changes the node quorum values is activated, the changes do not take effect immediately. All changes are immediately propagated to all nodes in the cluster, but they do not take effect until a node leaves and rejoins the cluster, or a remote node fails. This ensures that a misconfiguration does not cause nodes to be taken offline unexpectedly.
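The deferred behavior described above can be modeled as a pending value that replaces the effective value only when cluster membership changes. The Python sketch below is a conceptual model only. The class and method names are hypothetical and do not correspond to any product API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class QuorumSettings:
    """Hypothetical container for a node quorum value."""
    minimum_number_quorum_nodes: int


class NodeQuorumConfig:
    """Models quorum settings that are propagated at once but applied later."""

    def __init__(self, effective: QuorumSettings) -> None:
        self.effective = effective
        self.pending: Optional[QuorumSettings] = None

    def activate(self, new_settings: QuorumSettings) -> None:
        # The new values are stored (propagated) immediately, but the
        # values used for quorum decisions are left unchanged.
        self.pending = new_settings

    def on_membership_event(self) -> None:
        # Called when a node leaves and rejoins the cluster, or when a
        # remote node fails; only now do pending values take effect.
        if self.pending is not None:
            self.effective = self.pending
            self.pending = None


config = NodeQuorumConfig(QuorumSettings(minimum_number_quorum_nodes=1))
config.activate(QuorumSettings(minimum_number_quorum_nodes=3))
print(config.effective)   # still the original settings
config.on_membership_event()
print(config.effective)   # the newly activated settings
```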

The configuration values for node quorum are summarized in Table 6.2, “High availability configuration”.

There are cases where an application can tolerate operating with partitions active on multiple nodes for periods of time. If this is acceptable behavior for an application, the nodeQuorum configuration option should be set to disabled. When node quorum is disabled, the administrator must manually restore the cluster when the connectivity problem has been resolved.

The cluster partition summary display (see Figure 6.4, “Cluster partition summary”) can be used to determine if partitions are active on multiple nodes. Before attempting to restore the partitions active on multiple nodes, connectivity between all nodes must have been reestablished. See the section called “Node connectivity” for details on determining the status of node connectivity in a cluster.

The following steps are required to restore a cluster with partitions active on multiple nodes:

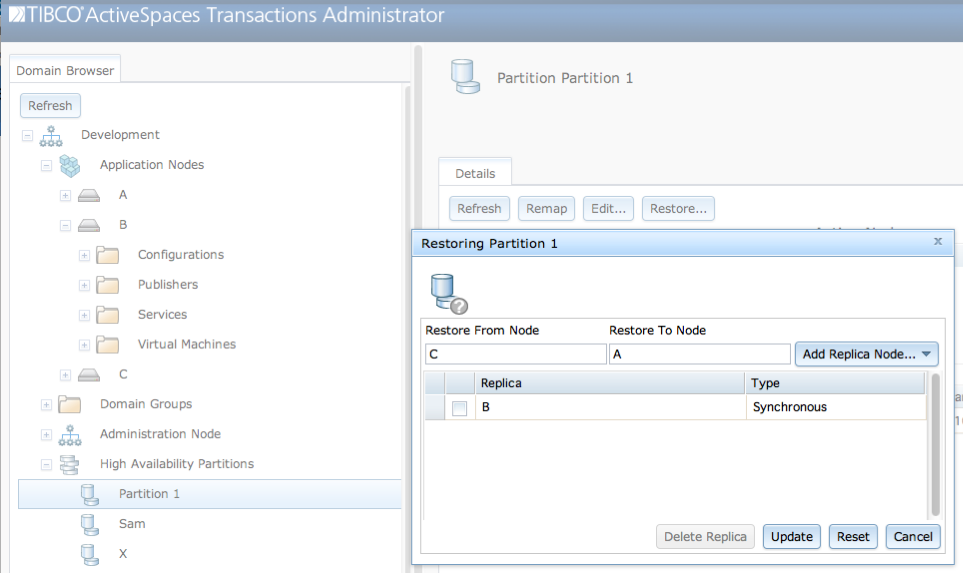

1. Define how to restore all partitions active on more than one node (see Figure 6.22, “Restoring a partition”).

2. Enable partitions on all nodes specified in the Restore To Node field in the restore partition dialog (see Figure 6.23, “Enabling partitions”).

Figure 6.22, “Restoring a partition” shows partition X being restored from node C to node A. The partition must currently be active on both the from node and the to node specified in the restore partition dialog. When partitions are enabled on node A, the partition objects will be merged with the objects on node C.

Clicking the Enable button on the High Availability tab for a node (see Figure 6.23, “Enabling partitions”) causes all partitions being restored to this node to be merged with the partition on the node specified as the from node in the restore partition dialog. The partition is then made active on this node.

When these steps are complete, the cluster has been restored to service and all partitions now have a single active node.

Restoring a cluster after a multi-master scenario can also be performed using these commands.

    administrator servicename=A define partition name="Partition 1" activenode=A replicas=B,C restorefromnode=C

    administrator servicename=A join cluster type=merge
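When several partitions need to be restored, the two commands above can be generated from a small script. The Python sketch below only builds command strings in the form shown above and does not run them. The helper names are hypothetical, and only the parameters that appear in the example commands are used.

```python
from typing import List


def define_partition_command(service: str, name: str, active_node: str,
                             replicas: List[str], restore_from_node: str) -> str:
    """Build the 'define partition' command for one partition to restore."""
    return (
        f'administrator servicename={service} define partition '
        f'name="{name}" activenode={active_node} '
        f'replicas={",".join(replicas)} restorefromnode={restore_from_node}'
    )


def join_cluster_command(service: str) -> str:
    """Build the 'join cluster' command that merges the restored partitions."""
    return f"administrator servicename={service} join cluster type=merge"


# Reproduces the example above: restore "Partition 1" from node C to node A.
print(define_partition_command("A", "Partition 1", "A", ["B", "C"], "C"))
print(join_cluster_command("A"))
```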