Connect to a MapR 4.x Data Source

This topic describes how to configure Team Studio to connect to a MapR 4.x data source.

Procedure

- Edit the $CHORUS_HOME/shared/chorus.properties file and add the -Djava.library.path option shown below to the list of values for the java_options parameter, so that the MapR FS libraries are loaded dynamically:

java_options = -Djava.library.path=$CHORUS_HOME/vendor/hadoop/lib -Djava.security.egd=file:/dev/./urandom -server -Xmx4096m -Xms2048m -Xmn1365m -XX:MaxPermSize=256m -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:ParallelGCThreads=3 -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=./ -XX:+CMSClassUnloadingEnabled
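One way to confirm the edit is to check that the java_options line carries a -Djava.library.path entry. The following is a minimal sketch, not part of Team Studio: the function name library_path_from_props is hypothetical, and it assumes that java_options is defined on a single line, as in the example above.

```shell
# Print the value of -Djava.library.path from the java_options line of a
# properties file, or return non-zero if the option is absent.
# Assumes java_options sits on one line; the function name is illustrative.
library_path_from_props() {
    props_file=$1
    # Keep only the java_options line, split it into one token per line,
    # then strip the option name from the matching token.
    value=$(grep -E '^[[:space:]]*java_options[[:space:]]*=' "$props_file" \
        | tr ' ' '\n' \
        | sed -n 's/^-Djava\.library\.path=//p' \
        | head -n 1)
    [ -n "$value" ] && printf '%s\n' "$value"
}
```

For example, library_path_from_props "$CHORUS_HOME/shared/chorus.properties" should print the directory that the native libraries are later copied into.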

- Make sure that Team Studio can resolve the DNS names of all cluster nodes. Configuring the /etc/hosts file might be necessary:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
172.27.0.2 chorus.alpinenow.local chorus
172.27.0.4 mapr4a.alpinenow.local mapr4a
172.27.0.5 mapr4b.alpinenow.local mapr4b
172.27.0.6 mapr4c.alpinenow.local mapr4c
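To see quickly whether every cluster node has an entry, a small helper can scan a hosts-format file for the expected names. This is only a sketch under stated assumptions: missing_hosts is a hypothetical function name, and it inspects the file you give it rather than querying the resolver, so names served by a DNS server are reported as missing.

```shell
# Report which of the given host names have no entry in a hosts-format file.
# Usage: missing_hosts HOSTS_FILE NAME...
# Prints each missing name on its own line; returns non-zero if any is missing.
missing_hosts() {
    hosts_file=$1
    shift
    rc=0
    for name in "$@"; do
        # Skip comment lines, then look for the name among the fields that
        # follow the address, stopping at an inline comment.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            {
                for (i = 2; i <= NF; i++) {
                    if ($i ~ /^#/) break
                    if ($i == n) found = 1
                }
            }
            END { exit found ? 0 : 1 }' "$hosts_file"; then
            printf '%s\n' "$name"
            rc=1
        fi
    done
    return $rc
}
```

For example, missing_hosts /etc/hosts mapr4a.alpinenow.local mapr4b.alpinenow.local mapr4c.alpinenow.local prints nothing when all three names are present. On Linux systems with glibc, getent hosts followed by a host name queries the resolver itself.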

- Either add a mapr user and group with uid 505 and gid 505 on the Team Studio computer, or add a Team Studio user and group with uid 507 and gid 507 on all MapR nodes. All MapR clients should use the same uid and gid to avoid permission issues.

groupadd mapr --gid 505
useradd mapr --gid 505 --uid 505
groupadd chorus --gid 507
useradd chorus --gid 507 --uid 507
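After creating the accounts, you may want to confirm that the ids match on every machine. The sketch below reads a passwd-format line from standard input and compares its uid and primary gid with the expected values. The function name has_uid_gid is hypothetical, and feeding it from getent passwd assumes a Linux system where that command is available.

```shell
# Check that a passwd-format line (name:password:uid:gid:...) read from stdin
# matches the expected uid and primary gid.
# Usage: getent passwd mapr | has_uid_gid 505 505
# Returns 0 on a match, non-zero otherwise (including empty input).
has_uid_gid() {
    want_uid=$1
    want_gid=$2
    awk -F: -v u="$want_uid" -v g="$want_gid" '
        $3 == u && $4 == g { ok = 1 }
        END { exit ok ? 0 : 1 }'
}
```

Running the same check on the Team Studio computer and on each MapR node makes a mismatched uid or gid visible before it shows up as a permission error.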

- The MapR 4.0.1 client must be installed and configured on the Team Studio computer so that Team Studio can communicate with the MapR cluster (version 4.0.1 or 4.1.0). For more information about MapR 4.0.1 client installation, see this page. After you install the MapR 4.0.1 client, copy the native libraries into the directory that you configured in the chorus.properties file:

cp /opt/mapr/hadoop/hadoop-2.4.1/lib/native/* $CHORUS_HOME/vendor/hadoop/lib/
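To verify the copy, you can compare the two directories by file name. This is a minimal sketch: missing_files is a hypothetical helper, and it checks only that a same-named entry exists in the destination, not that the contents are identical.

```shell
# List entries that exist in the source directory but not in the destination.
# Usage: missing_files SRC_DIR DST_DIR
# Prints nothing and returns 0 when every source entry has a same-named copy.
missing_files() {
    src_dir=$1
    dst_dir=$2
    rc=0
    for path in "$src_dir"/*; do
        [ -e "$path" ] || continue    # empty directory: the glob did not expand
        name=${path##*/}              # strip the directory part
        if [ ! -e "$dst_dir/$name" ]; then
            printf '%s\n' "$name"
            rc=1
        fi
    done
    return $rc
}
```

For example, missing_files /opt/mapr/hadoop/hadoop-2.4.1/lib/native "$CHORUS_HOME/vendor/hadoop/lib" prints the name of any native library that did not reach the target directory.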

Then edit the yarn-site.xml and mapred-site.xml files (in the /opt/mapr/hadoop/hadoop-2.4.1/etc/hadoop directory) and be sure to use the correct host names. In the examples below, mapr4x.alpinenow.local host names are used with two failover resource managers.

mapred-site.xml:

<configuration>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>mapr4c.alpinenow.local:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>mapr4c.alpinenow.local:19888</value>
  </property>
</configuration>

yarn-site.xml:

<configuration>
  <!-- Resource Manager HA Configs -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.automatic-failover.embedded</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yarn-mapr41.alpinenow.local</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.id</name>
    <value>rm1</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>mapr4a.alpinenow.local:5181,mapr4b.alpinenow.local:5181,mapr4c.alpinenow.local:5181</value>
  </property>
  <!-- Configuration for rm1 -->
  <property>
    <name>yarn.resourcemanager.scheduler.address.rm1</name>
    <value>mapr4a.alpinenow.local:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address.rm1</name>
    <value>mapr4a.alpinenow.local:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address.rm1</name>
    <value>mapr4a.alpinenow.local:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address.rm1</name>
    <value>mapr4a.alpinenow.local:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm1</name>
    <value>mapr4a.alpinenow.local:8088</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.https.address.rm1</name>
    <value>mapr4a.alpinenow.local:8090</value>
  </property>
  <!-- Configuration for rm2 -->
  <property>
    <name>yarn.resourcemanager.scheduler.address.rm2</name>
    <value>mapr4b.alpinenow.local:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address.rm2</name>
    <value>mapr4b.alpinenow.local:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address.rm2</name>
    <value>mapr4b.alpinenow.local:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address.rm2</name>
    <value>mapr4b.alpinenow.local:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm2</name>
    <value>mapr4b.alpinenow.local:8088</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.https.address.rm2</name>
    <value>mapr4b.alpinenow.local:8090</value>
  </property>
  <!-- :::CAUTION::: DO NOT EDIT ANYTHING ON OR ABOVE THIS LINE -->
</configuration>

Note: If you are not sure which values to use for the parameters listed above, open your MapR cluster console (https://your_mapr_cluster_host:8443) in a web browser.

- Edit the $CHORUS_HOME/shared/ALPINE_DATA_REPOSITORY/configuration/alpine.conf file and make sure that the mapr4 agent is enabled:

alpine {
  chorus {
    # scheme = HTTP
    # host = myhostname //change to other hostname
    # port = 9090 //change to other port
    # debug = true //change to true for debugging
  }
  hadoop.version.cdh4.agents.2.enabled=false
  hadoop.version.cdh5.agents.4.enabled=false
  hadoop.version.cdh53.agents.7.enabled=false
  hadoop.version.phd2.agents.1.enabled=false
  hadoop.version.phd3.agents.8.enabled=false
  hadoop.version.mapr4.agents.6.enabled=true
  hadoop.version.mapr3.agents.3.enabled=false
  hadoop.version.hdp2.agents.5.enabled=false
  hadoop.version.hdp22.agents.8.enabled=false #( same agent as phd3)
  hadoop.version.iop.agents.8.enabled=false #( same agent as phd3)
  hadoop.version.cdh54.agents.9.enabled=false
}

-

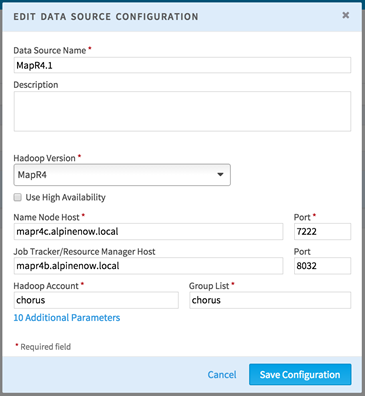

After the preceding steps are done, restart Team Studio and navigate to the Data Source configuration page from your web browser. Create a new Hadoop connection and select MapR4 from the Hadoop Version drop-down list. Then configure the connection with the correct parameters:

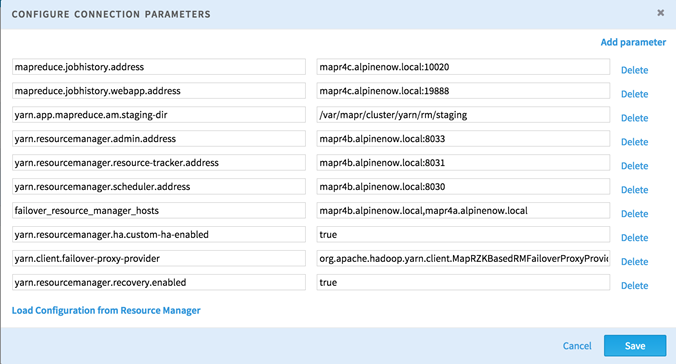

Also, configure the additional parameters as follows by clicking the Additional Parameters link:

mapreduce.jobhistory.address mapr4c.alpinenow.local:10020
mapreduce.jobhistory.webapp.address mapr4c.alpinenow.local:19888
yarn.app.mapreduce.am.staging-dir /var/mapr/cluster/yarn/rm/staging
yarn.resourcemanager.admin.address mapr4b.alpinenow.local:8033
yarn.resourcemanager.resource-tracker.address mapr4b.alpinenow.local:8031
yarn.resourcemanager.scheduler.address mapr4b.alpinenow.local:8030
mapreduce.job.map.output.collector.class org.apache.hadoop.mapred.MapRFsOutputBuffer
mapreduce.job.reduce.shuffle.consumer.plugin.class org.apache.hadoop.mapreduce.task.reduce.DirectShuffle

- If the MapR4 cluster has HA enabled for Resource Manager, add the following parameter in addition to the parameters above. The value should be a comma-separated list of available resource manager host names.

- If zero configuration failover is enabled in the MapR4 cluster, add the following parameters in addition to the above parameters:


Copyright © Cloud Software Group, Inc. All rights reserved.