Collapse

Transforms the data in one column of a table by computing subtotals or other calculations, such as averages and counts, that are grouped by another column in the same table. The result is a collapsed, or condensed, data set.

Information at a Glance

| Parameter | Description |
|---|---|
| Category | Transform |
| Data source type | HD |
| Send output to other operators | Yes |
| Data processing tool | MapReduce |

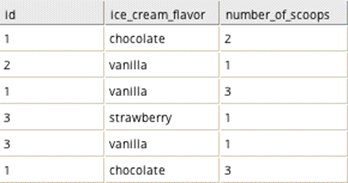

For example, consider the following data set.

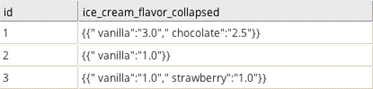

To determine the average number of scoops a customer orders for each flavor, group by id and choose the "average" aggregation with number_of_scoops as the aggregation column. The resulting data set is shown below.
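The grouping and averaging described above can be sketched in plain Python. This is a minimal illustration of the idea, not the operator's MapReduce or Spark implementation. It assumes the collapsed column is named `flavor`, and the sample rows and the `collapse_average` helper are hypothetical. Each id ends up with a single map from flavor to average scoops, which mirrors the single "sparse" output column.

```python
from collections import defaultdict

# Hypothetical sample rows: (id, flavor, number_of_scoops).
# The column name "flavor" and all values are illustrative assumptions.
ORDERS = [
    (1, "vanilla", 2),
    (1, "vanilla", 4),
    (1, "chocolate", 1),
    (2, "strawberry", 3),
    (2, "chocolate", 2),
    (2, "chocolate", 2),
]


def collapse_average(rows):
    """Group by id; map each flavor to the average number_of_scoops."""
    totals = defaultdict(dict)  # id -> {flavor: [running_sum, row_count]}
    for customer_id, flavor, scoops in rows:
        acc = totals[customer_id].setdefault(flavor, [0.0, 0])
        acc[0] += scoops
        acc[1] += 1
    # One entry per id; the value is a single sparse map (flavor -> average)
    # rather than one column per flavor.
    return {
        customer_id: {flavor: s / n for flavor, (s, n) in per_flavor.items()}
        for customer_id, per_flavor in totals.items()
    }


if __name__ == "__main__":
    for customer_id, averages in collapse_average(ORDERS).items():
        print(customer_id, averages)
```

Only the flavors a customer actually ordered appear in that customer's map, which is what makes the collapsed column sparse.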

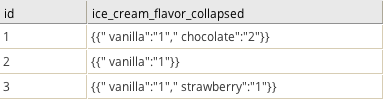

To determine only how many times a customer ordered each flavor, group by id and choose the "count" aggregation; no aggregation column is needed. The resulting data set is shown below.
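A count needs no aggregation column because every row contributes exactly 1. The sketch below illustrates this in plain Python under the same assumptions as before: the `flavor` column name, the sample rows, and the `collapse_count` helper are hypothetical and do not reflect the operator's internal implementation.

```python
from collections import Counter, defaultdict

# Hypothetical sample rows: (id, flavor). No aggregation column is needed.
ORDERS = [
    (1, "vanilla"),
    (1, "vanilla"),
    (1, "chocolate"),
    (2, "strawberry"),
    (2, "chocolate"),
    (2, "chocolate"),
]


def collapse_count(rows):
    """Group by id and count how many times each flavor was ordered."""
    per_id = defaultdict(Counter)
    for customer_id, flavor in rows:
        per_id[customer_id][flavor] += 1  # each row counts once
    # Convert each Counter to a plain dict: one sparse map per id.
    return {customer_id: dict(counts) for customer_id, counts in per_id.items()}


if __name__ == "__main__":
    print(collapse_count(ORDERS))
```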

Collapse is similar to the Pivot (DB) operator, except that the results are stored in a single column instead of being pivoted out to (n-1) columns, where n is the number of categorical values in the pivot/collapse column.
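To make the layout difference concrete, the following sketch takes hypothetical collapsed rows (one sparse map per id) and spreads them into a wide, pivot-style table. The `to_wide` helper and the data are illustrative assumptions. The sketch simply creates one column per flavor it finds, so its exact column set may differ from what the Pivot (DB) operator produces. Flavors an id never ordered become `None` in the wide layout, which is the padding a single sparse column avoids.

```python
# Hypothetical collapsed (sparse) rows: id -> {flavor: aggregate}.
COLLAPSED = {
    1: {"vanilla": 3.0, "chocolate": 1.0},
    2: {"strawberry": 3.0, "chocolate": 2.0},
}


def to_wide(collapsed):
    """Spread each sparse map into separate columns, pivot-style.

    Returns (header, rows). Missing flavors are filled with None.
    """
    flavors = sorted({f for values in collapsed.values() for f in values})
    header = ["id"] + flavors
    rows = [
        [customer_id] + [values.get(f) for f in flavors]
        for customer_id, values in sorted(collapsed.items())
    ]
    return header, rows


if __name__ == "__main__":
    header, rows = to_wide(COLLAPSED)
    print(header)
    for row in rows:
        print(row)
```

The wide layout grows by one column for every new category value, while the collapsed layout always stays at one column, no matter how many categories appear.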

Input

A data set from the preceding operator.

Configuration

| Parameter | Description |
|---|---|
| Notes | Notes or helpful information about this operator's parameter settings. When you enter content in the Notes field, a yellow asterisk appears on the operator. |
| Columns to Collapse | Define the rules for collapsing columns. |
| Group By | Select the columns to group by. |
| Store Results? | Specifies whether to store the results. |
| Results Location | The HDFS directory where the results of the operator are stored. This is the main directory; Results Name specifies the subdirectory within it. Click Choose File to open the Hadoop File Explorer dialog and browse to the storage location. Do not edit the text directly. |
| Results Name | The name of the file in which to store the results. |
| Overwrite | Specifies whether to delete existing data at that path and file name. |
| Compression | Select the type of compression for the output. Available Parquet compression options are the following. Available Avro compression options are the following. |
| Use Spark | If Yes (the default), uses Spark to optimize calculation time. |
| Advanced Spark Settings Automatic Optimization | |

Output

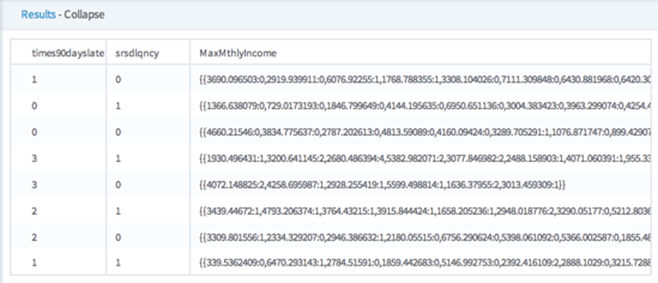

The output displays a small sample of the data rows in the output table or view.

The output is a data set stored in the newly created file. The collapsed column has the type "sparse."

Additional Notes

Sparse columns are typically used with the Naive Bayes, SVM, and Association Rules operators. Other operators accept sparse data types but treat the values as strings.