The High Availability Shared Disk Access (HA2) sample shows the following:

- How to separate high availability logic from the rest of the application logic.
- How to ensure that an application is running on one or the other of two servers, where the application requires access to a disk-based Query Table.
- How to test for the presence of an application in another container and start the application if it is not found.
- How to use the External Process operator to run commands specific to high availability design patterns.

Note

This sample requires the use of disk Query Tables. It will not run if you have a StreamBase Developer or Evaluation license.

In this sample, there are two servers. Each server may have a pair of containers: one container (ha) runs the high availability application (hadisk.sbapp), and the other container (app) runs an application (qtapp.sbapp) that performs Query Table operations. When qtapp is running, its server is the leader and has the lock to the disk-based Query Table.

Each running server always has an ha container, and one of the servers also has an app container. The hadisk application running in each ha container makes sure that one and only one qtapp is running. The qtapp has the lock required to have access to the data on disk.

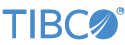

The following conceptual illustration shows the relationship among the applications, the containers, the servers, and the data directory on disk:

|

In this illustration, the two servers are A and B, and A starts first, as follows:

- When A starts, it creates a container, ha, and starts running hadisk, the high availability application.
- A metronome operator in hadisk sends a tuple once every second to determine if there is a container named app.
- If the app container does not exist on A, hadisk on A attempts to add it. If the attempt is successful, A has a second container, namely app, running qtapp. (Because B is not running at this point, hadisk on A is able to add a container to A and thereby starts qtapp running.)
- At this point server A has the lock on the data in the disk-based Query Table and is designated as the leader.

Server B starts in the same way as A, creating an ha container, running hadisk, and attempting to add another container running qtapp if none exists. In this case, when B starts, it is unable to add a second container because it is unable to get the lock. Because A is running qtapp, hadisk on B fails in its attempt to add a container running qtapp.

If A stops running, hadisk running on B is able to add the second container. In this case, a container is added to B and qtapp starts on B. Then, and only then, B has two containers running and B has the lock for the data on disk. The application on B keeps the lock as long as B is running. If A starts running again while qtapp is running on B, A is unable to add the app container because A is unable to get the lock.
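The start, fail over, and restart sequence described above can be traced with a small in-memory model. This is only an illustrative sketch: the `SharedDisk` and `Server` classes are hypothetical stand-ins, not StreamBase APIs, and the model assumes that a clean shutdown releases the lock.

```python
class SharedDisk:
    """Hypothetical stand-in for the data directory; one lock, one owner."""

    def __init__(self):
        self.lock_owner = None

    def try_lock(self, server_name):
        # Grant the lock only if nobody holds it.
        if self.lock_owner is None:
            self.lock_owner = server_name
            return True
        return False

    def release(self, server_name):
        if self.lock_owner == server_name:
            self.lock_owner = None


class Server:
    """Hypothetical model of one server and its containers."""

    def __init__(self, name, disk):
        self.name = name
        self.disk = disk
        self.containers = set()
        self.leader = False

    def start(self):
        # A running server always has the ha container.
        self.containers = {"system", "ha"}

    def tick(self):
        # One metronome tick of hadisk: add app only if it is missing
        # and the lock on the shared disk can be obtained.
        if "ha" not in self.containers or "app" in self.containers:
            return
        if self.disk.try_lock(self.name):
            self.containers.add("app")
            self.leader = True

    def shutdown(self):
        # Assumption: a clean shutdown gives the lock back.
        self.containers.clear()
        self.leader = False
        self.disk.release(self.name)


def demo():
    disk = SharedDisk()
    a, b = Server("A", disk), Server("B", disk)
    a.start(); a.tick()       # A starts first and gets the lock
    b.start(); b.tick()       # B cannot get the lock and keeps only ha
    before = (a.leader, b.leader)
    a.shutdown(); b.tick()    # A stops; B's next tick adds app
    a.start(); a.tick()       # A returns, but B still holds the lock
    after = (a.leader, b.leader)
    return before, after


if __name__ == "__main__":
    print(demo())
```

In this model, leadership moves to B only after A shuts down, and it stays with B when A comes back, which matches the behavior described for the sample.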

See the following topics for more details on the application files, the configuration file, and the scripts:

The hadisk application is designed to make sure that qtapp is always running on one server or the other. It also ensures that one and only one server has the lock to the data on disk at any given time.

The hadisk application uses External Process operators to perform operations that can be generated only by commands external to the servers A and B. For instance, DescribeAppContainer, an External Process operator, issues the following command:

sbadmin -u name_of_uri/system describe app

If this command fails, it is because the app container does not exist.

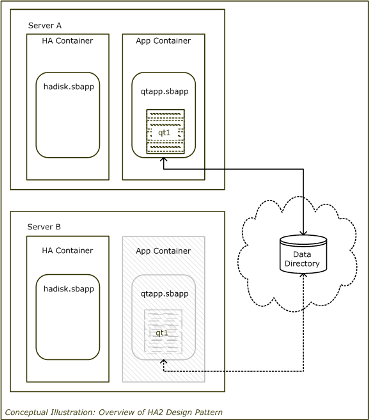

The following EventFlow Editor sample shows hadisk.sbapp:

|

As shown in the EventFlow Editor, the hadisk application does the following:

- Sends a tuple once every second using OnceASecond, a metronome operator.
- Uses DescribeAppContainer, an External Process operator, to determine whether qtapp is running.
- In the case where qtapp is not running, AppContainerExists, a filter, proceeds to AddAppContainer, which is another External Process operator.
- AddAppContainer attempts to add a container for qtapp. If it succeeds, the server running this instance of hadisk has the lock.
- SetLeader, another External Process operator, designates the server with the lock as the leader.
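The flow above amounts to a short decision chain driven by command exit codes. The following sketch shows one way to express a single pass of that chain in Python. The function names, the returned labels, and the example URI are hypothetical. Only the describe command line comes from the text above. The add-container and set-leader steps are passed in as callables because their exact command lines are not shown here.

```python
import subprocess
import sys


def describe_argv(uri):
    """Argument list for the describe command quoted in the text."""
    return ["sbadmin", "-u", f"{uri}/system", "describe", "app"]


def exit_code(argv):
    """Run an external command and return its exit code."""
    return subprocess.run(argv, check=False).returncode


def hadisk_tick(describe_app, add_app_container, set_leader):
    """One pass of the flow. Each argument is a callable returning an
    exit code, where 0 means the command succeeded."""
    if describe_app() == 0:
        return "app-exists"        # container found, nothing to do
    if add_app_container() != 0:
        return "add-failed"        # another server holds the lock
    set_leader()                   # only reached when the add succeeded
    return "became-leader"


if __name__ == "__main__":
    # Stubbed run: describe fails, add succeeds, so this server leads.
    print(hadisk_tick(lambda: 1, lambda: 0, lambda: 0))
    # exit_code works with any command; here a Python one-liner.
    print(exit_code([sys.executable, "-c", "raise SystemExit(3)"]))
```

With a real server, `describe_app` could be `lambda: exit_code(describe_argv(uri))`, assuming sbadmin is on the path. Passing the steps in as callables keeps the decision logic testable without a running server.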

The following table lists the operators in hadisk.sbapp, along with a description of each.

| Operator Name | Operator Type | Usage |
|---|---|---|
| OnceASecond | Metronome operator | Sends a tuple once per second. |
| AddVariables | Map operator | Adds stream variables (uri, app, qtdir) that are required by the External Process operators. |
| DescribeAppContainer | External Process operator | Uses the sbadmin describe command to determine if there is a container running qtapp. The command is as follows: sbadmin -u name_of_uri/system describe app |
| AppContainerExists | Filter operator | Controls the next step in the process based on whether there is a container (that is, it responds to the failure or success of the sbadmin describe command). |
| AddAppContainer | External Process operator | If no container exists, uses the sbadmin addContainer command to add the container. |
| AppContainerAdded | Filter operator | Controls the next step of the process, based on whether the sbadmin addContainer command failed or succeeded. If it succeeds, its server becomes the leader. |
| SetLeader | External Process operator | If the sbadmin addContainer command succeeds, sets the leadership status for its server to LEADER. |

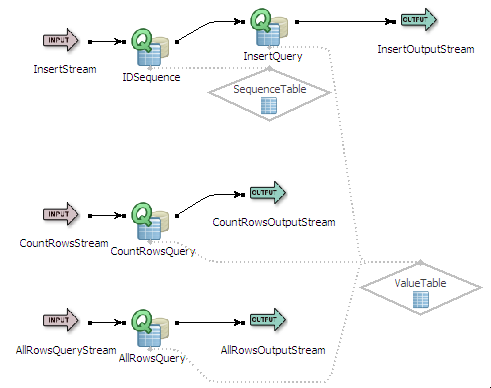

The qtapp application holds two Query Tables,

SequenceTable and ValueTable. ValueTable contains IDs

and names based on the values on disk. Because only one application can have the

lock to the shared disk, only one instance of qtapp

can run at a time.

See the following example of the EventFlow Editor displaying qtapp:

|
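The single-instance constraint on qtapp rests on an exclusive lock on the shared data directory. As a general illustration of that idea, the sketch below uses an atomically created lock file. This is a common technique and is not necessarily how StreamBase implements its disk Query Table lock. The file name `qt.lock` and the helper names are hypothetical.

```python
import os
import tempfile


def try_acquire(lock_path):
    """Create the lock file atomically; only the first caller succeeds."""
    try:
        # O_EXCL makes creation fail if the file already exists.
        fd = os.open(lock_path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    os.close(fd)
    return True


def release(lock_path):
    """Give the lock back by removing the file."""
    os.remove(lock_path)


def demo():
    with tempfile.TemporaryDirectory() as data_dir:
        lock_path = os.path.join(data_dir, "qt.lock")  # hypothetical name
        first = try_acquire(lock_path)    # first instance gets the lock
        second = try_acquire(lock_path)   # second instance is refused
        release(lock_path)
        third = try_acquire(lock_path)    # free again after release
        return first, second, third


if __name__ == "__main__":
    print(demo())
```

A process that exits without calling `release` leaves the file behind, which is the kind of leftover lock that a checking or clearing script has to deal with.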

The following table lists the components in qtapp, along with a description of each.

The configuration file, ha.sbconf, holds the configuration options that are used to start sbd. The same configuration file is used for both servers in the HA2 sample. It specifies the parameters for <server>, <high-availability>, and <runtime>.

The server section specifies the interval for flushing the Query Tables.

The high-availability section specifies the leadership status for each server, which is NON_LEADER. After qtapp starts on a server, the server becomes the leader. A server remains the leader until it shuts down.

The runtime section specifies the high availability application file as the application that starts up the sample. The high availability application has been precompiled as hadisk.sbar, and it starts in a container named ha.

The sample includes startup scripts to start the primary server, start the secondary server, start a feed simulation, and start a dequeue from all streams.

The sample includes several scripts that you can use for system administration. For instance, you can check the status of the servers as well as the status of the lock on the data directory on disk. You can also run a script that clears the lock. These scripts are useful if a server stops running while it has the lock, for example.
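To make the troubleshooting case concrete, the sketch below classifies the lock from two observations: whether a lock is present, and what leadership status each reachable server reports. This is a hypothetical reading of what such a check could decide, not a description of how the delivered scripts work. The function name and the returned labels are assumptions.

```python
def classify_lock(lock_present, leadership):
    """Classify the lock on the data directory.

    leadership maps a server port to "LEADER", "NON_LEADER", or None
    when the server cannot be reached.
    """
    if not lock_present:
        return "free"
    if "LEADER" in leadership.values():
        return "held"      # a running leader owns the lock
    return "stale"         # lock exists but no running server leads


if __name__ == "__main__":
    # The leader on 9901 was killed; 9900 is up but is not the leader.
    print(classify_lock(True, {9900: "NON_LEADER", 9901: None}))
```

Under these assumptions, a lock is only a candidate for clearing when it is classified as stale.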

The application and configuration files delivered with the HA2 sample are described in the following table.

| File Name | Description |
|---|---|
| hadisk.sbapp | High availability application, which runs on both servers |
| ha.sbconf | Configuration file for both servers |
| qtapp.sbapp | The main application holding Query Tables |

The scripts delivered with the HA2 sample are described in the following table.

| Windows Script | UNIX Script | Purpose |
|---|---|---|
| startprimary.cmd | startprimary | Generates precompiled versions (.sbar files) for the applications. Starts the first StreamBase Server instance listening on port 9900. Loads the hadisk application into a container named ha. Uses instructions in the ha.sbconf configuration. |
| startsecondary.cmd | startsecondary | Starts a second StreamBase Server instance listening on port 9901. Loads the hadisk application into a container named ha. Uses instructions in the ha.sbconf configuration. |
| startfeedsim.cmd | startfeedsim | Runs the sbfeedsim command to send input data to the input stream of the qtapp application running in a container named app. The script sends to both servers at the same time, but only one server at a time has qtapp running. |
| startdequeue.cmd | startdequeue | Runs sbc dequeue to begin dequeuing from all streams of the qtapp application. The script runs against both servers at the same time, but only one server at a time has qtapp running. |
| common.cmd | common | A support script called by startprimary and startsecondary. There is no need to run this script directly. For Windows and UNIX, this script sets environment variables. On UNIX, this script also generates precompiled archive files from this sample's EventFlow application files. This occurs the first time a calling script is run, and whenever the EventFlow application files change. |
| createsbar.cmd | -- | Windows only. A support script. If you change either EventFlow application file, run this script without arguments to regenerate the archive files. |
| checklock.cmd | checklock | Checks status of servers and the lock. |
| clearlock.cmd | clearlock | Checks status of servers and the lock and clears the lock if necessary. You rarely need to run this script. |
| testlock.cmd | testlock | A support script called by checklock and clearlock. Do not run this script directly. |

This sample is designed to run in UNIX terminal windows or Windows command prompt windows because StreamBase Studio cannot run more than one instance of a server. Nonetheless, it is recommended that you open the application files in StreamBase Studio to study how the applications are assembled. For an introduction to the design of the sample, see the overview.

This section shows how to get the sample running and suggests some steps to show how the servers, the containers, and the applications work together to ensure high availability. You can, of course, try out other steps of your own, and also use the sample as a starting point for a design pattern that meets your particular requirements.

To get the sample up and running you use scripts that do the following:

- Start the servers.
- Start a feed simulation to all streams.
- Start a dequeue from all streams.

You can use other scripts for troubleshooting the lock on the data.

Because StreamBase Studio can run only one server instance at a time, you must run the two servers in the HA2 sample in terminal or StreamBase Command Prompt windows.

Note

On Windows, be sure to use the StreamBase Command Prompt from the Start menu as described in the Test/Debug Guide, not the default command prompt.

The steps to run the sample are as follows:

- Set up five terminal windows.
  - Open five terminal windows on UNIX, or five StreamBase Command Prompts on Windows.
  - In each window, navigate to the directory where the sample is installed, or to your workspace copy of the sample.

- Start the following processes:
  - In window 1, start the first server with this command: startprimary.

    This script generates precompiled versions of the applications, starts a server listening on port 9900, and loads the high availability application (hadisk) in a container, ha, on that server. The high availability application designates this server as the leader and starts qtapp. You can observe this outcome by running the following command in window 5:

        sbadmin -u :9900 list containers

    This command generates the following output:

        container app
        container ha
        container system

  - In window 2, run startsecondary.

    Similarly, this script starts a server listening on port 9901 and loads the high availability application in a container, ha, on that server. Only hadisk is running on the server in window 2. You can confirm this by running the following command in window 5:

        sbadmin -u :9901 list containers

    This command generates the following output:

        container ha
        container system

  - In window 3, run startfeedsim.

    A feed simulation starts sending input data to both servers. Observe that errors are generated in window 2. You can ignore these errors, which are warnings generated because the feed simulation is attempting to enqueue to both servers and failing on this server because qtapp is not running.

  - In window 4, run startdequeue.

    Dequeuing from qtapp begins. At this point qtapp is running only on the server in window 1.

- In window 5, run the following command to determine the status of both servers:

      sbc -u :9900,:9901 status --verbose

  This command generates a status report line for each server. Notice that the server on port 9900, which is running in window 1, is designated as the leader.

- Test the high availability application by terminating the server in window 1.
  - In window 5, run the following command:

        sbadmin -u :9900 shutdown

    In window 1 the server shuts down. In window 2 the warning messages about enqueue failures stop. In window 3 the feed simulation stops. (You can ignore the error messages in window 3.) Both applications, hadisk and qtapp, are now running on the server in window 2.

  - In window 5, verify that both applications are running in window 2, and not window 1, by issuing the following commands, one for each server:

        sbadmin -u :9901 list containers

    This command generates the following output:

        container app
        container ha
        container system

    Then run the command for the other server:

        sbadmin -u :9900 list containers

    This command generates the following output:

        sbadmin: Unable to connect to ...

  - In window 5, run the following command to determine the status of the server running in window 2:

        sbc -u :9901 status --verbose

    Notice that the server on port 9901, which is running in window 2, is designated the leader.

  - In window 4, notice that dequeuing has stopped. This occurs because the feed simulation process has stopped.
  - In window 3, start the feed simulation by running startfeedsim.
  - In window 4, observe that qtapp starts dequeuing results.
  - In window 1, start the server again by running startprimary.

    Observe that the server starts but is unable to enqueue. This is because qtapp is not running on this server; the server in window 2 is the leader now.

- Use the troubleshooting scripts to check and clear the locks.
  - In window 2, terminate the server abruptly by pressing Ctrl+C.
  - In window 5, find out the status of the lock by running the following command:

        checklock
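The container listings shown in the steps above are simple enough to check programmatically. The sketch below parses that output and reports whether a server currently hosts the app container. The helper names are hypothetical, and the sketch assumes the output keeps the one-line-per-container format shown in this section.

```python
def parse_containers(output):
    """Return the container names from 'list containers' style output."""
    names = []
    for line in output.splitlines():
        parts = line.split()
        # Keep only lines of the form: container <name>
        if len(parts) == 2 and parts[0] == "container":
            names.append(parts[1])
    return names


def hosts_app(output):
    """True if the listing includes the app container."""
    return "app" in parse_containers(output)


if __name__ == "__main__":
    leader_listing = "container app\ncontainer ha\ncontainer system\n"
    standby_listing = "container ha\ncontainer system\n"
    print(hosts_app(leader_listing), hosts_app(standby_listing))
```

An error line such as the connection failure message yields an empty list, so a server that is down is never reported as hosting app.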

In StreamBase Studio, import this sample with the following steps:

- From the top menu, select → .
- Select ha2 from the High Availability list.
- Click OK.

StreamBase Studio creates a single project containing the sample files. The default project name is sample_ha2.

When you load the sample into StreamBase Studio, Studio copies the sample project's files to your Studio workspace, which is normally part of your home directory, with full access rights.

Important

Load this sample in StreamBase Studio, and thereafter use the Studio workspace copy of the sample to run and test it, even when running from the command prompt.

Using the workspace copy of the sample avoids the permission problems that can occur when trying to work with the initially installed location of the sample. The default workspace location for this sample is:

studio-workspace/sample_ha2

See Default Installation Directories for the location of studio-workspace on your system.

In the default TIBCO StreamBase installation, this sample's files are initially installed in:

streambase-install-dir/sample/ha2

See Default Installation Directories for the location of streambase-install-dir on your system. This location may require administrator privileges for write access, depending on your platform.