Adding a Hadoop Data Source from the User Interface

To add an HDFS data source, first make sure the Team Studio server can connect to the hosts, and then use the Add Data Source dialog box to add it to Team Studio.
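A plain TCP connection test run from the Team Studio server is one quick way to confirm that it can reach the cluster hosts before you open the dialog. The Python sketch below is illustrative only. The function name is hypothetical. The ports you pass in would typically be the defaults mentioned in the attribute descriptions, such as 8020 for the NameNode and 8032 for a YARN Resource Manager. A successful connection shows only that the port is open, not that the service behind it is correctly configured.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host name and tries each returned address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # OSError covers DNS lookup failures, refused connections, and timeouts.
        return False
```

For example, `can_connect("namenode.example.com", 8020)` would check a hypothetical NameNode host on the default NameNode port. The timeout bounds the connection attempt only; a slow DNS lookup is not limited by it.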

Prerequisites

You must have data administrator or higher privileges to add a data source. Ensure that you have the correct permissions before continuing.

Procedure

- From the menu, select Data.

- Select Add Data Source.

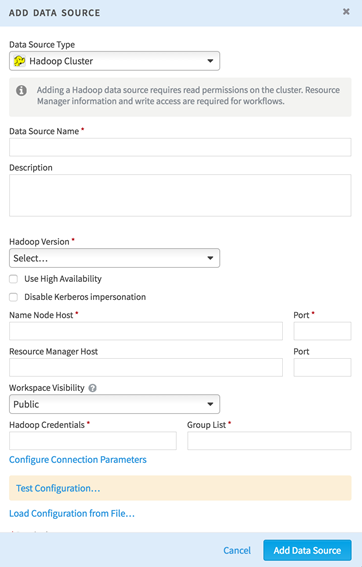

- Choose Hadoop Cluster as the data source type.

- Specify the following data source attributes:

  - Data Source Name: Set a user-facing name for the data source. Choose something meaningful for your team (for example, "Dev_CDH5_cluster").

  - Description: Enter a description for your data source.

  - Hadoop Version: Select the Hadoop distribution that matches your data source.

  - Use High Availability: Select this check box to enable High Availability for the Hadoop cluster.

  - Disable Kerberos Impersonation: If this check box is selected and Kerberos is enabled on your data source, workflows run as the user account configured in Hadoop Credentials. If the check box is cleared, each workflow runs as the user who runs it.

    If Kerberos is not enabled on your data source, you do not need to select this check box. All workflows run as the account configured in Hadoop Credentials.

  - NameNode Host: Enter a single active NameNode to start. Instructions for enabling High Availability are in Step 10, the last step of this procedure. To verify that the NameNode is active, check its web interface (by default, http://namenodehost.localhost:50070/).

  - NameNode Port: Enter the port that your NameNode uses. The default port is 8020.

  - Job Tracker/Resource Manager Host: For MapReduce v1, specify the job tracker host. For YARN, specify the Resource Manager host.

  - Job Tracker/Resource Manager Port: Enter the job tracker or Resource Manager port. Common ports are 8021, 9001, 8012, and 8032.

  - Workspace Visibility: Select one of the two visibility options. To learn more about associating a data source with a workspace, see Data Visibility.

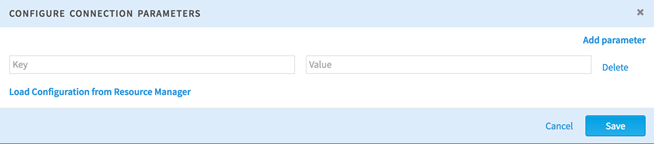

  - Hadoop Credentials: Specify the user or service account used to run MapReduce jobs. This user must be able to run MapReduce jobs from the command line.

  - Group List: Enter the group to which the Hadoop account belongs.

- For further configuration, choose Configure Connection Parameters.
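The NameNode Host attribute should name an active NameNode, and the text suggests checking this in the web interface. The same web server can also be queried programmatically. The Python sketch below assumes that the NameNode serves JMX data as JSON under `/jmx`. It also assumes that the `Hadoop:service=NameNode,name=NameNodeStatus` bean reports the HA state ("active" or "standby") in a `State` field. The function names are hypothetical. Port 50070 is the Hadoop 2 default; Hadoop 3 moved the NameNode web interface to port 9870.

```python
import json
import urllib.request

# Assumption: the NameNode web server exposes JMX data as JSON under /jmx, and the
# NameNodeStatus bean reports the HA state ("active" or "standby") in its "State" field.
NAMENODE_STATUS_QUERY = "/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus"


def parse_namenode_state(jmx_json: str) -> str:
    """Return the lower-cased State value from a NameNodeStatus JMX response."""
    beans = json.loads(jmx_json).get("beans", [])
    if not beans:
        raise ValueError("NameNodeStatus bean not found in the JMX response")
    return str(beans[0].get("State", "unknown")).lower()


def fetch_namenode_state(web_url: str, timeout: float = 5.0) -> str:
    """Query a NameNode web interface, e.g. http://namenodehost.localhost:50070/."""
    url = web_url.rstrip("/") + NAMENODE_STATUS_QUERY
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_namenode_state(response.read().decode("utf-8"))
```

If `fetch_namenode_state` returns "standby" for the host you entered, use the other NameNode as the NameNode Host instead.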

- Specify key-value pairs for YARN on the Team Studio server. Selecting Load Configuration from Resource Manager attempts to populate the configuration values automatically.

Note: Make sure that the directory specified in the staging-dir variable is writable by the Team Studio user. Spark jobs produce errors if the user cannot write to this directory.

Required if different from default:

- yarn.application.classpath

  - The yarn.application.classpath does not need to be updated if the Hadoop cluster is installed in a default location.

  - If the Hadoop cluster is installed in a non-default location and yarn.application.classpath has a value different from the default, the YARN job might fail with a "cannot find the class AppMaster" error. In this case, check the yarn-site.xml file in the cluster configuration folder and enter the same key-value pair in the UI using the Configure Connection Parameters option.

- yarn.app.mapreduce.job.client.port-range
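When the classpath differs from the default, the value to copy is the one in the cluster's yarn-site.xml. Hadoop *-site.xml files store each setting as a property element with name and value children. A short Python sketch can pull out that value and flatten it into one comma-separated line for pasting into Configure Connection Parameters. The function name is hypothetical. The file location is also an assumption: it is often /etc/hadoop/conf/yarn-site.xml, but this varies by distribution.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Union


def read_hadoop_property(xml_text: Union[str, bytes], key: str) -> Optional[str]:
    """Return the value of `key` from *-site.xml content, or None if it is not set."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == key:
            value = prop.findtext("value") or ""
            # Multi-line, comma-separated values (such as classpaths) are joined
            # into a single line with surrounding whitespace removed.
            return ",".join(part.strip() for part in value.split(",") if part.strip())
    return None
```

For example, read the file in binary mode and call `read_hadoop_property(data, "yarn.application.classpath")`. Passing bytes lets the XML parser honor the encoding declared in the file header.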

Recommended (replace FQDN with the fully qualified domain name of the corresponding host):

- mapreduce.jobhistory.address = FQDN:10020

- yarn.resourcemanager.hostname = FQDN

- yarn.resourcemanager.address = FQDN

- yarn.resourcemanager.scheduler.address = FQDN:8030

- yarn.resourcemanager.resource-tracker.address = FQDN:8031

- yarn.resourcemanager.admin.address = FQDN:8033

- yarn.resourcemanager.webapp.address = FQDN:8088

- mapreduce.jobhistory.webapp.address = FQDN:19888

- yarn.application.classpath
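The recommended values follow a fixed pattern of host name plus the ports listed above. The Python sketch below generates them as "key = value" lines. It leaves out yarn.application.classpath, which comes from the cluster's yarn-site.xml. The function names are hypothetical. The optional separate job history host is an assumption for clusters where the job history server does not run on the Resource Manager host. It is not a Team Studio requirement.

```python
from typing import Dict, Optional


def recommended_yarn_params(rm_fqdn: str, history_fqdn: Optional[str] = None) -> Dict[str, str]:
    """Build the recommended key-value pairs using the ports listed in this section."""
    # Assumption: the job history server may run on a host other than the Resource Manager.
    history = history_fqdn or rm_fqdn
    return {
        "mapreduce.jobhistory.address": f"{history}:10020",
        "yarn.resourcemanager.hostname": rm_fqdn,
        "yarn.resourcemanager.address": rm_fqdn,
        "yarn.resourcemanager.scheduler.address": f"{rm_fqdn}:8030",
        "yarn.resourcemanager.resource-tracker.address": f"{rm_fqdn}:8031",
        "yarn.resourcemanager.admin.address": f"{rm_fqdn}:8033",
        "yarn.resourcemanager.webapp.address": f"{rm_fqdn}:8088",
        "mapreduce.jobhistory.webapp.address": f"{history}:19888",
    }


def as_key_value_lines(params: Dict[str, str]) -> str:
    """Render the pairs one per line in the "key = value" form used above."""
    return "\n".join(f"{key} = {value}" for key, value in params.items())
```

Generating the values from one host name avoids mismatched host names across the individual Resource Manager addresses.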

- Save the configuration.

Note: Additional configuration might be needed for different Hadoop distributions.

To connect to a Cloudera (CDH) cluster, follow the instructions above.

Additional, distribution-specific steps apply when connecting to MIT KDC Kerberized clusters, MapR clusters, PHD clusters, and YARN-enabled clusters.

If you do not have all of these parameters yet, you can save your data source as "Incomplete" while working on it. For more information, see Data Source States.

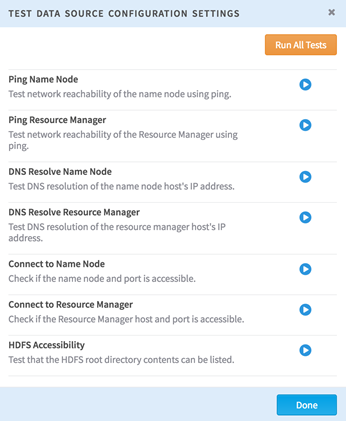

- To run a series of automated tests on the data source, click Test Connection.

- Click Save Configuration to confirm the changes.

- After connectivity to the active NameNode is established, set up NameNode High Availability (HA) if it is enabled on the cluster.

Required:

- dfs.ha.namenodes.nameservice1

- dfs.namenode.rpc-address.nameservice1.namenode<id> (required for each namenode id)

- dfs.nameservices

- dfs.client.failover.proxy.provider.nameservice1
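The HA parameters are derived from the nameservice name and the list of NameNode IDs, with one RPC address entry per ID. The Python sketch below builds them from a mapping of NameNode ID to host:port. It assumes the nameservice and IDs match the cluster's hdfs-site.xml. The nameservice1/namenode1 names and example hosts are placeholders, and the function name is hypothetical. The failover proxy provider is set to Hadoop's ConfiguredFailoverProxyProvider, the common client-side choice. Use whatever class your cluster's hdfs-site.xml specifies if it differs.

```python
from typing import Dict


def namenode_ha_params(nameservice: str, namenodes: Dict[str, str]) -> Dict[str, str]:
    """Build NameNode HA key-value pairs.

    `namenodes` maps each NameNode ID (for example "namenode1") to its RPC
    address in host:port form.
    """
    if len(namenodes) < 2:
        raise ValueError("NameNode HA needs at least two NameNode IDs")
    params = {
        "dfs.nameservices": nameservice,
        f"dfs.ha.namenodes.{nameservice}": ",".join(namenodes),
        # Common HDFS client-side failover provider; match the cluster's own setting.
        f"dfs.client.failover.proxy.provider.{nameservice}":
            "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
    }
    # One rpc-address entry is required for each NameNode ID.
    for nn_id, rpc_address in namenodes.items():
        params[f"dfs.namenode.rpc-address.{nameservice}.{nn_id}"] = rpc_address
    return params
```

For example, `namenode_ha_params("nameservice1", {"namenode1": "nn1.example.com:8020", "namenode2": "nn2.example.com:8020"})` produces the five pairs for a two-NameNode cluster.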

Note: Resource Manager HA is also supported. To configure it, add failover_resource_manager_hosts to the advanced connection parameters and list the available Resource Managers.

If one of the active Resource Managers fails while a job is running, you must rerun the job, but you no longer need to reconfigure the data source. If one of the active Resource Managers fails while no job is running, you do not need to do anything; Team Studio uses another available Resource Manager instead.