This chapter provides high-availability topology examples and their required node deploy configuration.

Example 2, “Application definition configuration” shows the application definition that is used by all of the topology examples. Together with the fragment definition in Example 1, “Fragment definition configuration”, it defines:

- a fragment named `com.tibco.ep.dtmexamples.javafragment.helloworld`.
- dynamic data distribution policies named `dynamic-data-distribution-policy`, `dynamic-credit-distribution-policy`, and `dynamic-debit-distribution-policy`.
- a static data distribution policy named `static-data-distribution-policy`.

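The `dynamic-data-distribution-policy` and `static-data-distribution-policy` policies use a distributed hash data mapper to distribute the application data. The credit and debit policies use round-robin data mappers.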
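
The difference between the two kinds of data mapper can be pictured with a small model. The sketch below is illustrative only: the partition names, the `partition_for` and `round_robin` helpers, and the use of CRC32 as the hash are assumptions made for this example, not the product's actual algorithm or API.

```python
import zlib
from itertools import cycle

# Hypothetical partition names, used only for this illustration.
PARTITIONS = ["P1", "P2", "P3"]


def partition_for(key, partitions=PARTITIONS):
    """Hash mapping: the same key always lands on the same partition."""
    digest = zlib.crc32(key.encode("utf-8"))  # stable hash, chosen for illustration
    return partitions[digest % len(partitions)]


def round_robin(partitions=PARTITIONS):
    """Round-robin mapping: each new object goes to the next partition in turn."""
    return cycle(partitions)


# Hash mapping is driven by the key (or field) value.
hashed = {name: partition_for(name) for name in ["alice", "bob", "carol"]}

# Round-robin mapping ignores object content entirely.
rr = round_robin()
first_four = [next(rr) for _ in range(4)]  # ["P1", "P2", "P3", "P1"]
```

A hash mapper keeps related objects together because placement depends on the key, while a round-robin mapper spreads objects evenly regardless of their content.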

The fragment also defines a custom quorum notifier. The behavior of this notifier is application-specific and is not exposed in the configurable quorum policies.

Example 1. Fragment definition configuration

name = "com.tibco.ep.dtmexamples.javafragment.helloworld"

version = "1.0.0"

type = "com.tibco.ep.dtm.configuration.application"

configuration =

{

FragmentDefinition =

{

description = "hello world fragment"

customQuorumNotifiers =

{

"my-quorum-notifier" =

{

notifierClassName = "com.tibco.ep.dtmexamples.javafragment.helloworld.QuorumNotifier"

}

}

dataMappers =

{

"dynamic-data-mapper" =

{

distributedHashDataMappers =

[

{

className = "com.tibco.ep.dtm.HelloWorld"

key = "ByName"

}

]

},

"dynamic-credit-data-mapper" =

{

roundRobinDataMappers =

[

{

className = "com.tibco.ep.dtm.payments.Credit"

}

]

},

"dynamic-debit-data-mapper" =

{

roundRobinDataMappers =

[

{

className = "com.tibco.ep.dtm.payments.Debit"

}

]

},

"static-data-mapper" =

{

distributedHashDataMappers =

[

{

className = "com.tibco.ep.dtm.WorldHello"

field = "name"

}

]

}

}

}

}

Example 2. Application definition configuration

name = "MyApplication"

version = "1.0.0"

type = "com.tibco.ep.dtm.configuration.application"

configuration =

{

ApplicationDefinition =

{

dataDistributionPolicies =

{

"dynamic-data-distribution-policy" =

{

type = "DYNAMIC"

dataMappers =

[

"dynamic-data-mapper"

]

customQuorumNotifiers =

[

"my-quorum-notifier"

]

dynamicDataDistributionPolicy =

{

numberOfPartitions = 3

primaryDataRedundancy =

{

numberOfReplicas = 6

replicationType = SYNCHRONOUS

}

backupDataRedundancy =

{

numberOfReplicas = 4

replicationType = ASYNCHRONOUS

}

}

},

"dynamic-credit-distribution-policy" =

{

type = "DYNAMIC"

dataMappers =

[

"dynamic-credit-data-mapper"

]

},

"dynamic-debit-distribution-policy" =

{

type = "DYNAMIC"

dataMappers =

[

"dynamic-debit-data-mapper"

]

},

"static-data-distribution-policy" =

{

type = "STATIC"

dataMappers =

[

"static-data-mapper"

]

disableAction = "LEAVE_CLUSTER_FORCE"

enableAction = "JOIN_CLUSTER"

forceReplication = false

numberOfThreads = 1

objectsLockedPerTransaction = 1001

remoteEnableAction = "ENABLE_PARTITION"

staticDataDistributionPolicy =

{

replicaAudit = "WAIT_ACTIVE"

sparseAudit = "VERIFY_NODE_LIST"

}

}

}

}

}

The examples in this section demonstrate common deployment topologies and the node deploy configuration that each requires. They are not intended as templates for deploying highly available business solutions, which must also take network and hardware redundancy into account.

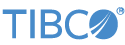

This example configuration has these characteristics:

- All clients connect to node A.
- All client processing is done on node A.
- A static data distribution policy is used to replicate data from node A to node B to ensure no data loss due to a failure.
- Node B takes over all processing if node A fails. Clients reconnect to node B to avoid a service outage.
- No quorum policy is configured.
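
The failover behavior described in this list can be modeled in a few lines. This is a toy model under stated assumptions: the `BINDINGS` table mirrors the ACTIVE and REPLICA roles used for this topology, and `serving_node` is a hypothetical helper, not a product API.

```python
# Roles for the single partition in the active/stand-by topology.
BINDINGS = {"partition": {"A.X": "ACTIVE", "B.X": "REPLICA"}}


def serving_node(partition, failed=frozenset()):
    """Return the node that serves the partition.

    The ACTIVE node is preferred; if it has failed, the first surviving
    REPLICA takes over. Returns None when no node is left.
    """
    roles = BINDINGS[partition]
    for wanted in ("ACTIVE", "REPLICA"):
        for node, role in roles.items():
            if role == wanted and node not in failed:
                return node
    return None


normal = serving_node("partition")                # "A.X"
after_failure = serving_node("partition", {"A.X"})  # "B.X"
```

Because node B holds a full replica, it can take over without data loss, which is why clients only need to reconnect.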

Example 3. Active/stand-by node deploy configuration

name = "two-node-active-standby"

version = "1.0"

type = "com.tibco.ep.dtm.configuration.node"

configuration =

{

NodeDeploy =

{

nodes =

{

"A.X" =

{

availabilityZoneMemberships =

{

"active-stand-by" =

{

staticPartitionBindings =

{

"partition" =

{

type = "ACTIVE"

}

}

}

}

}

"B.X" =

{

availabilityZoneMemberships =

{

"active-stand-by" =

{

staticPartitionBindings =

{

"partition" =

{

type = "REPLICA"

}

}

}

}

}

}

availabilityZones =

{

"active-stand-by" =

{

dataDistributionPolicy = "static-data-distribution-policy"

staticPartitionPolicy =

{

staticPartitions =

{

"partition" = { }

}

}

}

}

}

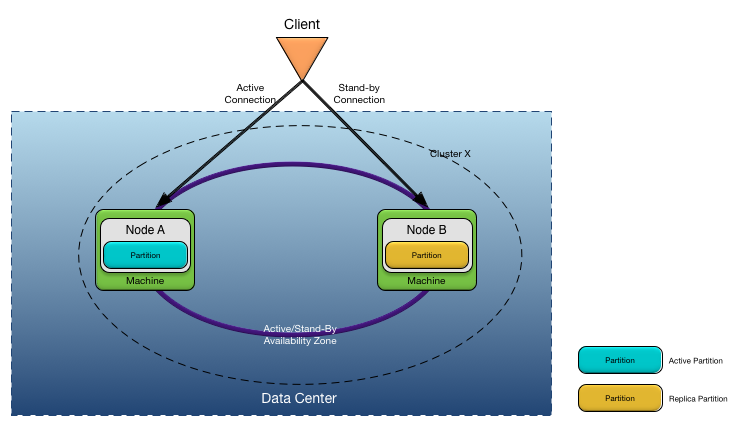

}This example configuration has these characteristics:

- Clients can connect to either node.
- Processing is done on both nodes.
- A static data distribution policy is used to partition and replicate data, both to distribute the data across the two nodes and to ensure no data loss due to a failure.
- No quorum policy is configured.

Example 4. Two node active/active node deploy configuration

name = "two-node-active-active"

version = "1.0"

type = "com.tibco.ep.dtm.configuration.node"

configuration =

{

NodeDeploy =

{

nodes =

{

"A.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

staticPartitionBindings =

{

"P1" =

{

type = "ACTIVE"

},

"P2" =

{

type = "REPLICA"

}

}

}

}

}

"B.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

staticPartitionBindings =

{

"P1" =

{

type = "REPLICA"

},

"P2" =

{

type = "ACTIVE"

}

}

}

}

}

}

availabilityZones =

{

"active-active" =

{

dataDistributionPolicy = "static-data-distribution-policy"

staticPartitionPolicy =

{

enableOrder = "CONFIGURATION"

disableOrder = "REVERSE_CONFIGURATION"

staticPartitions =

{

"P1" = { },

"P2" = { },

}

}

}

}

}

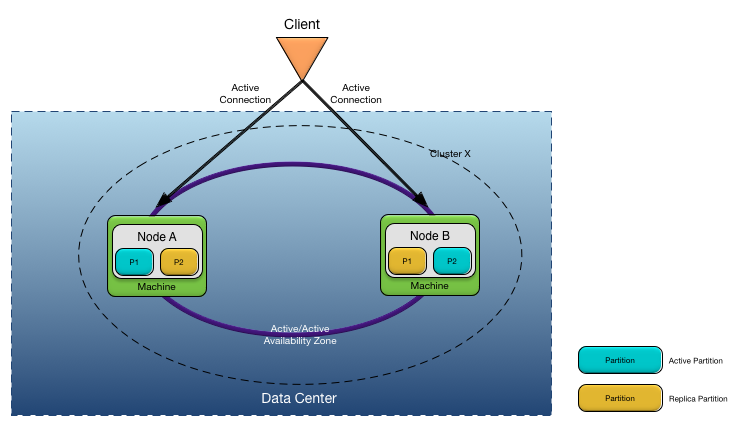

}This example configuration has these characteristics:

- Clients can connect to any node.
- Processing is done on all nodes.
- A static data distribution policy is used to partition and replicate data to evenly distribute the data across all nodes and to ensure no data loss due to a failure.
- A quorum policy is configured to avoid multi-master.
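
The reason a quorum policy avoids multi-master can be shown with simple vote arithmetic. The sketch below assumes the values used for this topology (one vote per node and a minimum of two votes); `has_quorum` is a hypothetical helper written for illustration, not the product's quorum implementation.

```python
# One vote per node, as in the three-node topology.
VOTES = {"A.X": 1, "B.X": 1, "C.X": 1}
MINIMUM_NUMBER_OF_VOTES = 2


def has_quorum(visible_nodes):
    """A group of nodes keeps quorum only if its combined votes reach the minimum."""
    return sum(VOTES[node] for node in visible_nodes) >= MINIMUM_NUMBER_OF_VOTES


# A network split that isolates node A from nodes B and C.
isolated_side = has_quorum({"A.X"})          # False: 1 vote
majority_side = has_quorum({"B.X", "C.X"})   # True: 2 votes
```

With three votes in total and a minimum of two, at most one side of any network split can hold quorum, so two sides can never both act as master for the same partition.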

Example 5. Three node active/active node deploy configuration

name = "three-node-active-active"

version = "1.0"

type = "com.tibco.ep.dtm.configuration.node"

configuration =

{

NodeDeploy =

{

nodes =

{

"A.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

votes = 1

staticPartitionBindings =

{

"P1" =

{

type = "ACTIVE"

},

"P2" =

{

type = "REPLICA"

}

}

}

}

}

"B.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

votes = 1

staticPartitionBindings =

{

"P2" =

{

type = "ACTIVE"

},

"P3" =

{

type = "REPLICA"

}

}

}

}

},

"C.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

votes = 1

staticPartitionBindings =

{

"P1" =

{

type = "REPLICA"

},

"P3" =

{

type = "ACTIVE"

}

}

}

}

}

}

availabilityZones =

{

"active-active" =

{

dataDistributionPolicy = "static-data-distribution-policy"

minimumNumberOfVotes = 2

staticPartitionPolicy =

{

enableOrder = "CONFIGURATION"

disableOrder = "REVERSE_CONFIGURATION"

staticPartitions =

{

"P1" = { },

"P2" = { },

"P3" = { }

}

}

}

}

}

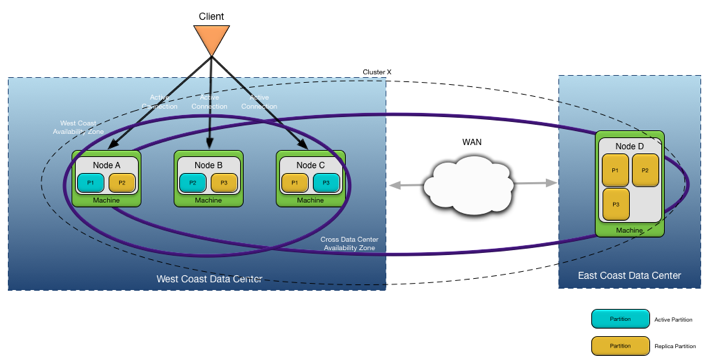

}This example configuration has these characteristics:

- Clients can connect to any node in the west coast data center.
- Processing is done on all nodes in the west coast data center.
- A static data distribution policy is used to replicate data both within the west coast data center and across the WAN to the east coast data center for disaster recovery.
- Replication to the east coast data center is done asynchronously to minimize processing latency.
- A quorum policy is configured only for the nodes in the West Coast availability zone. The node in the east coast data center does not participate in the quorum.
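
The replica placement for this topology can be summarized per partition. The sketch below transcribes the partition bindings from the disaster recovery node deploy configuration into a Python table. It assumes that a replica with no explicit replication setting is synchronous; that default is an assumption of this sketch, and `placement` is a hypothetical helper.

```python
# (role, replication) per node and partition; None means no explicit setting.
BINDINGS = {
    "A.X": {"P1": ("ACTIVE", None), "P2": ("REPLICA", None)},
    "B.X": {"P2": ("ACTIVE", None), "P3": ("REPLICA", None)},
    "C.X": {"P1": ("REPLICA", None), "P3": ("ACTIVE", None)},
    "D.X": {
        "P1": ("REPLICA", "ASYNCHRONOUS"),
        "P2": ("REPLICA", "ASYNCHRONOUS"),
        "P3": ("REPLICA", "ASYNCHRONOUS"),
    },
}


def placement(partition):
    """Group the nodes that host a partition by role and replication type."""
    result = {"active": None, "sync": [], "async": []}
    for node, partitions in BINDINGS.items():
        if partition not in partitions:
            continue
        role, replication = partitions[partition]
        if role == "ACTIVE":
            result["active"] = node
        elif replication == "ASYNCHRONOUS":
            result["async"].append(node)
        else:
            result["sync"].append(node)  # assumption: unspecified means synchronous
    return result


p1 = placement("P1")  # active A.X, sync replica C.X, async replica D.X
```

Each partition has one active node and one local replica in the west coast data center, plus an asynchronous replica on node D in the east coast data center.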

Example 6. Three node active/active with disaster recovery node deploy configuration

name = "three-node-active-active-dr"

version = "1.0"

type = "com.tibco.ep.dtm.configuration.node"

configuration =

{

NodeDeploy =

{

nodes =

{

"A.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

staticPartitionBindings =

{

"P1" =

{

type = "ACTIVE"

},

"P2" =

{

type = "REPLICA"

}

}

},

"west-coast-data-center-nodes" =

{

votes = 1

}

}

}

"B.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

staticPartitionBindings =

{

"P2" =

{

type = "ACTIVE"

},

"P3" =

{

type = "REPLICA"

}

}

}

"west-coast-data-center-nodes" =

{

votes = 1

}

}

},

"C.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

staticPartitionBindings =

{

"P1" =

{

type = "REPLICA"

},

"P3" =

{

type = "ACTIVE"

}

}

}

"west-coast-data-center-nodes" =

{

votes = 1

}

}

}

"D.X" =

{

availabilityZoneMemberships =

{

"active-active" =

{

staticPartitionBindings =

{

"P1" =

{

type = "REPLICA"

replication = "ASYNCHRONOUS"

},

"P2" =

{

type = "REPLICA"

replication = "ASYNCHRONOUS"

},

"P3" =

{

type = "REPLICA"

replication = "ASYNCHRONOUS"

}

}

}

}

}

}

availabilityZones =

{

"active-active" =

{

dataDistributionPolicy = "static-data-distribution-policy"

staticPartitionPolicy =

{

enableOrder = "CONFIGURATION"

disableOrder = "REVERSE_CONFIGURATION"

staticPartitions =

{

"P1" = { },

"P2" = { },

"P3" = { }

}

}

}

"west-coast-data-center-nodes" =

{

minimumNumberOfVotes = 2

}

}

}

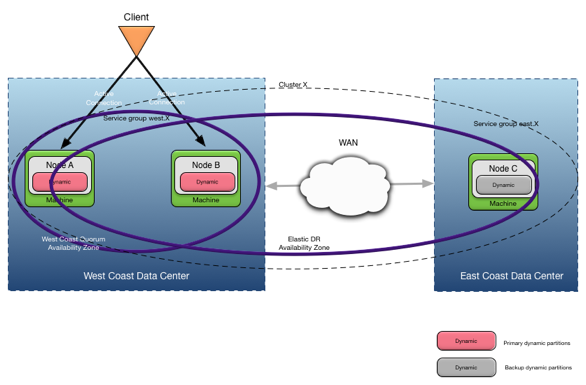

}This example configuration has these characteristics:

- Clients connect to the nodes in the west coast data center.
- Processing is done on any node in the west coast data center.
- A dynamic data distribution policy is used to evenly distribute the data over the nodes in the west coast data center.
- Replication to the east coast data center is for disaster recovery. Replication is done asynchronously to minimize processing latency.
- A quorum policy is configured only for the nodes in the west coast data center. The node in the east coast data center does not participate in the quorum.
- Service groups are used to support pattern matching for quorum membership and dynamic data distribution policy roles.
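
The effect of pattern-based membership can be sketched as follows. The node names and patterns come from this topology, but the matching rule is an assumption: this text does not state how the product interprets patterns, so the sketch treats them as shell-style globs purely for illustration. The `members` helper is hypothetical.

```python
from fnmatch import fnmatchcase

# Node names from the elastic scaling topology.
NODES = ["A.west.X", "B.west.X", "C.east.X"]


def members(pattern, nodes=NODES):
    """Return the nodes whose names match the pattern (glob semantics assumed)."""
    return [node for node in nodes if fnmatchcase(node, pattern)]


primaries = members("*.west.X")  # nodes that take the primary role
backups = members("*.east.X")    # nodes that take the backup role
```

Under this assumption, a new node named, for example, `D.west.X` would join the primary group without any change to the availability zone definition, which is what makes the topology elastic.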

Example 7. Elastic scaling with disaster recovery node deploy configuration

name = "elastic-dr"

version = "1.0"

type = "com.tibco.ep.dtm.configuration.node"

configuration =

{

NodeDeploy =

{

nodes =

{

"A.west.X" =

{

availabilityZoneMemberships =

{

"elastic-dr" = { }

}

}

"B.west.X" =

{

availabilityZoneMemberships =

{

"elastic-dr" = { }

}

}

"C.east.X" =

{

availabilityZoneMemberships =

{

"elastic-dr" = { }

}

}

}

availabilityZones =

{

"elastic-dr" =

{

dataDistributionPolicy = "dynamic-data-distribution-policy"

dynamicPartitionPolicy =

{

primaryMemberPattern = "*.west.X"

backupMemberPattern = "*.east.X"

}

}

"west-coast-quorum" =

{

minimumNumberOfVotes = 2

memberPattern = "*.west.X"

}

}

}

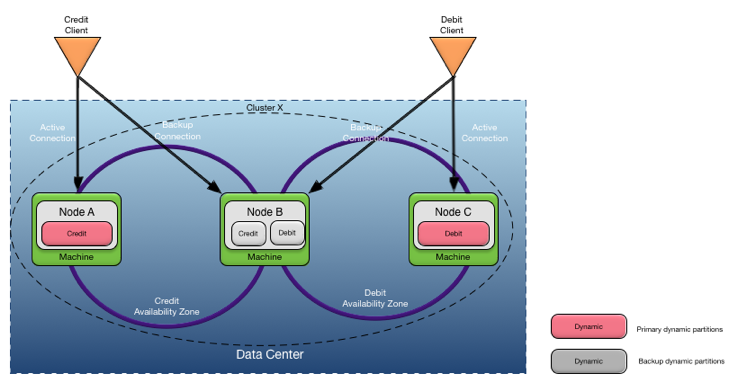

}This example configuration has these characteristics:

- Credit clients connect to Node A, the primary processing node for credit.
- Debit clients connect to Node C, the primary processing node for debit.
- A single node, Node B, provides redundancy for both the credit and debit processing nodes.
- Separate dynamic data distribution policies are used for the credit and debit data.
- Credit and debit processing is separated into two availability zones.
- No quorum is configured.
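
The way one node can back up two availability zones is easier to see when the memberships are inverted. The sketch below copies the zone names and roles from this topology into a Python table; `roles_for` is a hypothetical helper used only for illustration.

```python
# Role of each node within each availability zone.
ZONES = {
    "elastic-credit": {"A.X": "PRIMARY", "B.X": "BACKUP"},
    "elastic-debit": {"C.X": "PRIMARY", "B.X": "BACKUP"},
}


def roles_for(node):
    """Return the zones a node belongs to and its role in each."""
    return {zone: members[node] for zone, members in ZONES.items() if node in members}


node_b = roles_for("B.X")  # backup in both zones
node_a = roles_for("A.X")  # primary in the credit zone only
```

Node B is the only node that appears in both zones, so it carries the backup copy of both the credit and the debit data.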

Example 8. Elastic scaling with multiple availability zones node deploy configuration

name = "elastic-multi-zone"

version = "1.0"

type = "com.tibco.ep.dtm.configuration.node"

configuration =

{

NodeDeploy =

{

nodes =

{

"A.X" =

{

availabilityZoneMemberships =

{

"elastic-credit" =

{

dynamicPartitionBinding =

{

type = "PRIMARY"

}

}

}

}

"B.X" =

{

availabilityZoneMemberships =

{

"elastic-credit" =

{

dynamicPartitionBinding =

{

type = "BACKUP"

}

},

"elastic-debit" =

{

dynamicPartitionBinding =

{

type = "BACKUP"

}

}

}

}

"C.X" =

{

availabilityZoneMemberships =

{

"elastic-debit" =

{

dynamicPartitionBinding =

{

type = "PRIMARY"

}

}

}

}

}

availabilityZones =

{

"elastic-credit" =

{

dataDistributionPolicy = "dynamic-credit-distribution-policy"

}

"elastic-debit" =

{

dataDistributionPolicy = "dynamic-debit-distribution-policy"

}

}

}

}