Decision Tree Output Troubleshooting

When you analyze the results of a Decision Tree model, keep in mind the most important assessment factors described below.

Assessing a Decision Tree

When analyzing the results of a Decision Tree model, the most important assessment factors are three Decision Tree Operator output patterns, any of which should make a modeler question the validity of the model: single-node trees, repetition, and replication.

Single-node trees, repetition, or replication might indicate to the modeler that a modeling method other than Decision Trees should be used.
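A single-node tree is the simplest of these conditions to check for in code. The minimal sketch below assumes a hypothetical `Node` class for a fitted binary tree (an illustration only, not the Decision Tree Operator's output format). `majority_leaf` builds the one-leaf tree that labels every row with the most frequently occurring class, and `is_single_node` flags a tree whose root never split.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Node:
    """Hypothetical tree node: an internal node tests `attribute <= threshold`;
    a leaf carries only a class `label`."""
    attribute: Optional[str] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None   # branch taken when attribute <= threshold
    right: Optional["Node"] = None  # branch taken otherwise
    label: Optional[str] = None

    @property
    def is_leaf(self) -> bool:
        return self.attribute is None


def majority_leaf(labels: Sequence[str]) -> Node:
    """Build a one-leaf tree labelled with the most frequent class."""
    most_common_label, _ = Counter(labels).most_common(1)[0]
    return Node(label=most_common_label)


def is_single_node(tree: Node) -> bool:
    """True when the root is already a leaf, i.e. the tree never split."""
    return tree.is_leaf


def predict(tree: Node, row: dict) -> str:
    """Follow the tests from the root down to a leaf and return its label."""
    node = tree
    while not node.is_leaf:
        node = node.left if row[node.attribute] <= node.threshold else node.right
    return node.label


tree = majority_leaf(["no", "yes", "no", "no", "yes"])
print(is_single_node(tree))        # True
print(predict(tree, {"age": 30}))  # no
print(predict(tree, {"age": 70}))  # no
```

Because the root has no test, every row receives the same label regardless of its attribute values, which is why such a tree carries no useful classification structure.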

- Single Tree Nodes

- For the extreme case of data that does not have any sort of classification structure to it, the Decision Tree output would simply be a single-node tree that assigns elements the label of the most frequently occurring class.

- Repetition

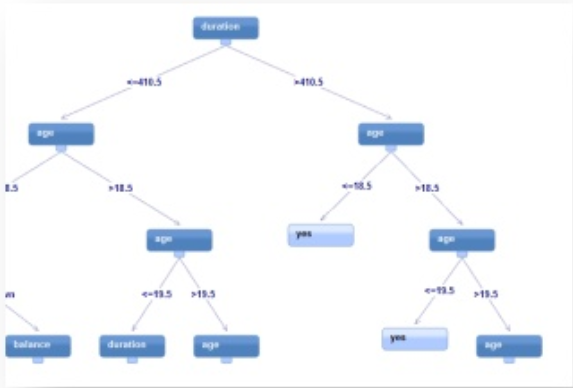

- Another anomaly that might be seen for Decision Tree output is a tree with repetition, whereby an attribute is repeatedly tested along a given branch of a tree. For example, the following tree shows the attribute age repeatedly being compared to different threshold values down the tree.

- Replication

- Another problem to watch out for is when replication or duplicate sub-trees exist within a tree. This issue could be potentially solved by creating combination variables, or it could be an acceptable model with the replication.
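Repetition and replication can both be detected by walking the tree. The minimal sketch below assumes a hypothetical nested `Node` representation (an illustration only, not the Decision Tree Operator's output format). `repeated_attributes` collects attributes tested more than once along a single root-to-leaf path, and `replicated_subtrees` returns internal subtrees that occur more than once. The attribute names and thresholds in the usage lines are made up.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass(frozen=True)
class Node:
    """Hypothetical tree node: an internal node tests `attribute <= threshold`;
    a leaf carries only a class `label`."""
    attribute: Optional[str] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None   # branch taken when attribute <= threshold
    right: Optional["Node"] = None  # branch taken otherwise
    label: Optional[str] = None

    @property
    def is_leaf(self) -> bool:
        return self.attribute is None


def repeated_attributes(node: Node, path: Tuple[str, ...] = ()) -> Set[str]:
    """Repetition: attributes tested more than once on some root-to-leaf path."""
    if node.is_leaf:
        return {attr for attr, n in Counter(path).items() if n > 1}
    path = path + (node.attribute,)
    return repeated_attributes(node.left, path) | repeated_attributes(node.right, path)


def replicated_subtrees(root: Node) -> List[Node]:
    """Replication: internal subtrees that occur more than once in the tree.
    Internal nodes nested inside a duplicated subtree are reported as well."""
    occurrences: Counter = Counter()

    def walk(node: Node) -> None:
        if node.is_leaf:
            return
        # Frozen dataclasses compare and hash by field values, so two
        # structurally identical subtrees count as the same key.
        occurrences[node] += 1
        walk(node.left)
        walk(node.right)

    walk(root)
    return [subtree for subtree, n in occurrences.items() if n > 1]


yes, no = Node(label="yes"), Node(label="no")

# Repetition: `age` is tested twice along the same branch.
rep_tree = Node("age", 40, Node("age", 25, yes, no), no)
print(repeated_attributes(rep_tree))  # {'age'}

# Replication: the same `income` subtree hangs under two different branches.
shared = Node("income", 50000, yes, no)
repl_tree = Node("student", 0.5, shared, Node("credit", 0.5, shared, no))
print(len(replicated_subtrees(repl_tree)))  # 1
```

Since internal nodes nested inside a duplicated subtree are counted too, a fuller check might keep only the largest duplicates before reporting them.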

Copyright © 2021. Cloud Software Group, Inc. All Rights Reserved.