Converts an HDFS tabular data set to the Spotfire binary data frame (SBDF) format. The SBDF files are stored in the same workspace as the workflow and can be downloaded for use in TIBCO Spotfire.

Information at a Glance

| Category | Tools |
|---|---|
| Data source type | HD |
| Sends output to other operators | No |
| Data processing tool | n/a |

Note: The Export to SBDF (HD) operator is for Hadoop data only. For database data, use the Export to SBDF (DB) operator.

Input

A single HDFS tabular data set. Avro and Parquet inputs are not supported.

- Bad or Missing Values
  - Null and empty values in the inputs are converted to blank cells in the SBDF file.
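The null-handling rule can be illustrated with a small Python sketch. It is not the operator's implementation: the function names are hypothetical, a null input field is modeled as `None`, and a blank SBDF cell is also modeled as `None`.

```python
from typing import List, Optional, Sequence


def to_sbdf_cell(value: Optional[str]) -> Optional[str]:
    """Map one raw input field to the value written to an SBDF cell.

    Assumptions (hypothetical model): a null field arrives as None,
    and a blank SBDF cell is represented here as None as well.
    """
    # Both null and empty-string fields become blank cells.
    if value is None or value == "":
        return None
    return value


def to_sbdf_row(fields: Sequence[Optional[str]]) -> List[Optional[str]]:
    """Apply the null/empty rule to every field of one input row."""
    return [to_sbdf_cell(field) for field in fields]
```

For example, `to_sbdf_row(["a", "", None, "0"])` returns `["a", None, None, "0"]`: only null and empty fields become blank, while non-empty values such as `"0"` pass through unchanged.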

Restrictions

By default, the custom operator can export at most 10 GB of data. You can change this limit by setting the following property (in megabytes) in the alpine.conf file.

Note: Raising this limit might overload the memory already reserved for Team Studio.

```
custom_operator.sbdf_export.max_mb_input_size_alpine_server=10240
```
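As a rough illustration of how the limit applies, the following Python sketch reads the property from alpine.conf-style text and checks an input size against it. The helper names are hypothetical, and the sketch assumes the property is written as a simple `key=value` line and that 1 MB means 1024 × 1024 bytes; Team Studio's own parsing and size accounting may differ.

```python
# Property name exactly as documented for alpine.conf.
LIMIT_KEY = "custom_operator.sbdf_export.max_mb_input_size_alpine_server"
# Documented default: 10240 MB, that is, 10 GB.
DEFAULT_LIMIT_MB = 10240


def read_limit_mb(conf_text: str) -> int:
    """Return the export limit in MB found in alpine.conf-style text.

    Assumption: the property is written as a single key=value line.
    Lines that do not match the key are ignored; the last match wins.
    If the key is absent, the documented default is returned.
    """
    limit_mb = DEFAULT_LIMIT_MB
    for line in conf_text.splitlines():
        key, sep, value = line.strip().partition("=")
        if sep and key.strip() == LIMIT_KEY:
            limit_mb = int(value.strip())
    return limit_mb


def fits_limit(input_bytes: int, limit_mb: int) -> bool:
    """Check an input size against the limit.

    Assumption: 1 MB is counted as 1024 * 1024 bytes.
    """
    return input_bytes <= limit_mb * 1024 * 1024
```

Under these assumptions, with the default of 10240 an input of exactly 10 × 1024³ bytes passes the check and one byte more does not.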

Configuration

| Parameter | Description |
|---|---|
| Notes | Any notes or helpful information about this operator's parameter settings. When you enter content in the Notes field, a yellow asterisk is displayed on the operator. |
| File Output Name (required) | The name of the SBDF file stored in the Team Studio workspace. Omit the .sbdf file extension; it is appended to the file name automatically. |
| Overwrite if Exists (required) | Select true or false. If the SBDF file already exists and this option is true, the file is overwritten: a new version of the SBDF file is created in the workspace, and previous versions remain accessible through the work file version-control button. If a work file of the same name already exists and this option is false, the operator fails with an error message that notifies the user of the existing work file. |
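The File Output Name and Overwrite if Exists behavior can be modeled with a hypothetical in-memory workspace in Python. The `Workspace` class and `WorkFileExistsError` exception are illustrative assumptions, not Team Studio APIs; the sketch only captures the documented rules: the .sbdf extension is appended automatically, overwriting adds a new version while keeping earlier ones, and exporting to an existing name with overwrite disabled fails.

```python
from typing import Dict, List


class WorkFileExistsError(Exception):
    """Raised when the target work file exists and overwriting is disabled."""


class Workspace:
    """Hypothetical in-memory stand-in for a versioned Team Studio workspace."""

    def __init__(self) -> None:
        # Work-file name -> stored versions, oldest first.
        self._files: Dict[str, List[bytes]] = {}

    def export_sbdf(self, output_name: str, data: bytes, overwrite: bool) -> str:
        """Store data under output_name + ".sbdf" and return the file name."""
        # The extension is appended automatically, so callers omit it.
        file_name = output_name + ".sbdf"
        versions = self._files.get(file_name)
        if versions is None:
            # First export under this name: create the work file.
            self._files[file_name] = [data]
        elif overwrite:
            # Overwrite adds a new version; earlier versions stay accessible.
            versions.append(data)
        else:
            # Overwrite disabled and the name is taken: fail with an error.
            raise WorkFileExistsError(f"work file {file_name!r} already exists")
        return file_name

    def versions(self, file_name: str) -> List[bytes]:
        """Return a copy of every stored version of a work file."""
        return list(self._files.get(file_name, []))
```

For example, exporting a hypothetical output named `sales` twice with overwrite set to true leaves two versions of `sales.sbdf`, while a second export with overwrite set to false raises `WorkFileExistsError`.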

Output

- Visual Output
- Data Output
  - None. This is a terminal operator.

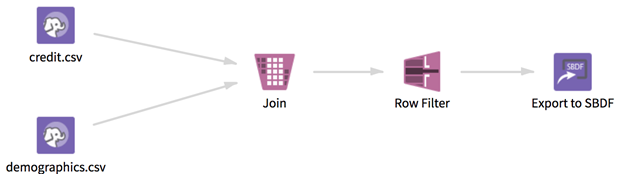

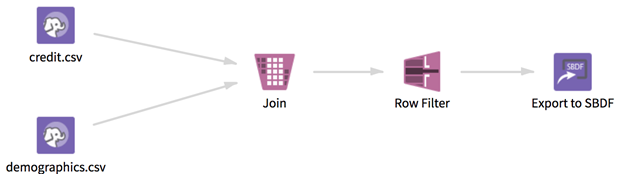

Example

Copyright © Cloud Software Group, Inc. All rights reserved.