Window Functions - Aggregate

Unlike regular aggregate functions calls, allows you to create aggregate variables for each input row, based on the specified frame (with an optional order).

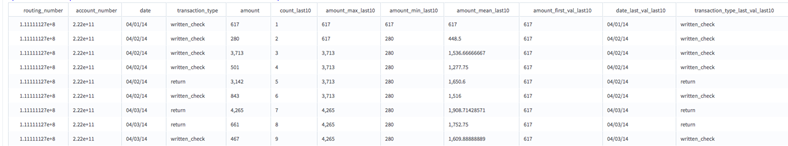

Information at a Glance

The Aggregate operator supports the count, max, min, sum, mean, first_value, and last_value functions. A potential use case might be determining the average number of sales per department store, averaging the amount over the last 30 days or just for the last 10 transactions.

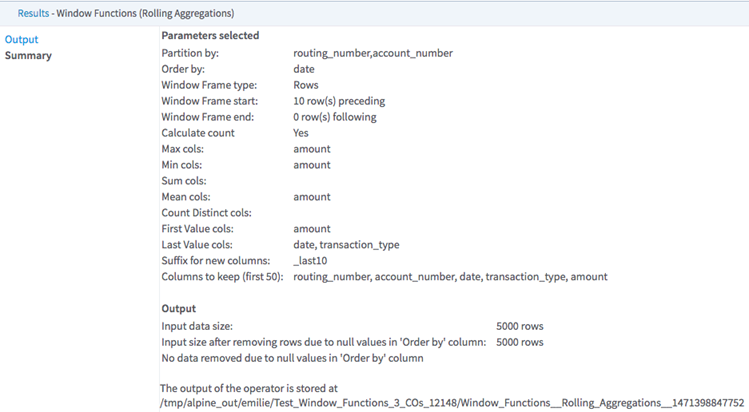

The window functions operators allow you compute database-like window functions on top of Hadoop by leveraging Spark SQL. To learn more about how window functions are implemented in Spark SQL, see this tutorial.

A window function calculates a return value for every input row of an input based on a specific group of user-defined rows called the frame. Every input row has a unique frame associated with it.

Team Studio provides two other distinct window functions operators: Rank and Lag/Lead.

Input

One data set from HDFS. The data set must have some numeric columns on which to compute numeric aggregations, and might include partition by column(s) and order by columns of any type. Datetime columns must have a format specified in the input (for example, Datetime 'MM/dd/yy').

- Bad or Missing Values

- Dirty data: When parsing delimited data, the window functions operators remove dirty data (such as strings in numeric columns, doubles in integer columns, or rows with the incorrect number of values) as it parses. These rows are silently removed because Spark is incapable of handling them.

Null values: Before calculating any of the window functions, the operator filters any rows that contain null values in the Order By column selected. The operator then processes these rows with null values according to the value of the Write Rows Removed Due to Null Data To File parameter. The number of rows removed due to null data is reported in the Summary tab of the visual output (if you do not select Do Not Write or Count Null Rows (Fastest).

Restrictions

Wide data: This operator works quickly on long data, but performance might slow down dramatically if window functions are calculated on thousands of columns. Increasing Spark's executor memory might improve performance.

Datetime columns: Input datetime columns must have a format specified in the input (for example, Datetime 'MM/dd/yy'); otherwise, the operator returns null values for the whole column.

Configuration

| Parameter | Description |

|---|---|

| Notes | Any notes or helpful information about this operator's parameter settings. When you enter content in the Notes field, a yellow asterisk is displayed on the operator. |

| Partition By | The column(s) to partition by. You must enter a value for Partition By and/or Order By. |

| Order By | The column to order each partition by (all data types are supported).

You must enter a value for Partition By and/or Order By. |

| Window Frame Boundaries | Specify frame boundaries type of

Rows or

Range.

If Rows (the default) is selected, the specified Frame Start/Frame End value refers to the number of rows before/after the current row. If Range is selected, the specified Frame Start/Frame End value refers to the number of units off (in Order By column) before/after the current row. If Range is selected, the Order By column must be numeric. |

| Frame Start (inclusive) | Where the frame starts (rows or units off from the current row). |

| Frame End (inclusive) | Where the frame ends (rows or units off from the current row).

Default value is 0 (= current row). |

| Calculate Count | Specify whether the operator reports the number of rows in the frame selected from the current row.

Default value: No. |

| Find Maximum | The maximum value for each of these columns (within each partition and for the frame selected). |

| Find Minimum | The minimum value for each of these columns (within each partition and for the frame selected). |

| Calculate Sum | The sum for each of these columns (within each partition and for the frame selected). |

| Calculate Mean | The mean for each of these columns (within each partition and for the frame selected). |

| Find First Value | The first value for each of these columns (within each partition and for the frame selected). |

| Find Last Value | The last value for each of these columns (within each partition and for the frame selected). |

| Suffix for New Columns | A suffix to append to new columns created (optional). |

| Columns to Keep | The columns to keep in the output. |

| Write Rows Removed Due to Null Data To File

*required |

Rows with null values in the selected

Order By column are removed from the analysis. This parameter allows you to specify whether the data with null values is written to a file.

The file is written to the same directory as the rest of the output. The file name is suffixed with _baddata.

|

| Storage Format | Select the format in which to store the results. The storage format is determined by your type of operator.

Typical formats are Avro, CSV, TSV, or Parquet. |

| Compression | Select the type of compression for the output.

Available Avro compression options. |

| Output Directory | The location to store the output files. |

| Output Name | The name to contain the results. |

| Overwrite Output | Specifies whether to delete existing data at that path. |

| Advanced Spark Settings Automatic Optimization |

|

Output

- Visual Output

- Each operator returns visual output with two tabs: Output and Summary.